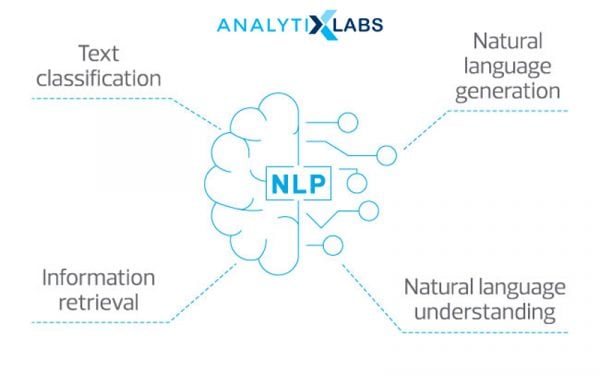

Natural Language Processing (NLP) is a field that enables humans to communicate with computers, or rather computer programs, using human languages (natural languages) such as English. The input can be either text or speech. NLP helps computers understand and interpret these languages and respond in a valid manner. It is an attempt to reduce the communication gap between humans and machines.

NLP is one of the major and most actively studied areas of Artificial Intelligence. It is used in everyday applications such as Google Assistant, Siri, Google Translate, and Alexa. NLP typically involves dividing the input into smaller pieces, analyzing the relationships between them, and then producing meaningful output.

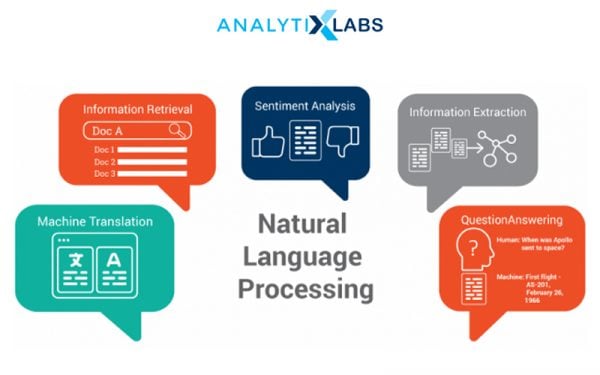

The Most Common Use-cases of NLP are:

- Sentiment Analysis

- Text Summarization

- Content Categorization

- Speech-to-Text conversion and vice-versa

- Machine Translation

Let Us Look At The Most Common NLP Terms

- Vocabulary: The group of terms used in a text or speech.

- Corpus, or Corpora (plural): A collection of texts of a similar type, for example, movie reviews or social media posts.

- Documents: They are the body of text and collectively form a corpus.

- Out of Vocabulary: Terms that are not included in the vocabulary that we created during our model’s training are included in this category.

- Preprocessing: It is a method that attempts to remove unwanted text, or noise, from the given text to make it “clean.” It is typically the first step of any NLP task.

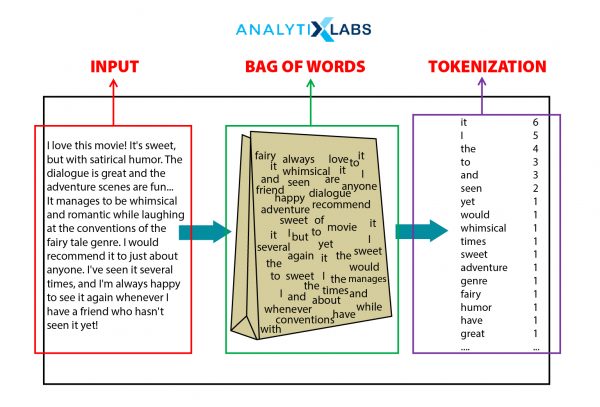

- Tokenization: Tokenization in NLP breaks down large pieces of text into small parts for easier processing. Each small part is referred to as a ‘token’ and carries a piece of meaningful information.

- Embeddings (Word): It is the process of representing each token as a vector before passing it into a machine learning model. Apart from words, embeddings can also be computed for phrases and characters.

- N-grams: An n-gram is a contiguous sequence of n tokens from a given text. For example, the bigrams (n = 2) of “I like NLP” are “I like” and “like NLP”.

- Transformers: They are deep learning architectures that can parallelize computations. Transformers are used to learn long-term dependencies.

- Parts of Speech (POS): They are the word’s functions, like a noun, verb, etc.

- Parts of Speech Tagging: It is the process of tagging words in the sentences into different parts of speech.

- Stop Words: They are very common words that carry little meaning on their own, for example, a, to, can, etc. They are usually removed before further processing of the text.

- Normalization: It is the process of mapping similar terms to a canonical form, i.e., a single entity.

- Lemmatization: Lemmatization in NLP is a type of normalization used to group similar terms to their base form based on the parts of speech. For example, walking and walked can be mapped to a single term, walk.

- Stemming: Stemming in NLP is also a type of normalization and is similar to lemmatization, but the difference here is that it strips word endings with heuristic rules, without using the parts of speech tags. It is faster than lemmatization but generally less accurate, because the resulting stem is not always a valid word.

NLP Interview Questions With Answers

In this section, let us see the extensive set of NLP interview questions. Following the question links, you can also find answers to these NLP interview questions.

- What is the NLG (Natural Language Generation)?

- What is the order of steps in natural language understanding?

- What is signal processing in NLP?

- What is pragmatic analysis in NLP?

- What is syntactic analysis in NLP?

- What is semantic analysis in NLP?

- What is sentiment analysis in NLP?

- What is discourse analysis in NLP?

- What is pragmatic ambiguity in NLP?

- What are the major applications of NLP?

- List any real-world application of NLP?

- What are the common NLP techniques?

- What are the components of NLP?

- What are the tools used for training NLP models?

- Which NLP technique uses a lexical knowledge base to obtain the correct base form of the words?

- List the models to reduce the dimensionality of data in NLP.

- List some open-source libraries for NLP.

- Explain the masked language model.

- What is the bag of words model?

- What is CBOW in NLP?

- What is TF-IDF, and what are its uses?

- What are POS and tagging?

- What is n-gram in NLP?

- What is skip-gram?

- What is the corpus in NLP?

- What are the features of the text corpus in NLP?

- What is normalization in NLP?

- What is keyword normalization?

- What is lemmatization in NLP?

- What is stemming in NLP?

- What is ambiguity in NLP?

- What is tokenization in NLP?

- What are stop words in NLP?

- How to find word similarity in NLP?

- How to find sentence similarity in NLP?

- How to find document similarity in NLP?

- What are transformers?

- What are punctuations in NLP, and how can we remove them?

- What is latent semantic indexing (LSI)?

- What is named entity recognition (NER)?

- What is NLTK in NLP?

- What is spaCy?

- What is OpenNLP?

- What is the difference between NLTK and OpenNLP?

- What is parsing?

- What is dependency parsing?

- What is semantic parsing?

- What is constituency parsing?

- What is shallow parsing?

- What are the differences between dependency parsing and shallow parsing?

- What is language modeling?

- What is topic modeling?

- What is text summarization in NLP?

- What is the difference between a regular expression (RegEx) and regular grammar?

- What is perplexity in NLP?

- What is the Naive Bayes algorithm, and where is it used in NLP?

- What is the PageRank algorithm?

- What is noise removal?

- What is word embedding?

- What are the word embedding libraries?

- What is word2vec?

- What is doc2vec?

- What is a document-term matrix?

- What is wordnet?

- What is GloVe in NLP?

- What is a flexible string matching?

- What is cosine similarity?

- What is information extraction?

- What is object standardization, and when is it used?

- What is text generation, and when is it done?

- How can we estimate the entropy of the English language?

- What is Latent Dirichlet Allocation?

- What are the conditional random fields?

- What are the hidden Markov random fields?

- What is a coreference resolution?

- What is PAC learning?

- What is sequence learning?

- What is an ensemble method?

1. What is the NLG (Natural Language Generation)?

Natural Language Generation (NLG) is a part of AI that generates natural language text from structured data. It can be seen as the reverse of Natural Language Understanding (NLU): NLU is used to understand and interpret natural language to form data, while NLG is used to generate outputs in natural language from structured data.

2. What is the order of steps in natural language understanding?

The order of steps that are to be followed in Natural Language Understanding is as follows:

- Signal Processing

- Syntactic Analysis

- Semantic Analysis

- Pragmatics

3. What is signal processing in NLP?

Signal processing is a method that enables software to analyze, modify, and synthesize signals. In NLP, these can be sound or text signals.

4. What is pragmatic analysis in NLP?

Pragmatic analysis is the process of extracting the intended meaning from a given text by taking its context into account. It uses a set of linguistic and logical tools to work out what a text means beyond its literal structure.

5. What is syntactic analysis in NLP?

The syntactic analysis, also referred to as parsing and syntax analysis, is the phase in which we try to process the given text’s structure. This process tries to draw meaning from the text by comparing it to formal grammar rules or syntax.

6. What is semantic analysis in NLP?

The semantic analysis is the process of understanding the meaning of the text in the way humans perceive and communicate. It focuses on larger parts of data for processing, as compared to other analysis techniques.

7. What is sentiment analysis in NLP?

Sentiment analysis, also known as opinion mining or emotion AI, is the process of detecting the polarity of the opinion expressed in a text or in a part of it. It is mainly used to identify, extract, and quantify the polarity of customer reviews, survey responses, and social media opinions.

8. What is discourse analysis in NLP?

A discourse is a structured group of sentences. Discourse analysis is an approach to analyzing discourse, i.e., text or language beyond the level of a single sentence. It involves interpreting a text and the interactions between its parts.

9. What is pragmatic ambiguity in NLP?

Pragmatic ambiguity is a condition where a phrase or sentence has multiple interpretations because the context does not make the intended meaning specific.

10. What are the major applications of NLP?

The major applications of NLP are:

- Machine Translation

- Speech Recognition

- Sentiment Analysis

- Text Classification

11. List any real-world application of NLP?

One of the most widely used real-world applications of NLP is speech recognition. Examples of speech recognition applications are Amazon Alexa, Google Assistant, Siri, and Microsoft Cortana.

12. What are the common NLP techniques?

The common NLP techniques for text extraction are:

- Named Entity Recognition

- Sentiment Analysis

- Text Summarization

- Aspect Mining

- Text Modelling

13. What are the components of NLP?

The components of NLP are:

- Lexical Analysis

- Syntactic Analysis

- Semantic Analysis

- Discourse Integration

- Pragmatic Analysis

14. What are the tools used for training NLP models?

The tools used to train NLP models include NLTK, spaCy, PyTorch-NLP, and OpenNLP.

15. Which NLP technique uses a lexical knowledge base to obtain the correct base form of the words?

The NLP technique that uses a lexical knowledge base to obtain the correct base form is lemmatization. Stemming, by contrast, strips suffixes with heuristic rules and does not consult a dictionary.

16. List the models to reduce the dimensionality of data in NLP.

The commonly used models are TF-IDF, Word2vec/GloVe, LSI, topic modeling, and ELMo embeddings.

17. List some open-source libraries for NLP.

The popular libraries are NLTK (Natural Language Toolkit), scikit-learn, TextBlob, CoreNLP, spaCy, and Gensim.

18. Explain the masked language model.

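Masked language modeling is an example of autoencoding language modeling, in which the output is predicted from a corrupted input. Some words in a sentence are masked, and the model learns to predict each masked word from the other words present in the sentence. BERT is a well-known model trained this way.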

19. What is the bag of words model?

The bag of words model is used for information retrieval and text classification. Here the text is represented as a multiset, i.e., a bag of its words. Grammar and word order are ignored, but the multiplicity (how many times each word occurs) is kept.
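As a minimal sketch of the idea, the multiset can be built with Python's `collections.Counter`. The function name `bag_of_words` and the whitespace tokenization are assumptions made for illustration, not part of any particular library.

```python
from collections import Counter

def bag_of_words(text):
    # Lowercase and split on whitespace; a real pipeline would use a proper tokenizer.
    return Counter(text.lower().split())

bow = bag_of_words("the cat sat on the mat")
print(bow["the"])  # word order is lost, but the count of "the" is kept
```

Because only counts are stored, "cat sat" and "sat cat" produce the same bag.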

20. What is CBOW in NLP?

CBOW, or continuous bag of words, is a model that tries to predict the target word from the available source context words, i.e., the surrounding words. Several context words are used together to predict a single target word.

21. What is TF-IDF and what are its uses?

TF-IDF is an abbreviation for term frequency-inverse document frequency. It assigns a numeric value to a word to show how important it is to a document within a corpus: the value grows with the word's frequency in the document and shrinks as the word appears in more documents.
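The weighting can be sketched in a few lines. This version assumes the plain formulation tf = count / document length and idf = log(N / document frequency); libraries such as scikit-learn use smoothed variants, so their numbers will differ. The function name `tf_idf` is hypothetical.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized, non-empty document."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

docs = ["the cat sat".split(), "the dog barked".split()]
scores = tf_idf(docs)
print(scores[0])
```

A term such as "the" that occurs in every document gets weight 0, while a term unique to one document gets a positive weight.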

22. What are POS and tagging?

Parts Of Speech (POS) are the functions of the word, like a noun, verb, etc., and tagging is labeling the words present in the sentences into different parts of speech.

23. What is n-gram in NLP?

N-grams are contiguous sequences of n tokens from a given text. For example, the bigrams (n = 2) of “I like NLP” are “I like” and “like NLP”.
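A short sketch of n-gram extraction using a sliding window over a token list. The helper name `ngrams` is chosen here for illustration (NLTK ships a similar utility, but this version uses only the standard library).

```python
def ngrams(tokens, n):
    # Slide a window of size n over the token list.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "I like natural language processing".split()
bigrams = ngrams(tokens, 2)
print(bigrams)
```

If the text has fewer than n tokens, the function returns an empty list.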

24. What is skip-gram?

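Skip-gram is an unsupervised learning technique used to find the most related words to a target word. It is the reverse of the continuous bag of words model: instead of predicting the target word from its context, it predicts the context words from the target word.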
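To make the training setup concrete, the sketch below generates the (target, context) pairs a skip-gram model would be trained on. It covers only the data preparation step, not the neural network itself; the function name `skipgram_pairs` and the `window` parameter are assumptions for illustration.

```python
def skipgram_pairs(tokens, window=2):
    pairs = []
    for i, target in enumerate(tokens):
        # Context = up to `window` tokens on each side of the target.
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

pairs = skipgram_pairs(["the", "quick", "brown", "fox"], window=1)
print(pairs)
```

CBOW would use the same windows but group all context words as the input and the target word as the output.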

25. What is the corpus in NLP?

A corpus (plural: corpora) is a collection of texts of a similar type, for example, movie reviews, social media posts, etc.

26. What are the features of the text corpus in NLP?

The features of text corpus are:

- Word count

- Vector notation

- Part of speech tag

- Boolean feature

- Dependency grammar

27. What is normalization in NLP?

Normalization is the process of mapping similar terms to a canonical form, i.e., a single entity.

28. What is keyword normalization?

Keyword normalization is an NLP technique where we apply normalization on a word to condense it to its most basic form.

29. What is lemmatization in NLP?

Lemmatization is a type of normalization used to group similar terms to their base form based on the parts of speech. For example, walking and walked can be mapped to a single term, walk.

30. What is stemming in NLP?

Stemming in NLP is also a type of normalization and is similar to lemmatization, but the difference here is that it strips word endings with heuristic rules, without using the parts of speech tags. It is faster than lemmatization but generally less accurate, because the resulting stem is not always a valid word.
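The contrast between the two can be illustrated with a deliberately tiny toy example. The suffix list and the lemma dictionary below are made up for this sketch; real stemmers (such as the Porter stemmer) and lemmatizers (such as WordNet-based ones) are far more elaborate.

```python
# Toy rule set and toy dictionary, both hypothetical and far from complete.
SUFFIXES = ("ing", "ed", "s")
LEMMAS = {"walking": "walk", "walked": "walk", "better": "good", "ran": "run"}

def toy_stem(word):
    # Rule-based: chop the first matching suffix, no dictionary involved.
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[:-len(suffix)]
    return word

def toy_lemmatize(word):
    # Dictionary-based: look the word up in a lexical knowledge base.
    return LEMMAS.get(word, word)

for w in ["walking", "studies", "better", "ran"]:
    print(w, toy_stem(w), toy_lemmatize(w))
```

The stemmer handles "walking" but turns "studies" into a non-word and cannot relate "better" to "good", which the dictionary lookup can.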

31. What is ambiguity in NLP?

Ambiguity is a condition in which a word or sentence can have multiple interpretations and can therefore be misunderstood. Natural languages are ambiguous, which makes it difficult to apply NLP techniques to them and can result in the wrong output.

32. What is tokenization in NLP?

Tokenization is the process of breaking down large pieces of text into small parts for easier processing. Each small part is referred to as a ‘token’ and carries a piece of meaningful information.
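A minimal word-level tokenizer can be sketched with a regular expression. The pattern below is one simple choice among many (it treats each punctuation mark as its own token); production tokenizers in NLTK or spaCy handle many more edge cases.

```python
import re

def tokenize(text):
    # \w+ matches runs of letters, digits, and underscores;
    # [^\w\s] matches a single punctuation character.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Hello, world! NLP is fun.")
print(tokens)
```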

33. What are stop words in NLP?

Stop words are very common words in the input that carry little meaning on their own, for example, a, to, can, etc. Stop word removal is the process of filtering these words out before further processing of the text.
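Stop word removal is usually a simple filter against a fixed list. The tiny `STOP_WORDS` set here is an assumption for illustration; libraries such as NLTK and spaCy provide much longer, language-specific lists.

```python
# A small illustrative stop word list, not a standard one.
STOP_WORDS = {"a", "an", "the", "to", "can", "is", "of"}

def remove_stop_words(tokens):
    # Compare in lowercase so "The" and "the" are treated alike.
    return [t for t in tokens if t.lower() not in STOP_WORDS]

filtered = remove_stop_words("The cat can jump to a ledge".split())
print(filtered)
```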

34. How to find word similarity in NLP?

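Word similarity in NLP is found by representing the words as vectors in a vector space and then computing a similarity score between the vectors, typically the cosine similarity. The score ranges from -1 to 1 in general, and from 0 to 1 when all vector components are non-negative.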

35. How to find sentence similarity in NLP?

Sentence similarity is found in NLP by computing the cosine similarity between the two sentences, i.e., the cosine of the angle between the vectors of the two sentences in the inner product space.

36. How to find document similarity in NLP?

Document similarity is done in NLP by converting the documents into the TF-IDF vectors form and finding their cosine similarity.

37. What are transformers?

Transformers are deep learning architectures that can parallelize computations. They are used to learn long-term dependencies.

38. What are punctuations in NLP, and how can we remove them?

Punctuations are the punctuation marks (such as commas, periods, and question marks) in the corpus or the input text. We can remove them with a tokenizer from NLTK; for example, nltk.RegexpTokenizer() with a pattern that matches only word characters drops all punctuation.
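Where NLTK is not available, the same effect can be approximated with the standard library alone. This sketch uses `str.translate` with `string.punctuation`, which covers ASCII punctuation only; the function name `strip_punctuation` is hypothetical.

```python
import string

def strip_punctuation(text):
    # Build a translation table that deletes every ASCII punctuation character.
    table = str.maketrans("", "", string.punctuation)
    return text.translate(table)

clean = strip_punctuation("Hello, world! It's NLP.")
print(clean)
```

Note that this also removes apostrophes, so "It's" becomes "Its"; whether that is acceptable depends on the task.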

39. What is latent semantic indexing (LSI)?

Latent Semantic Indexing, also referred to as latent semantic analysis, is an NLP technique that applies singular value decomposition to a term-document matrix to reduce its dimensionality. It is used to uncover hidden (latent) relationships between different words and between words and documents.

40. What is named entity recognition (NER)?

Named Entity Recognition is a part of information extraction. It is a method to locate the entities present in unstructured text and classify them into predefined categories, such as person, organization, and location.

41. What is NLTK in NLP?

NLTK, an abbreviation of Natural Language Toolkit, is one of NLP’s most popular libraries. It is written in Python and contains modules for tokenization, classification, tagging, stemming, parsing, and semantic reasoning.

42. What is spaCy?

spaCy is an open-source library for advanced natural language processing. It is mostly used for production-level work, and its pretrained pipelines are built on neural network models such as convolutional neural networks.

43. What is OpenNLP?

OpenNLP is a Java-based library used for Natural Language Processing, and it supports most of the common NLP tasks such as tokenization, language detection, etc.

44. What is the difference between NLTK and OpenNLP?

The main difference between NLTK and OpenNLP is that NLTK is written in Python, while OpenNLP is based on Java. One other difference is that NLTK has a built-in method for downloading corpora.

45. What is parsing?

Parsing is the method of analyzing the sentence automatically based on the syntactic structure.

46. What is dependency parsing?

Dependency parsing is a form of syntactic parsing that assigns a syntactic structure to a sentence in the form of a dependency parse. It focuses on the relationships between the different words, i.e., which word depends on which.

47. What is semantic parsing?

Semantic parsing is a method of conversion of natural language into machine-understandable form.

48. What is constituency parsing?

Constituency parsing is a method of dividing sentences into sub-parts, or constituents. It aims to extract a constituency-based parse tree from the constituents of the sentence.

49. What is shallow parsing?

Shallow parsing, also known as light parsing and chunking, identifies constituents of sentences and then links them to different groups of grammatical meanings.

50. What are the differences between dependency parsing and shallow parsing?

The difference between shallow parsing and dependency parsing is that shallow parsing is the parsing of limited parts of the information. In contrast, dependency parsing is the process of finding relations between all the different words.

51. What is language modeling?

Language modeling is the process of building a probability distribution over sequences of words. It is used to estimate the probability of a whole sequence, or of the next word given the words that precede it.
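One of the simplest language models is a bigram model, which estimates the probability of each word given only the previous word. The sketch below uses raw counts without smoothing; the function name `train_bigram_model` and the `<s>` / `</s>` boundary markers are conventions assumed for this example.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Add start and end markers so sentence boundaries are modeled too.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, curr in zip(tokens, tokens[1:]):
            counts[prev][curr] += 1
    # Normalize counts into conditional probabilities P(curr | prev).
    return {
        prev: {w: c / sum(nxt.values()) for w, c in nxt.items()}
        for prev, nxt in counts.items()
    }

model = train_bigram_model(["the cat sat", "the dog sat", "the cat ran"])
print(model["the"])
```

Unseen bigrams get no entry at all here; practical models add smoothing so that they receive a small non-zero probability.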

52. What is topic modeling?

Topic modeling is a method of finding abstract topics in a document or set of documents to find hidden semantic structures.

53. What is text summarization in NLP?

Text summarization in NLP is the process of conversion of large pieces of text to short text. It is intended to summarize the given text, keeping the main contents and overall meaning intact.

54. What is the difference between a regular expression and regular grammar?

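The difference between a regular expression and a regular grammar is that a regular grammar is a set of production rules used to generate a regular language, while a regular expression is a pattern used to represent a regular language. Both describe the same class of languages.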

55. What is perplexity in NLP?

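Perplexity is a measure of how well a language model predicts a sample of text. It is the exponential of the average negative log-probability the model assigns to each token, so a lower perplexity means the model is less “surprised” by the text.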
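Under the standard definition (the exponential of the average negative log-probability per token), perplexity can be computed as follows. The function name `perplexity` and its input, a list of per-token probabilities from some language model, are assumptions for this sketch.

```python
import math

def perplexity(token_probs):
    # Average negative log-probability per token, then exponentiate.
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# A model that assigns probability 0.25 to every token is as uncertain
# as a uniform choice among 4 options.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```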

56. What is the Naive Bayes algorithm, and where is it used in NLP?

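The Naive Bayes algorithm is a probabilistic classifier based on Bayes' theorem, with the "naive" assumption that features are independent given the class. In NLP it is used for text classification: it predicts the tag of a text by calculating the probability of each tag for that text and returning the one with the highest probability.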
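A compact sketch of a multinomial Naive Bayes text classifier is given here, assuming whitespace tokenization and add-one (Laplace) smoothing. The class name `NaiveBayes` and the toy training data are made up for illustration; scikit-learn's `MultinomialNB` is the usual choice in practice.

```python
import math
from collections import Counter, defaultdict

class NaiveBayes:
    def fit(self, docs, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc.lower().split())
        self.vocab = {w for c in self.word_counts.values() for w in c}
        return self

    def predict(self, doc):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            # Start from the log prior, then add log likelihoods per word.
            score = math.log(n / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in doc.lower().split():
                # Add-one smoothing keeps unseen words from zeroing the score.
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

clf = NaiveBayes().fit(
    ["great movie loved it", "wonderful acting great plot",
     "terrible movie hated it", "awful plot boring acting"],
    ["pos", "pos", "neg", "neg"],
)
print(clf.predict("great wonderful movie"))
```

Working in log space avoids numeric underflow when many small probabilities are multiplied.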

57. What is the PageRank algorithm?

PageRank is an algorithm, used by Google, to rank web pages in the search engine results. It scores each page by the number and quality of the links pointing to it.
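The iterative computation can be sketched on a tiny link graph. This version assumes the common formulation with a damping factor of 0.85, a fixed number of iterations, and that every linked page also appears as a key in `links`; the graph itself is invented for the example.

```python
def pagerank(links, damping=0.85, iterations=50):
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page receives a small baseline share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outlinks spreads its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
print(max(ranks, key=ranks.get))
```

In NLP the same idea is applied to graphs of sentences or words rather than web pages, for example in graph-based summarization.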

58. What is noise removal?

Noise removal is an NLP technique used to remove pieces of text from the corpus that are not necessary and could hinder the text analysis.

59. What is word embedding?

Word embedding is the process of mapping words from the vocabulary to vectors of real numbers.

60. What are the word embedding libraries?

The libraries that provide word embedding features include spaCy and Gensim.

61. What is word2vec?

Word2vec is a collection of models that are used to generate word embeddings. These models are trained to reconstruct the linguistic context of the words in the corpus.

62. What is doc2vec?

Doc2vec is one of the unsupervised algorithms used to generate vectors of sentences or documents irrespective of their length.

63. What is a document-term matrix?

The document-term matrix is a matrix that describes the frequency of terms occurring in a collection of documents: each row corresponds to a document and each column to a term. Its transpose is called the term-document matrix.
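A small sketch of how such a matrix can be built from raw counts, with one row per document and one column per vocabulary term. The function name `document_term_matrix` and the whitespace tokenization are assumptions for illustration.

```python
from collections import Counter

def document_term_matrix(docs):
    tokenized = [doc.lower().split() for doc in docs]
    # Sorted vocabulary fixes the column order.
    vocab = sorted({t for doc in tokenized for t in doc})
    matrix = []
    for doc in tokenized:
        counts = Counter(doc)
        matrix.append([counts[term] for term in vocab])
    return vocab, matrix

vocab, matrix = document_term_matrix(["the cat sat", "the cat saw the dog"])
print(vocab)
for row in matrix:
    print(row)
```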

64. What is wordnet?

WordNet is a lexical database of English in which words are grouped into sets of synonyms and connected by their semantic relationships. Similar wordnets have been built for other languages.

65. What is GloVe in NLP?

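GloVe (Global Vectors for Word Representation) is an unsupervised learning algorithm for obtaining word embeddings. It is trained on global word-word co-occurrence statistics from a corpus, so that words used in similar contexts end up with similar vectors.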

66. What is a flexible string matching?

Flexible string matching or fuzzy string matching is a method to find strings that are likely to match a specific pattern. It is also called approximate string matching as it uses an approximation to find patterns between strings.
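One common way to implement approximate matching is the Levenshtein edit distance, i.e., the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The sketch below is one such approach, not the only one; the function names are hypothetical.

```python
def edit_distance(a, b):
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def closest_match(query, candidates):
    # Pick the candidate with the smallest edit distance to the query.
    return min(candidates, key=lambda c: edit_distance(query, c))

print(closest_match("appel", ["apple", "maple", "peach"]))
```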

67. What is cosine similarity?

Cosine similarity is the cosine of the angle between two non-zero vectors in the inner product space. It is used to find the similarity between documents irrespective of their size.
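A minimal sketch of the computation on word-count vectors, stored here as `Counter` objects so that missing words count as zero. The function name `cosine_similarity` and the sample documents are assumptions for illustration, and both inputs must be non-empty.

```python
import math
from collections import Counter

def cosine_similarity(a, b):
    # Dot product over the words the two vectors share.
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

doc1 = Counter("the cat sat on the mat".split())
doc2 = Counter("the cat sat on the mat the cat sat on the mat".split())
doc3 = Counter("dogs bark loudly".split())

print(cosine_similarity(doc1, doc2))
print(cosine_similarity(doc1, doc3))
```

Here `doc2` is simply `doc1` repeated twice: the vectors point in the same direction, so the similarity is unaffected by the difference in length.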

68. What is information extraction?

Information extraction is the process of extracting useful data in a structured way from a given unstructured set of data.

69. What is object standardization, and when is it used?

Object standardization is the process of extracting useful information from abbreviations, acronyms, and other expressions that are not found in standard lexical dictionaries, by mapping them to their standard forms.

70. What is text generation, and when is it done?

Text-generation is the process of generating natural language texts automatically in response to the communication. It uses artificial intelligence and computational linguistic knowledge to perform this task.

71. How can we estimate the entropy of the English language?

The entropy of the English language can be estimated with n-gram models. The entropy of a letter is estimated from its probability given the preceding n-1 letters, and larger values of n give tighter estimates.
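The simplest case, n = 1, ignores context and estimates entropy from single-letter frequencies alone. The sketch below computes that unigram estimate in bits per letter; the function name `unigram_entropy` is hypothetical, and the input must contain at least one letter.

```python
import math
from collections import Counter

def unigram_entropy(text):
    # Keep letters only and ignore case.
    letters = [c for c in text.lower() if c.isalpha()]
    counts = Counter(letters)
    total = len(letters)
    # Shannon entropy: -sum(p * log2(p)) over the observed letters.
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

print(unigram_entropy("abab"))
print(unigram_entropy("The quick brown fox jumps over the lazy dog"))
```

Higher-order estimates condition each letter on the letters before it, which lowers the figure because English text is highly predictable from context.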

72. What is Latent Dirichlet Allocation?

Latent Dirichlet Allocation is a topic modeling method in which each topic is represented as a probability distribution over words, and every document is modeled as a mixture of topics.

73. What are the conditional random fields?

Conditional Random Fields (CRFs) are a class of statistical modeling methods used for pattern recognition and structured prediction. In NLP they are commonly applied to sequence labeling tasks such as POS tagging and named entity recognition.

74. What are the hidden Markov random fields?

Hidden Markov random fields are a generalization of the Hidden Markov Model. Instead of an underlying Markov chain, the hidden states form a Markov random field, and they can only be inferred through a sequence of observations.

75. What is a coreference resolution?

Coreference resolution is the process of collecting all the expressions that are referring to the same entity in a text. It is used in information extraction, document summarization, and question answering.

76. What is PAC learning?

Probably Approximately Correct learning is a mathematical analysis framework. It is used for the analysis of generalization error of the learning algorithms.

77. What is sequence learning?

Sequence learning is a method of learning where both input and output are sequences.

78. What is an ensemble method?

The ensemble method uses multiple learning algorithms to get enhanced and more accurate performance compared to the performance of an algorithm alone.

These were the most frequently asked NLP interview questions. Prepare them well to increase your chances of getting selected.

Tips To Crack An NLP Interview

- Do basic research on the organization that you are applying to. An employer will surely assess your interest in the role based on your knowledge of the firm.

- Keep the documents organized as this shows your sincerity towards the work.

- Be punctual as it is highly required in the corporate world.

- Don’t fiddle with anything in front of the interviewer. It shows a lack of confidence and is not a positive attitude.

- Be honest if you don’t know anything. Try to answer that question most logically based on your knowledge and understanding.

- Keep a good posture and use hand gestures to explain stuff.

- Listen to the interviewer and answer only when asked to do so.

- Maintain a smile to keep the interview engaging.

- Read the list of Interview Questions on NLP and prepare for the interview properly. Preparing for these common NLP interview questions will boost your knowledge about the subject and your confidence.
