Organizations and individuals today churn out petabytes of data daily, which serves various essential functions for businesses to thrive, governments to function, and individuals to have a better life.

Given the vast amount of data and the complexity of data science, numerous processes are involved in using data. To ensure that none of the processes go wrong, data scientists and engineers have started to focus on the data pipeline.

In this article, you will learn about data pipelines: what they are and their numerous associated aspects, such as types, examples, benefits, challenges, and much more. Let’s start this journey by understanding what a data pipeline means.

What is a Data Pipeline?

A data pipeline (sometimes called a data engineering pipeline) is a digital pipe through which data flows. It is a method in which raw data is ingested from numerous sources and transported to a destination, typically a data store (e.g., a data warehouse or data lake).

Several data processing steps occur between the data source and its destination. Each step returns output data, which becomes the input data for the next. These steps can run sequentially or, if necessary, in parallel.
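The chaining of steps described above can be sketched in a few lines of Python. This is only an illustration: the function names (`run_pipeline`, `drop_missing_amount`, `add_amount_cents`) and the sample records are hypothetical and not part of any particular pipeline tool.

```python
from functools import reduce
from typing import Callable

Record = dict
Step = Callable[[list[Record]], list[Record]]


def run_pipeline(records: list[Record], steps: list[Step]) -> list[Record]:
    """Run the steps in order; each step's output is the next step's input."""
    return reduce(lambda data, step: step(data), steps, records)


def drop_missing_amount(records: list[Record]) -> list[Record]:
    # Hypothetical step: discard records that have no amount.
    return [r for r in records if r.get("amount") is not None]


def add_amount_cents(records: list[Record]) -> list[Record]:
    # Hypothetical step: derive an integer amount in cents.
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in records]


raw = [{"id": 1, "amount": 9.99}, {"id": 2, "amount": None}]
result = run_pipeline(raw, [drop_missing_amount, add_amount_cents])
print(result)
```

Because every step has the same shape (a list of records in, a list of records out), steps can be reordered, reused, or swapped without touching the runner.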

Data pipelines are essential for data-driven enterprises because they automate and scale the repetitive tasks involved in data flow. They also help manage the problems that often arise when moving data from point A to B, such as data corruption, redundant information, and bottlenecks that cause latency.

Crafting efficient pipelines is crucial for data science professionals, supporting tasks like data collection, cleaning, transformation, and integration. These pipelines are pivotal in feeding data to various users, including data scientists, business analysts, executives, and operational teams, for purposes such as machine learning models, BI dashboards, operational monitoring, and alerting systems.

To expand your understanding of data pipelines, it also helps to understand how this technology field has evolved.

Evolution of Data Pipelines

Data pipelines allow data to move from a source to a destination and be transformed along the way. This journey is frequently intricate and rarely straightforward because data needs to be extracted from multiple sources, transformed, reorganized, and combined at various stages before reaching its destination.

Over the years, the data has grown complex (in terms of volume, variety, and velocity), and so have the related technologies that deal with it, causing the data pipelines to evolve rapidly.

- Pre-2011

Earlier, data was hosted on non-scalable on-premises databases that provided limited computational capability. This caused data engineers to spend much time optimizing queries and modeling data. Data pipelines were created by hardcoding, and their scope was limited to cleaning, normalizing, and transforming data before loading it into a data store through an ETL pattern.

- 2011-2017

The period from 2011 to 2017 was the Hadoop era, when organizations began to utilize Hadoop’s parallel and distributed processing. This shift was driven by rapidly increasing data sizes, which made data difficult to move and process using legacy methods.

- Modern Era

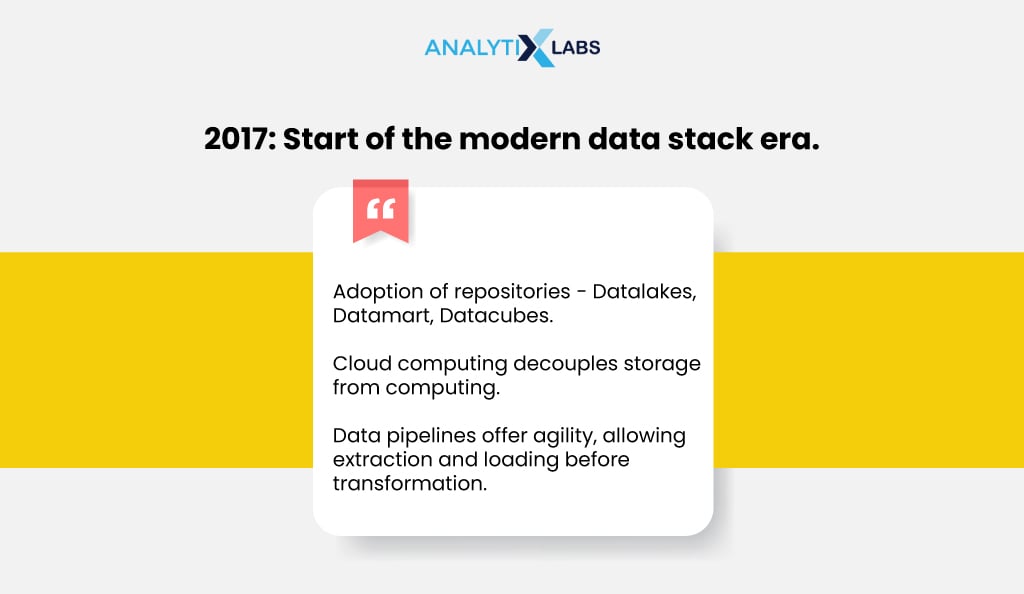

In 2017, the modern data stack era commenced with the widespread adoption of data repositories like data lakes, data marts, and data cubes, coupled with the rise of cloud computing. This enabled organizations to separate storage from computing, liberating them from physical computing constraints and associated costs.

Consequently, today’s data pipeline architecture facilitates agility and speed, allowing data extraction and loading before transformation. The flexible ELT design pattern and cloud services’ scalability have expanded the data pipeline’s utility for widespread applications, including machine learning, analytics, and experimentation.

To completely comprehend the meaning of a data pipeline, you must understand how it actually works.

How does a Data Pipeline work?

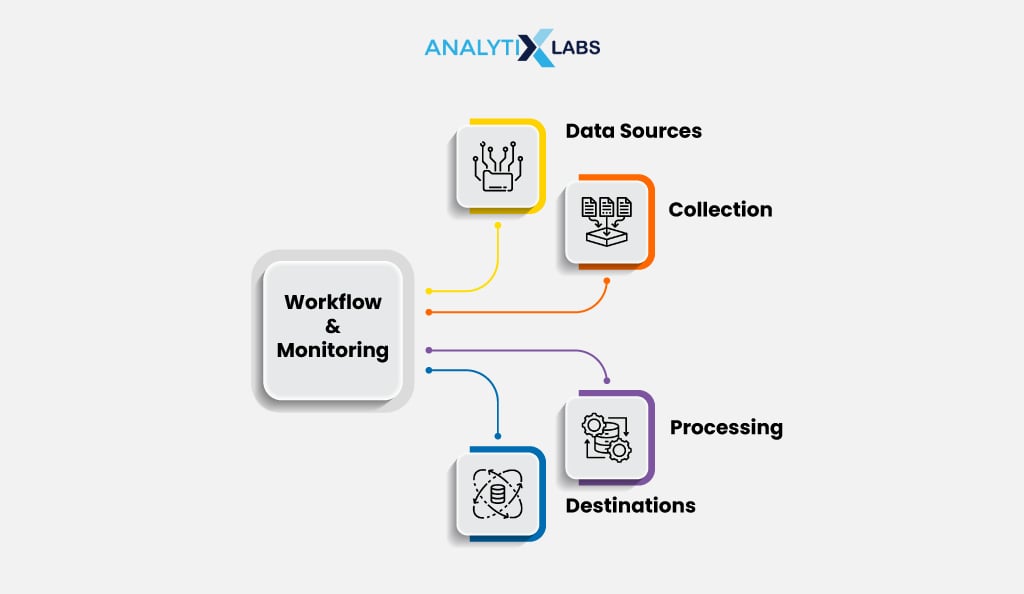

There are numerous ways to do data pipelining. However, every data pipeline follows a few generic steps. Let’s understand them.

1) Acquisition

The first step in a data pipeline is acquisition, where data from numerous sources (mainly databases) is brought into the pipeline. Suppose you are creating a data pipeline for an e-commerce website and moving data involving smartphone sales.

In such a scenario, you might include demographic data, transaction data, and product details using features like customer_id, product_id, transaction_id, time_stamp, etc.
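As a rough sketch of the acquisition step for this e-commerce scenario, the snippet below pulls product details from a CSV export and transactions from a database, then combines them. The table layout, column values, and helper names (`acquire_from_csv`, `acquire_from_db`) are assumptions made for illustration; an in-memory SQLite database stands in for a real source system.

```python
import csv
import io
import sqlite3


def acquire_from_csv(text: str) -> list[dict]:
    """Read records from CSV text (stand-in for a file or object-store export)."""
    return list(csv.DictReader(io.StringIO(text)))


def acquire_from_db(conn: sqlite3.Connection) -> list[dict]:
    """Read transaction records from a relational source."""
    conn.row_factory = sqlite3.Row
    rows = conn.execute(
        "SELECT transaction_id, customer_id, product_id, time_stamp FROM transactions"
    )
    return [dict(row) for row in rows]


# Hypothetical sources: a product catalogue export and a transactions table.
products_csv = "product_id,name\nP1,Phone A\nP2,Phone B\n"
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions "
    "(transaction_id TEXT, customer_id TEXT, product_id TEXT, time_stamp TEXT)"
)
conn.execute(
    "INSERT INTO transactions VALUES ('T1', 'C1', 'P2', '2024-01-05T10:00:00')"
)

products = {p["product_id"]: p for p in acquire_from_csv(products_csv)}
transactions = acquire_from_db(conn)

# Bring both sources into one record stream keyed on product_id.
acquired = [
    {**t, "product_name": products[t["product_id"]]["name"]} for t in transactions
]
print(acquired)
```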

2) Governance

After acquiring data, organizations must prioritize data governance. Data governance ensures that data quality and security are upheld for widespread use.

For instance, an organization handling healthcare data must securely manage patients’ private information in compliance with government-defined retention and usage policies (e.g., GDPR, HIPAA, etc.).

3) Transformation

When the data is ready, the next step involves transforming it. While this step is usually associated with changing the data structure (typically from unstructured to structured), several other processes are performed at this stage. A few of these include:

- Standardization

Data standardization includes scaling data (if required) and reformatting data such that different datasets follow a common structure.

- Deduplication

Redundant information is removed so that the data is not unnecessarily large or complex.

- Verification

Various automated checks are performed on data to ensure that the data is usable, correct, and has no anomalies. Any identified issues are flagged so that further investigation can take place.

- Cleaning

Data cleaning is a loose term that covers several kinds of actions taken to improve the quality of the data and make it ready for downstream applications. Common data cleaning steps include missing value treatment, outlier capping, etc.

- Enrichment

More information can be extracted from the same data by enriching it. Data enrichment involves adding information to the data by manipulating it or by bringing in information from another place.

For example, key performance indicator (KPI) features can be derived from the data’s preexisting features to examine product performance. Another example is adding geographic information from a different data source to customer data, enabling location-based marketing campaigns.

- Aggregation

Data aggregation is among the most crucial aspects of the data pipeline. In this process, different standardized, verified, cleaned, and enriched data are collated to provide the user with consolidated information. Typically, this aggregated data is what the data pipeline consumers use.

Other transformation steps include sorting, filtering, sampling, etc.
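To make the transformation steps above more concrete, here is a minimal sketch in plain Python. The sample rows, field names, and the specific rules (upper-casing product IDs, dropping rows with a missing price, deriving revenue) are hypothetical choices for illustration; real pipelines would apply whatever rules the business requires.

```python
from collections import defaultdict

raw_sales = [
    {"transaction_id": "T1", "product_id": "p1", "price": "699.00", "qty": 1},
    {"transaction_id": "T1", "product_id": "p1", "price": "699.00", "qty": 1},  # duplicate
    {"transaction_id": "T2", "product_id": "P1", "price": "699.00", "qty": 2},
    {"transaction_id": "T3", "product_id": "P2", "price": None, "qty": 1},  # missing price
]


def standardize(rows):
    """Give all rows a common format: upper-case IDs, numeric prices."""
    return [
        {
            **r,
            "product_id": r["product_id"].upper(),
            "price": float(r["price"]) if r["price"] is not None else None,
        }
        for r in rows
    ]


def deduplicate(rows):
    """Keep only the first row seen for each transaction_id."""
    seen, unique = set(), []
    for r in rows:
        if r["transaction_id"] not in seen:
            seen.add(r["transaction_id"])
            unique.append(r)
    return unique


def clean(rows):
    """One simple missing-value treatment: drop rows without a price."""
    return [r for r in rows if r["price"] is not None]


def enrich(rows):
    """Derive a new feature (revenue) from existing ones."""
    return [{**r, "revenue": r["price"] * r["qty"]} for r in rows]


def aggregate(rows):
    """Collate rows into consolidated revenue per product."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["product_id"]] += r["revenue"]
    return dict(totals)


revenue_by_product = aggregate(enrich(clean(deduplicate(standardize(raw_sales)))))
print(revenue_by_product)
```

Dropping incomplete rows is only one possible cleaning rule; imputing a value would be an equally valid choice depending on the use case.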

4) Dependency

Dependencies are often overlooked in data pipelines, where not everything proceeds seamlessly. Changes occur sequentially, and data flow can encounter roadblocks, causing delays. Eliminating blockers may not be immediate due to technical or business dependencies.

For instance, a pipeline might wait for a central queue to fill up or halt for cross-verification by another business unit. Acknowledging and addressing these dependencies is crucial in designing effective data pipelines.
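One common way to make such dependencies explicit is to describe the pipeline as a graph of tasks and derive a valid run order from it. The sketch below uses Python's standard `graphlib` module; the task names, including the `business_signoff` step that stands in for cross-verification by another business unit, are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
dependencies = {
    "acquire": set(),
    "govern": {"acquire"},
    "transform": {"govern"},
    "business_signoff": {"transform"},  # hypothetical manual cross-verification
    "validate": {"transform", "business_signoff"},
    "load": {"validate"},
}

# static_order() yields the tasks so that every dependency comes first.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

If the graph contained a circular dependency, `TopologicalSorter` would raise a `CycleError`, which is a useful early warning when designing a pipeline.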

5) Validation

The final data is now checked to confirm that it meets the requirements of the application or its intended users.

6) Loading

The destination of a data pipeline, also referred to as the data sink, serves as the endpoint where the final validated data is stored. This includes loading data onto platforms like data warehouses, data lakes, and data lakehouses (which combine elements of data warehouses and data lakes).

Storing data in these destinations allows for later retrieval and distribution to authorized parties. The data pipeline might also serve a business intelligence or data analysis application, making it crucial for their smooth operations.

Types of Data Pipeline Architecture

There are multiple data pipeline solutions out there that you can choose from when designing your own. Some of the most common types of data pipelines are the following.

-

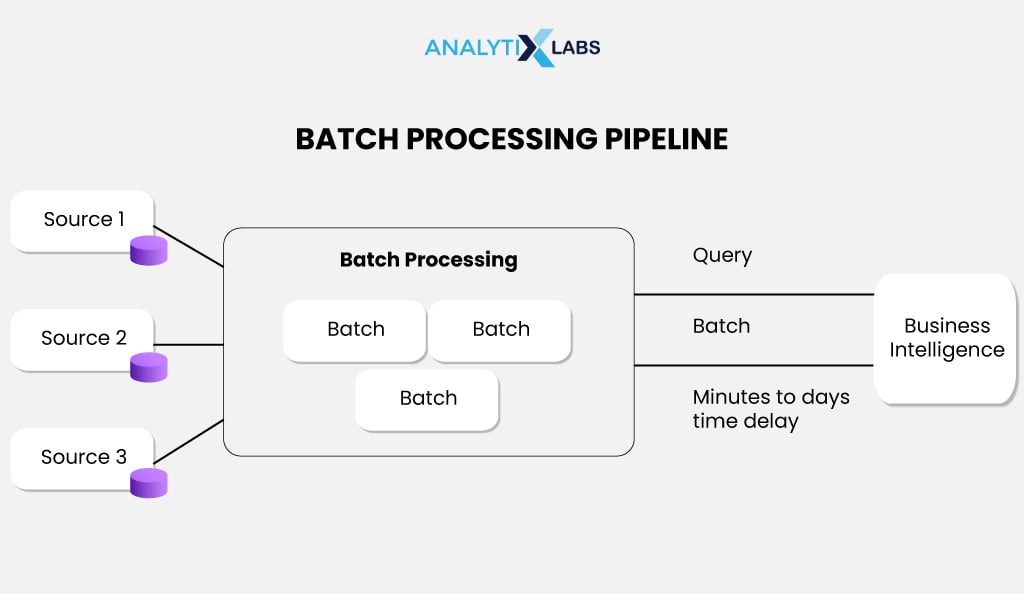

Batch

Organizations use a batch-processing type of data pipeline when a large volume of data needs to be moved at regularly scheduled intervals. In batch data pipelines, the pipeline can sometimes take hours to even days to execute, especially when dealing with large data sizes.

These pipelines are frequently scheduled to run during off-peak hours, such as nights or weekends, when user activity is low. They are utilized when immediate data delivery to the business application or end user is not a priority.

For example, such an architecture can be used to integrate marketing data into a data lake for data analytics work. Common tools for creating such data pipelines are Informatica PowerCenter and IBM InfoSphere DataStage.

-

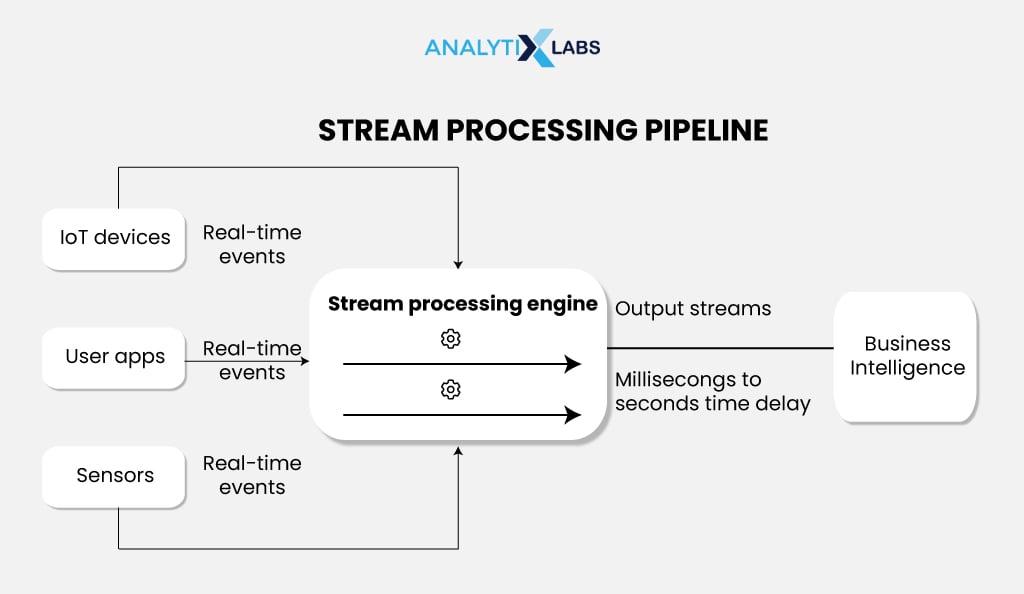

Real-time

Real-time data pipelines, also known as streaming data pipelines, are used when data is collected from streaming sources. Typical examples of streaming data include the IoT (Internet of Things), financial markets, social media feeds, etc. In such a data pipeline architecture, it is possible to quickly capture and process the data before sending it to the downstream applications or users.

Unlike batch processing, real-time data pipelines update metrics, reports, and summary statistics with every event, making them ideal for organizations requiring immediate insights, such as those in telematics or fleet management.

However, this architecture is costlier and more technically complex than batch processing, demanding advanced infrastructure. Popular tools for creating real-time data pipelines include Confluent, Hevo Data, and StreamSets.
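The idea of updating metrics with every event, rather than recomputing them per batch, can be illustrated with a small sketch. The `RunningStats` class and the vehicle speed readings are hypothetical, and a simple generator stands in for a real streaming source.

```python
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Keeps a summary statistic up to date as events arrive."""

    count: int = 0
    total: float = 0.0

    def update(self, value: float) -> None:
        self.count += 1
        self.total += value

    @property
    def mean(self) -> float:
        return self.total / self.count if self.count else 0.0


def speed_events():
    # Stand-in for a stream of vehicle speed readings (km/h).
    yield from [62.0, 58.0, 75.0]


stats = RunningStats()
means = []
for speed in speed_events():
    stats.update(speed)        # the metric is refreshed on every event
    means.append(stats.mean)   # downstream consumers always see a current value

print(means)
```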

- Lambda

Another type of data pipeline is the lambda pipeline, which combines the batch and real-time methods mentioned above. While such an architecture is great for big data environments involving analytical applications, it isn’t easy to design, implement, and maintain.
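A highly simplified way to picture the combination of batch and real-time results is shown below. It assumes a precomputed batch view and a small set of events that arrived after the last batch run; the names `batch_view`, `speed_view`, and `serve` are illustrative and do not come from any specific framework.

```python
# Units sold per product, precomputed by the last (hypothetical) batch run.
batch_view = {"P1": 120, "P2": 45}

# Events that arrived after that batch run: (product_id, quantity).
recent_events = [("P1", 2), ("P3", 1)]


def speed_view(events):
    """Aggregate only the recent events that the batch run has not seen yet."""
    counts = {}
    for product_id, qty in events:
        counts[product_id] = counts.get(product_id, 0) + qty
    return counts


def serve(batch, speed):
    """Answer queries by merging the batch view with the real-time view."""
    merged = dict(batch)
    for product_id, qty in speed.items():
        merged[product_id] = merged.get(product_id, 0) + qty
    return merged


current_totals = serve(batch_view, speed_view(recent_events))
print(current_totals)
```

Part of the maintenance burden mentioned above comes from keeping the batch and real-time logic consistent, since both must compute the same metric.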

- Cloud-native

Many organizations today are moving to cloud-based functions to create data pipelines. This has provided the industry with another type of data pipeline known as cloud-native, which deals with cloud data. In such a type, cloud data, for example, data from an AWS bucket or Azure container, is fed into the pipeline.

The advantage of the cloud-native pipeline is that it is less expensive for businesses as it saves the cost of maintaining the expensive infrastructure needed for hosting the pipeline.

- Open Source

Creating a data pipeline is an expensive task, as most of the tools that allow you to create these pipelines are provided by commercial vendors. However, open-source data pipeline solutions provide alternatives to such tools.

While less expensive than their commercial peers, the open-source solutions require expertise to develop, utilize, and expand their functionalities. Apache Kafka, Apache Airflow, and Talend are common open-source tools for creating data pipelines.

- Kappa

This type of data pipeline’s architecture is similar to lambda. However, it has no separate batch layer: data is ingested in real time and processed only once, as a stream.

- Microservices

In such a data pipeline, independently deployable and loosely coupled services are used to process data.

-

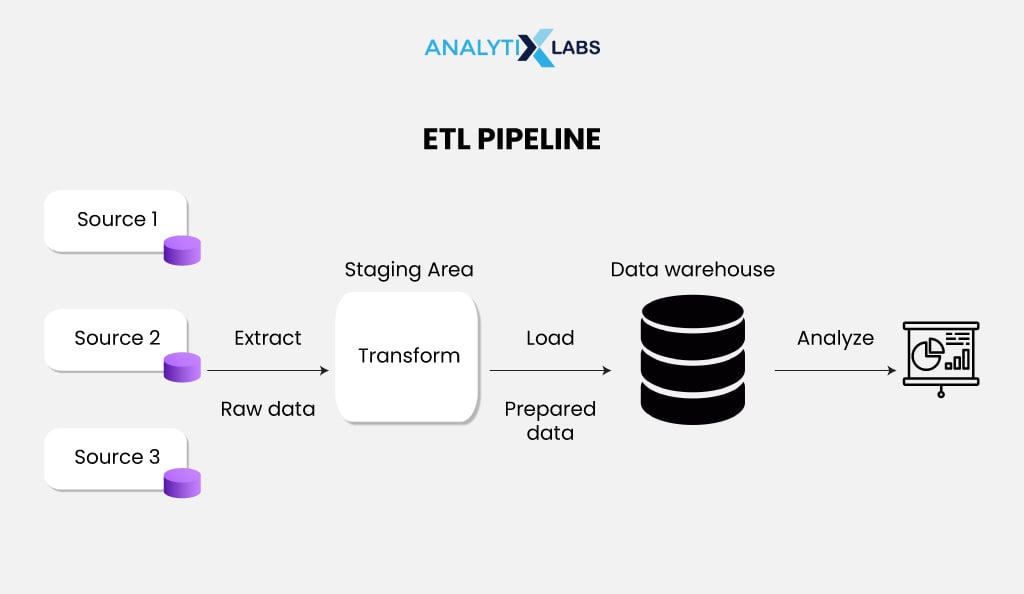

ETL

ETL data pipeline is the most commonly used data pipeline architecture and has been considered a standard for decades. ETL involves extracting raw data from diverse sources, converting it into a predefined format, and loading it into a target system, typically a data mart or enterprise data warehouse.

It’s widely used for tasks like migrating data into a data warehouse from legacy systems, consolidating data from multiple touchpoints into a single place (e.g., CRM), or joining disparate datasets for deeper analytics.

Remember that ETL pipelines require rebuilding whenever business rules, data formats, or similar factors change.

However, another type of data pipeline architecture is available to address this issue: the ELT data pipeline.

-

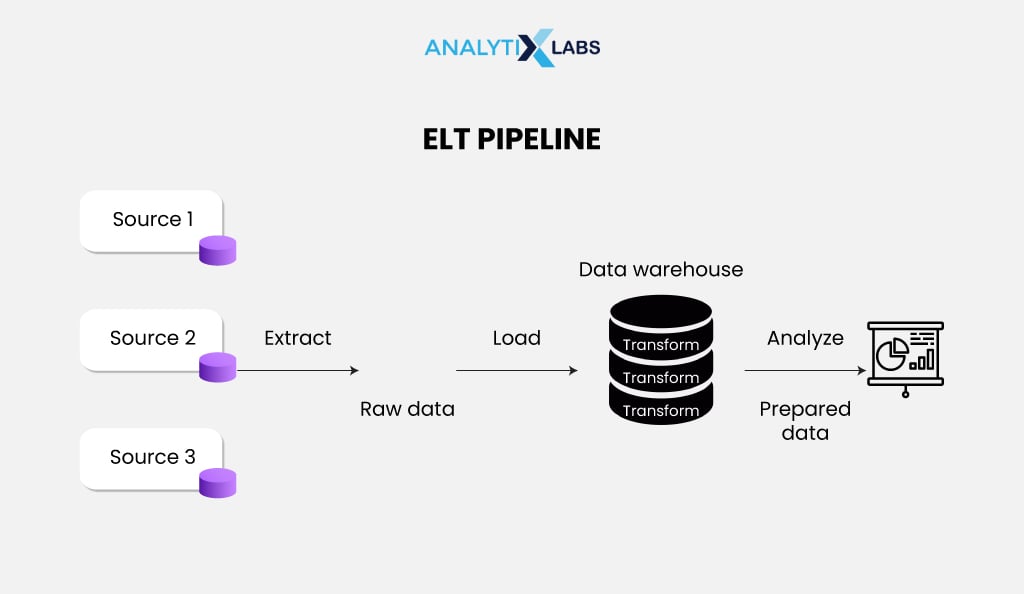

ELT

Another common way of creating a data processing pipeline is using ELT. While ETL stands for extraction, transformation, and loading, ELT, as the term suggests, refers to the sequence of steps where loading happens before transformation.

Switching the sequence from ETL to ELT, where loading precedes transformation, may seem trivial but has significant implications. By first loading data into a data lake or warehouse, users gain flexibility in structuring and processing data as needed, whether fully or partially, and at any frequency required.

ELT is particularly useful when dealing with uncertainty about data handling or large datasets. Despite its advantages over ETL, ELT faces challenges due to its relative immaturity and scarcity of tools and expertise needed for development.
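The ELT ordering can be sketched with Python's built-in `sqlite3` module, where an in-memory database stands in for a data warehouse or lake. The table name, view name, and sample rows are hypothetical. The raw data is loaded untouched first, and the transformation is defined afterwards inside the store.

```python
import sqlite3

# Extract: raw rows exactly as they arrived, including a malformed price.
raw_rows = [("T1", "p1", "699.00"), ("T2", "P1", "699.00"), ("T3", "P2", "bad")]

conn = sqlite3.connect(":memory:")

# Load: store the raw data as-is, with no cleaning applied yet.
conn.execute(
    "CREATE TABLE raw_sales (transaction_id TEXT, product_id TEXT, price TEXT)"
)
conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", raw_rows)

# Transform: defined later, inside the store, and changeable at any time.
conn.execute(
    """
    CREATE VIEW clean_sales AS
    SELECT transaction_id,
           UPPER(product_id) AS product_id,
           CAST(price AS REAL) AS price
    FROM raw_sales
    WHERE price GLOB '[0-9]*'
    """
)

rows = conn.execute(
    "SELECT product_id, SUM(price) FROM clean_sales GROUP BY product_id"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_sales").fetchone()[0]
print(rows, raw_count)
```

Because the raw table is preserved, the view can be redefined when business rules change without re-extracting anything from the sources.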

- Raw Data Load

This type of data pipeline involves moving and loading raw data between two locations. A typical example would be moving raw data between databases or from on-premises data centers to the cloud.

Such pipelines suit one-time operations but are discouraged for recurring workloads. They focus primarily on extraction and loading, which are often slow and time-consuming when handling large data volumes.

- CDC (Change Data Capture)

The CDC type of data pipeline uses the ETL batch processing approach. The difference is that it detects changes during the process and sends the information about such changes to message queues for downstream processing.
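The change-detection idea can be sketched by comparing two snapshots of a table and emitting one event per change. This snapshot comparison is a simplification chosen for illustration (production CDC tools often read the database's transaction log instead), and the event format and the `capture_changes` helper are hypothetical. A `deque` stands in for a real message queue.

```python
from collections import deque


def capture_changes(previous: dict, current: dict) -> list[dict]:
    """Compare two snapshots keyed by primary key and describe what changed."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append({"op": "insert", "key": key, "after": row})
        elif previous[key] != row:
            events.append(
                {"op": "update", "key": key, "before": previous[key], "after": row}
            )
    for key, row in previous.items():
        if key not in current:
            events.append({"op": "delete", "key": key, "before": row})
    return events


previous = {1: {"status": "pending"}, 2: {"status": "shipped"}}
current = {1: {"status": "shipped"}, 3: {"status": "pending"}}

# Change events are queued so downstream consumers can process them later.
message_queue = deque(capture_changes(previous, current))
ops = [(event["op"], event["key"]) for event in message_queue]
print(ops)
```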

The big data processing pipeline is another kind that is not mentioned in the above list. Below, we will explain in detail the role of such a pipeline, as it is a crucial type of data pipeline and requires a separate discussion.

What is a Data Pipeline in Big Data?

Data pipelines play a major role in big data. Let’s explore this role and understand how data pipelines benefit the domain of big data.

- What is Big Data?

Big data refers to massive amounts of data that are high in volume and velocity. Such data can come in various types, sizes, and formats. Today, almost all kinds of organizations generate big data in many known and unknown types and formats.

Due to its sheer size and complexity, companies today find it difficult to handle, manipulate, and analyze this data to create reports and find insights. The solution is the development of a data pipeline.

- What is a Big Data Pipeline?

As data pipelines separate computing from storage, big data processing pipelines can focus on ingesting and analyzing big data. While the big data pipeline is similar to the other types of data pipelines discussed earlier, the difference is that the scope is bigger here.

For example, data pipelines in big data deal with huge amounts of data (often in gigabytes) coming from numerous sources (often more than a hundred). The data such pipelines deal with varies greatly in format (it can be structured, semi-structured, or unstructured) and is often produced at high velocity.

- Benefits of Big Data Pipeline

Using data pipelines in big data is beneficial because it enables better event framework design, helps maintain data persistence, eases the scalability of coding, and provides a serialization framework.

The workflow is better managed due to automated data pipelines with scalability factors. Other benefits of big data pipelines include high fault tolerance, the ability to manage real-time data tracing, and automated processing, which defines proper task flow from job to job, location to location, and work to work.

- Uses of Big Data Pipeline

In today’s world, big data pipelines have multiple uses. For example, they can be used to create forecasting systems where teams like finance and marketing can easily aggregate data to report back to customers or manage product usage. Other uses include ad marketing, CRM, strategy automation, etc.

- Tools for Big Data Pipeline

Apache Spark, Hevo Data, Astera Centerprise, Keboola, and Etleap are common tools for building big data pipelines.

It’s now time to familiarize yourself with some concrete data pipeline examples, as this will allow you to understand how, in real life, such pipelines are used by organizations.

Data Pipeline Architecture Examples

A data pipeline can be made using the abovementioned types in thousands of ways. Let’s look at some data pipeline architecture examples to give you some ideas on how a data pipeline can benefit you and your organization.

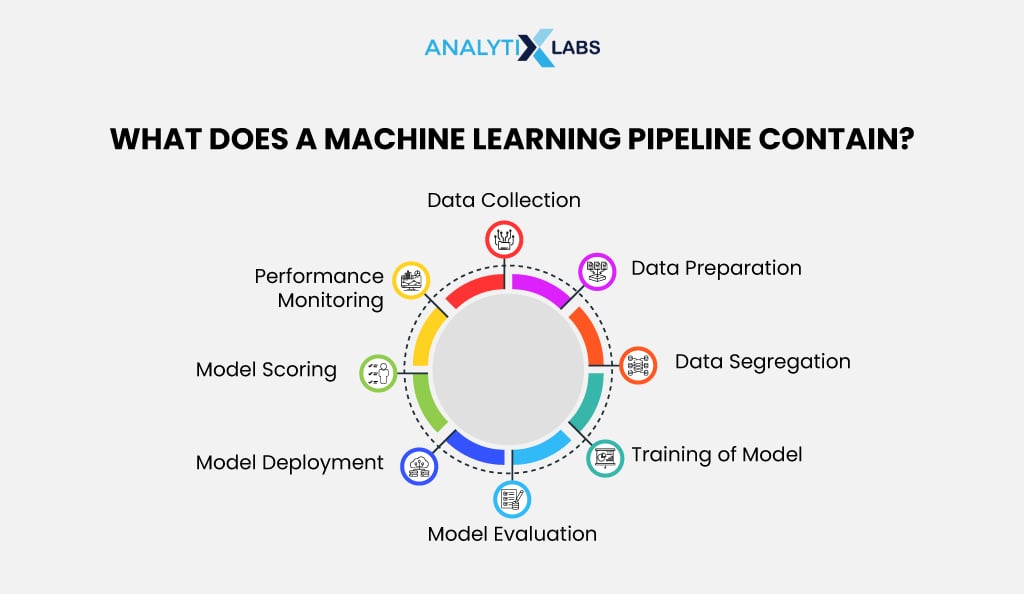

Example #1: AI and ML Pipeline

Machine learning (ML) and artificial intelligence (AI) are widely discussed, which makes the AI/ML pipeline a must-know example of a data pipeline. To create effective ML/AI models, multiple versions of the same model need to be created, requiring repeated data ingestion and processing.

Due to data pipelines, however, a modular service can be created that allows users to select the data pipeline components for quickly developing a model.

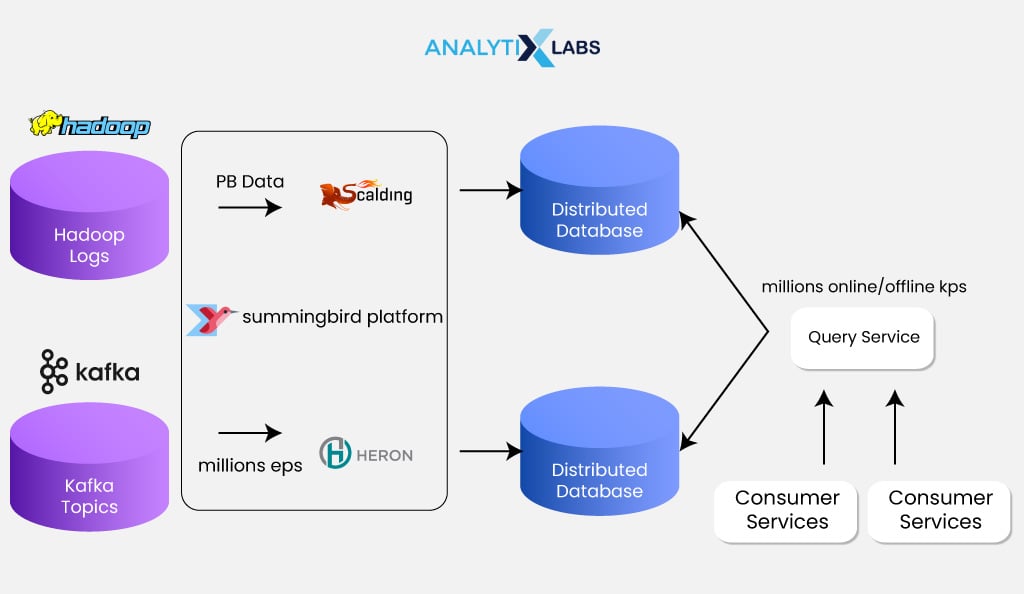

Example #2: Social Media Data Pipeline

Another instance of data pipeline architecture is the social media data pipeline, which streams data from various platforms such as Facebook and X (formerly Twitter) for analysis. This pipeline captures, stores, and analyzes every interaction, including likes, dislikes, shares, posts, comments, and more, in real time.

This allows companies to understand customer behavior and market trends, detect and respond to emergencies, flag irregularities, enforce regulations and policies, etc. For example, X’s (Twitter’s) social media data pipeline uses Heron for streaming, TSAR for batch and real-time processing, and the Data Access Layer for data consumption and discovery.

Example #3: Aviation Data Pipeline

Aviation companies employ data pipelines to constantly monitor their operations. The goal is to extract real-time data from multiple touchpoints, such as ticketing portals, boarding gates, etc., and have a holistic view of the customer experience.

For example, JetBlue’s data pipeline takes real-time data from multiple sources using Snowflake Tasks rather than a traditional pipeline structure. Data ingestion is done through Fivetran, while Monte Carlo monitors data quality, and Databricks supports the ML and AI use cases.

Other examples of data pipeline architecture include e-commerce data pipelines, healthcare facilities data pipelines, and autonomous driving systems data pipelines.

Now, let’s discuss how to build data pipelines effectively. Before diving into coding, it’s essential to understand the key considerations, including benefits, challenges, and best practices. Let’s start by exploring these major considerations.

Data Pipeline Considerations

When building data pipelines, a data engineer needs to consider many things. Answering a few crucial questions is a great way to ensure you have considered all major aspects of the process.

- Does your pipeline need to handle real-time (streaming) data?

- What velocity (rate of data) do you expect?

- What types of processing need to happen?

- How much processing needs to happen in the data pipeline?

- Is the data being generated on-premises or in the cloud?

- Where does the data need to go?

- Do you plan to build the pipeline with microservices?

- Which technologies is your team already well-versed in for data pipeline programming and maintenance?

You can create an effective data pipeline by answering all these questions and considering their answers.

Often, you need to assess whether building data pipelines is beneficial for your organization or project. To do this assessment, you must know the various advantages data pipelines provide.

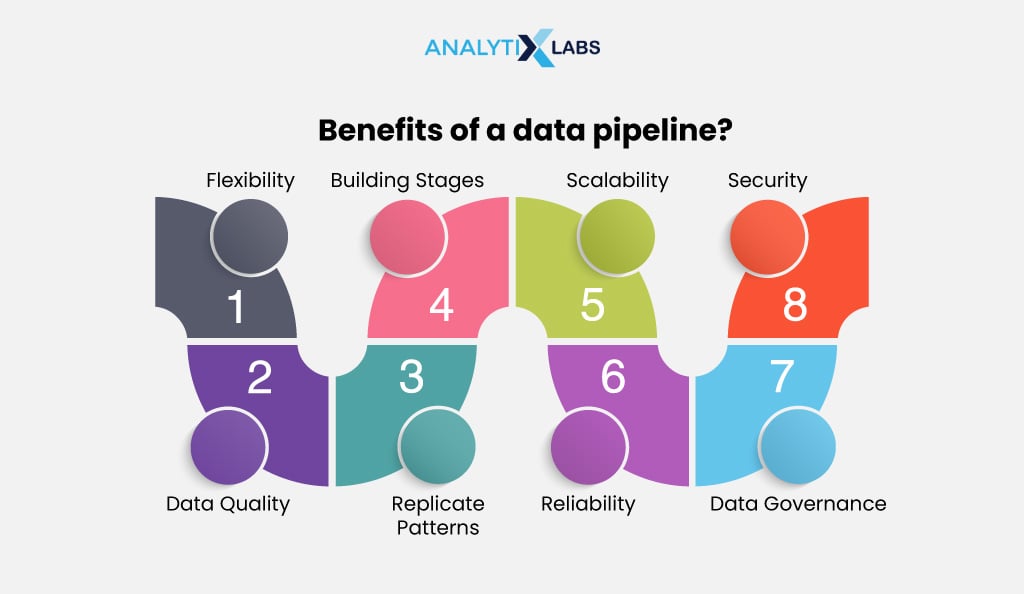

Benefits of a Data Pipeline

Creating a data pipeline has numerous benefits, no matter what project you are involved in. Some of the key advantages of using a data pipeline are as follows:

- Flexibility

Data pipelines provide a flexible and agile framework, allowing companies to respond quickly to changes in user data needs or data sources. While traditional pipelines were slow and often difficult to debug, causing delays, modern data pipelines, partly due to the cloud, provide instant elasticity at a fraction of the cost.

- Data Quality

The data pipeline helps refine and clean the data (if transformation is involved), which increases its quality. Quality data makes the information gained from it reliable, accurate, and consistent.

- Replicate Patterns

When an extensive architecture is created for a data pipeline, each section becomes reusable for new data flows. Consequently, data pipelines address the challenge of moving and processing data not only for a specific project but also for other projects requiring similar processes, helping to save time and cost.

- Building Stages

It is possible to build data pipelines incrementally. Gradual scaling up allows companies to develop such pipelines and immediately reap the benefits. As the company’s resources and demands for dealing with complex data grow, more effort can be put into building the pipeline further.

- Scalability

Data pipelines can deal with large amounts of data. This enables companies to scale their data processing and analyzing capabilities as their data size grows.

- Reliability

A well-designed data pipeline provides consistent, reliable, and accurate data processing. The automation at its core minimizes the likelihood of human-induced inaccuracies and errors.

- Data Governance

A data pipeline can easily implement many data governance practices, such as cataloging, lineage, and quality.

Furthermore, the data pipeline is iterative, so adjustments can be made whenever issues arise in data processing. This iterative approach allows continuous improvements, gradually enhancing the data’s accuracy, reliability, and completeness.

- Security

A data pipeline provides a framework for organizations to implement different security measures, such as access controls and encryption, to protect sensitive data.

As you can see, using a data pipeline has several benefits. However, organizations face many challenges when developing, maintaining, and using data pipelines. The next section discusses some of them.

Challenges of Data Pipelines

While creating and maintaining a data pipeline, organizations face several challenges. You can think of it this way: a data pipeline is like a water pipeline in a real-world plumbing system. It can break, malfunction, and require constant maintenance. Some of the most prominent issues faced by businesses when working with data pipelines are as follows:

- Complexity

Data pipelines can become highly complex, especially in large companies, making them hard to understand and manage. When data pipelines work at scale, even simple activities can become complicated, such as knowing which pipelines are in use, which applications and users rely on them, remembering their peculiarities, migrating them to the cloud, and complying with regulations.

- Cost

Building pipelines, especially complex ones at scale, is costly. The technologies are expensive, and highly trained technical staff are needed to create and work on such pipelines.

In addition, the demand for data analysis, technological advancements, and the need to migrate to the cloud are constantly evolving, requiring data engineers to create new pipelines. This adds to the cost of running data pipelines.

- Efficiency

A data pipeline might slow query performance depending on how data is transferred and replicated within an organization. For instance, the pipeline could slow down if there are many simultaneous data requests or the data size is large. This is especially true when data virtualization or multiple data replicas are involved.

Although data pipelines present numerous challenges, experts have identified many best practices to tackle the aforementioned issues over the years.

Best Practices to Build A Data Pipeline

When you, as a data science professional, build data pipelines, there are best practices you should follow to overcome the disadvantages and challenges data pipelines face. Some of the most crucial best practices to remember are as follows:

- Choosing the Right Pipeline

Choose the pipeline based on your data handling needs. If you require continuous processing of data, use continuous pipelines such as those provided by Spark Streaming. If processing should start whenever an event arrives, you can create an event-driven pipeline with AWS Lambda. Similarly, adopt batch pipelines when data latency is not an issue.

- Parallelizing Data Flow

If the input datasets are not dependent on each other, try a concurrent data flow. This can significantly reduce the runtime of data pipelines.
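A minimal sketch of a concurrent data flow, assuming three independent input datasets, could use Python's standard `concurrent.futures` module. The loader functions and their return values are hypothetical placeholders for real reads from databases, files, or APIs.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical loaders for three datasets that do not depend on each other.
def load_orders():
    return [{"order_id": 1}, {"order_id": 2}]


def load_customers():
    return [{"customer_id": "C1"}]


def load_products():
    return [{"product_id": "P1"}, {"product_id": "P2"}, {"product_id": "P3"}]


loaders = {
    "orders": load_orders,
    "customers": load_customers,
    "products": load_products,
}

# Submit all loaders at once so they run concurrently, then collect results.
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = {name: pool.submit(loader) for name, loader in loaders.items()}
    datasets = {name: future.result() for name, future in futures.items()}

print({name: len(rows) for name, rows in datasets.items()})
```

Threads suit I/O-bound loading such as network or disk reads; for CPU-heavy transformations, a process pool or a distributed engine is usually a better fit.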

- Applying Data Quality Checks

Data pipelines can sometimes lead to erroneous data inclusion, especially during processing. To maintain data quality, schema-based and other types of checks must be employed at various points in the pipeline.
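A schema-based check can be as simple as comparing each record against the expected field names and types. The schema, the sample records, and the `check_schema` helper below are assumptions made for illustration; dedicated validation libraries offer far richer checks.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"customer_id": str, "product_id": str, "amount": float}


def check_schema(record: dict, schema: dict = EXPECTED_SCHEMA) -> list[str]:
    """Return a list of problems found in the record (empty if it conforms)."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    return problems


good = {"customer_id": "C1", "product_id": "P1", "amount": 699.0}
bad = {"customer_id": "C2", "amount": "699"}

print(check_schema(good))
print(check_schema(bad))
```

Running such a check at several points (after acquisition, after transformation, before loading) makes it easier to see which stage introduced a bad record.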

- Creating Generic Pipelines

Design data pipelines with modularity in mind, allowing reusable code components to be repurposed in future builds if similar pipelines are needed.

- Monitoring and Alerting

It is difficult to monitor the numerous runs of the data pipeline. As a pipeline owner, you need to know how effective the pipeline is, where it is failing, what issues the users face, etc.

One way is to set email notifications that constantly update you on job successes and failures. This enables shorter response times in case of a pipeline issue. Logs must also be maintained, which can help troubleshoot any issues.
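The combination of notifications and logs can be sketched with a small job wrapper. Here `send_alert` is a hypothetical stand-in that simply collects messages in a list; in practice it would call an email or chat service. The job names are also illustrative.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")


def send_alert(message: str, outbox: list) -> None:
    """Stand-in for an email or chat notification; it only records the message."""
    outbox.append(message)


def run_job(name: str, job, outbox: list):
    """Run one pipeline job, log the outcome, and notify on success or failure."""
    try:
        result = job()
    except Exception as exc:
        logger.exception("job %s failed", name)  # full traceback goes to the log
        send_alert(f"FAILED {name}: {exc}", outbox)
        return None
    logger.info("job %s succeeded", name)
    send_alert(f"OK {name}", outbox)
    return result


def failing_job():
    raise ValueError("source unavailable")


alerts = []
run_job("load_sales", lambda: 42, alerts)
run_job("load_refunds", failing_job, alerts)
print(alerts)
```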

- Implementing Documentation

A proper document explaining the data flow through diagrams must be maintained. In addition, the code must have proper comments. This can help new team members quickly understand the architecture and avoid making mistakes.

- Design Modular Pipelines

As the user’s demands change, so does the data pipeline. Therefore, you must create modular and automated pipelines that can be easily changed. This helps keep maintenance and redevelopment costs low and facilitates future integration.

- Use Data Pipeline Framework

A smart way of creating a data pipeline is to use pre-existing data pipeline frameworks such as Apache Kafka or Apache NiFi. This can help simplify the development process.

- Message Queue

Using message queues to decouple various pipeline stages enhances the pipeline’s resilience to failure.
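The decoupling effect can be demonstrated in miniature with Python's standard `queue` and `threading` modules. An in-process `queue.Queue` stands in for a real message broker here, and the producer and consumer stages are hypothetical.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream


def producer(q: queue.Queue, records: list) -> None:
    """Upstream stage: publishes records without knowing who consumes them."""
    for record in records:
        q.put(record)  # blocks while the queue is full (back-pressure)
    q.put(SENTINEL)


def consumer(q: queue.Queue, out: list) -> None:
    """Downstream stage: processes records at its own pace."""
    while True:
        item = q.get()
        if item is SENTINEL:
            break
        out.append(item * 2)


buffer = queue.Queue(maxsize=2)  # small buffer between the two stages
processed = []

t_producer = threading.Thread(target=producer, args=(buffer, [1, 2, 3, 4]))
t_consumer = threading.Thread(target=consumer, args=(buffer, processed))
t_producer.start()
t_consumer.start()
t_producer.join()
t_consumer.join()

print(processed)
```

Neither stage calls the other directly, so a slow consumer only causes the producer to wait on the queue. A durable broker extends the same idea across processes and machines, so messages can survive a stage going down.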

- Version Control

Version control should be used for the pipeline code and configuration. This can help track changes and perform a rollback if necessary.

- Scalable Storage

When managing substantial data volumes, scalable storage solutions such as distributed file systems or cloud-based data lakes are advisable.

- Test and Deploy

Thorough testing of the data pipeline is essential before deploying it into production. Additionally, continuous performance monitoring is crucial even after deployment.

Next, we will discuss some of the most crucial tools to develop data pipelines.

Data Pipeline Tools and Technologies

Several tools are involved in building and maintaining data pipelines.

- Data Warehouses like Amazon Redshift, Google BigQuery, Snowflake, Azure Synapse, and Teradata act as central repositories to store the processed (transformed) data for particular purposes. These tools support ETL as well as ELT processes. In addition, they allow for stream data loading, making them crucial for data pipelines.

- Data Lakes allow you to store raw data in native formats. They’re commonly used to develop ELT-based big data pipelines, which companies use for their analytical and machine learning projects. Most cloud platforms, such as AWS, GCP, and Azure, offer data lakes.

- Batch Workflow Schedulers like Luigi or Azkaban allow users to monitor and automate pipeline workflows. Workflows can be programmatically created in the form of tasks using such tools.

- Real-time data streaming tools typically used for data pipeline development are Apache Kafka, Apache Storm, Amazon Kinesis, Azure Stream Analytics, Google Cloud Dataflow, SQLstream, and IBM Streaming Analytics. They allow the processing of information generated continuously by sources like IoT (Internet of Things), IoMT (Internet of Medical Things), and machinery sensors.

- ETL tools like Oracle Data Integrator, IBM DataStage, Informatica PowerCenter, and Talend Open Studio are also used for data pipeline development.

- Big Data Tools are commonly used in data pipelines dealing with large data volumes. These tools include Hadoop and Spark (for batch processing), Spark Streaming (for streaming analytics, extending the capabilities of core Spark), Apache Airflow and Apache Oozie (for scheduling and monitoring batch jobs), and Apache HBase and Apache Cassandra (NoSQL databases for storing and managing large datasets).

- SQL is a programming language used in data pipelines to manage data available in relational databases.

- Scripting languages like Python are also commonly used.

Before completing this article, let’s address an issue faced by individuals learning about data pipelines for the first time: understanding how they differ from ETL.

Data Pipeline vs. ETL

A common mistake individuals make when learning about data pipelines is using the terms data pipeline and ETL interchangeably. They are, however, two different things.

ETL (Extract, Transform, Load) is a fundamental technology in data processing pipelines. It involves batch processing to move data from a source to a target system, such as a data warehouse or data bank. During this process, various transformations, such as aggregation, filtering, and sampling, are applied to the data.

Data pipelines also entail data movement but may or may not involve transformation. Organizations commonly use them for reporting, data analysis, and machine learning tasks. Data pipelines typically operate with a continuous data flow, while ETL processes usually run on a fixed schedule.

Data pipelines may not always conclude with loading data into a data warehouse or bank; they can load data into multiple destination systems, such as AWS buckets or data lakes.

Conclusion

The need for data pipelines is on the rise. This can be gauged from the global data pipeline tools market, which is expected to grow at a CAGR of 20.3% to reach $17.6 billion by 2027.

Despite challenges such as inadequate workforce skills, knowledge gaps, and data downtime issues, the market is progressively embracing modern data pipelines to ensure seamless operations.

In the future, data pipelines will become essential tools for handling and analyzing massive datasets. As a data science professional, you should learn more about data pipelines because they allow companies to automate and streamline the data transformation process, saving time and resources.

FAQs:

- Is the data pipeline an ETL?

No, data pipeline and ETL are different terms. ETL means extracting, transforming, and loading data, and it performs all three operations. In a data pipeline, the transformation step may or may not be included. Also, ETL focuses more on individual batches of data, whereas data pipelines emphasize working with ongoing data streams in real time.

- What is an example of a data pipeline?

Numerous examples of data pipelines exist. One such example is the music streaming platform Spotify, which uses data pipelines to analyze data to understand user preferences and recommend music.

- Is SQL a data pipeline?

No. SQL is a data query language that consumers typically use to extract relevant data at the end of the data pipeline process.

- What is a data pipeline in AWS?

AWS is a cloud platform that provides AWS Data Pipeline, a web service that moves data between various AWS computing and storage services at specified intervals.