Recent research shows progress in strengthening deepfake detection, sometimes by looking past the subtle artifacts of specific generation tools and instead relying on underlying physical and biological cues that are hard for AI to replicate. A study conducted by Christopher Doss and colleagues found that only 46% of adults could tell the difference between real and deepfake videos.

Reliable media authentication is crucial in the digital age. Given the rising incidence of synthetic content, it is essential to have systems that can confirm the legitimacy of videos, audio, photos, and text.

This article covers what a deepfake is, the types of deepfakes, and the evolution of and advances in media authentication.

Deepfake – What is it?

A deepfake is a piece of media, typically an image, video, or audio recording featuring a human subject, that has been created or deliberately altered with artificial intelligence (AI), specifically deep neural networks (DNNs), to change a specific person’s identity.

Computer scientists keep developing better ways to algorithmically produce text, images, audio, and video, usually for positive purposes such as helping artists realize their visions. Deepfakes are one of the reasons they also develop counter-algorithms to identify such synthetic content.

How does authentication help?

Authentication helps stop the spread of false information and fake news by ensuring that consumers can rely on the content they consume. Furthermore, trustworthy media authentication shields people and organizations from possible reputational harm from modified or fabricated material.

Deep learning has contributed significantly to modernizing media authentication. Deep learning algorithms are good at spotting modified or misrepresented content because they can examine large amounts of data and uncover patterns in it.


By training on large amounts of real and altered media, these algorithms can learn to distinguish authentic content from synthetic content with high accuracy. This can make media authentication processes considerably faster and more effective, offering a solid barrier against the spread of false information.

How to Understand Deepfakes?

The term “deepfake” has become a byword for digital deception, sparking both worry and interest. A picture or video altered using machine learning algorithms is called a deepfake.

In a face swap, for example, the computer has been “taught” to replace one person’s face with another. The societal and security concerns this morphing technology raises include video tampering and fake news.

Exploring the Types of Deepfakes

A deepfake is fundamentally a piece of synthetic media that uses AI to convincingly alter or replace existing content. Many kinds of deepfakes exist, including audio and video manipulation, but face swaps and voice synthesis are the most popular.

(1) Face swaps

This type of deepfake places one person’s face on another person’s body, producing an image that can be very hard to spot. Complex algorithms analyze facial features, expressions, and lighting to produce a realistic result.
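The last step of a face swap, blending the swapped face into the target frame, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not any real tool’s pipeline: the source face is assumed to be already aligned to the target, images are small grayscale 2D lists, and the hypothetical helpers `match_lighting` and `blend_face` use mean and standard-deviation matching as a crude stand-in for lighting correction before mask-based alpha blending.

```python
import statistics

# Hypothetical helpers for illustration; real face-swap tools use far more elaborate pipelines.

def match_lighting(face, target):
    """Rescale face pixels so their mean and standard deviation match the
    target region's (a crude stand-in for lighting/color correction)."""
    f = [p for row in face for p in row]
    t = [p for row in target for p in row]
    f_mean, t_mean = statistics.fmean(f), statistics.fmean(t)
    f_std, t_std = statistics.pstdev(f), statistics.pstdev(t)
    scale = t_std / f_std if f_std > 0 else 1.0
    return [[(p - f_mean) * scale + t_mean for p in row] for row in face]


def blend_face(face, target, mask):
    """Alpha-blend an aligned face into the target region.
    Mask values lie in [0, 1]: 1 = use the swapped face, 0 = keep the original pixel."""
    adjusted = match_lighting(face, target)
    return [
        [m * a + (1 - m) * t for a, t, m in zip(a_row, t_row, m_row)]
        for a_row, t_row, m_row in zip(adjusted, target, mask)
    ]


face = [[0, 10], [10, 0]]
target = [[50, 70], [50, 70]]
mask = [[1, 1], [1, 0.5]]  # soft edge at the bottom-right pixel
print(blend_face(face, target, mask))  # → [[50.0, 70.0], [70.0, 60.0]]
```

The soft mask value at the edge mixes the two images, which is one simple way to hide the seam that a hard cut would leave.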

(2) Voice synthesis

Deepfake technology can also manipulate audio so that a person’s voice appears to say things they never said. By analyzing recordings of the target speaker’s speech patterns and tones, AI systems can generate new audio that closely resembles that voice, further blurring the line between fact and fiction.

One example of this kind of voice simulation is the deepfake of Eminem’s voice that DJ David Guetta used to perform a verse at one of his concerts. He made it by combining two AI tools that generate music.

(3) Generative adversarial networks

Central to creating deepfakes is a cutting-edge technology known as Generative Adversarial Networks (GANs). GANs consist of two neural networks: a generator and a discriminator.

The generator crafts synthetic content while the discriminator evaluates its authenticity. Through a continuous feedback loop, these networks refine their abilities, resulting in increasingly convincing deepfake outputs.

This technology uses immense datasets to understand and replicate intricate patterns in human features, expressions, and voices. The capacity of GANs to produce content that seems extremely legitimate comes from learning from tens of thousands of instances, which can make it difficult to tell the difference between real and artificial content.
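In practice, this feedback loop is driven by two opposing loss functions that are minimized in alternation. The sketch below assumes the standard binary cross-entropy formulation with the widely used "non-saturating" generator loss; `d_real` and `d_fake` are hypothetical lists of discriminator outputs, each a probability that the input is real. It computes only the losses, not a full training loop.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push scores for real samples toward 1
    and scores for generated samples toward 0."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: G improves when D scores its
    samples as real (close to 1)."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere:
print(discriminator_loss([0.5], [0.5]))  # 2 * ln 2, about 1.386
# Fooling the discriminator lowers the generator's loss:
print(generator_loss([0.9]) < generator_loss([0.1]))  # True
```

Each network's gain is the other's loss, which is why improvements on one side force improvements on the other.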

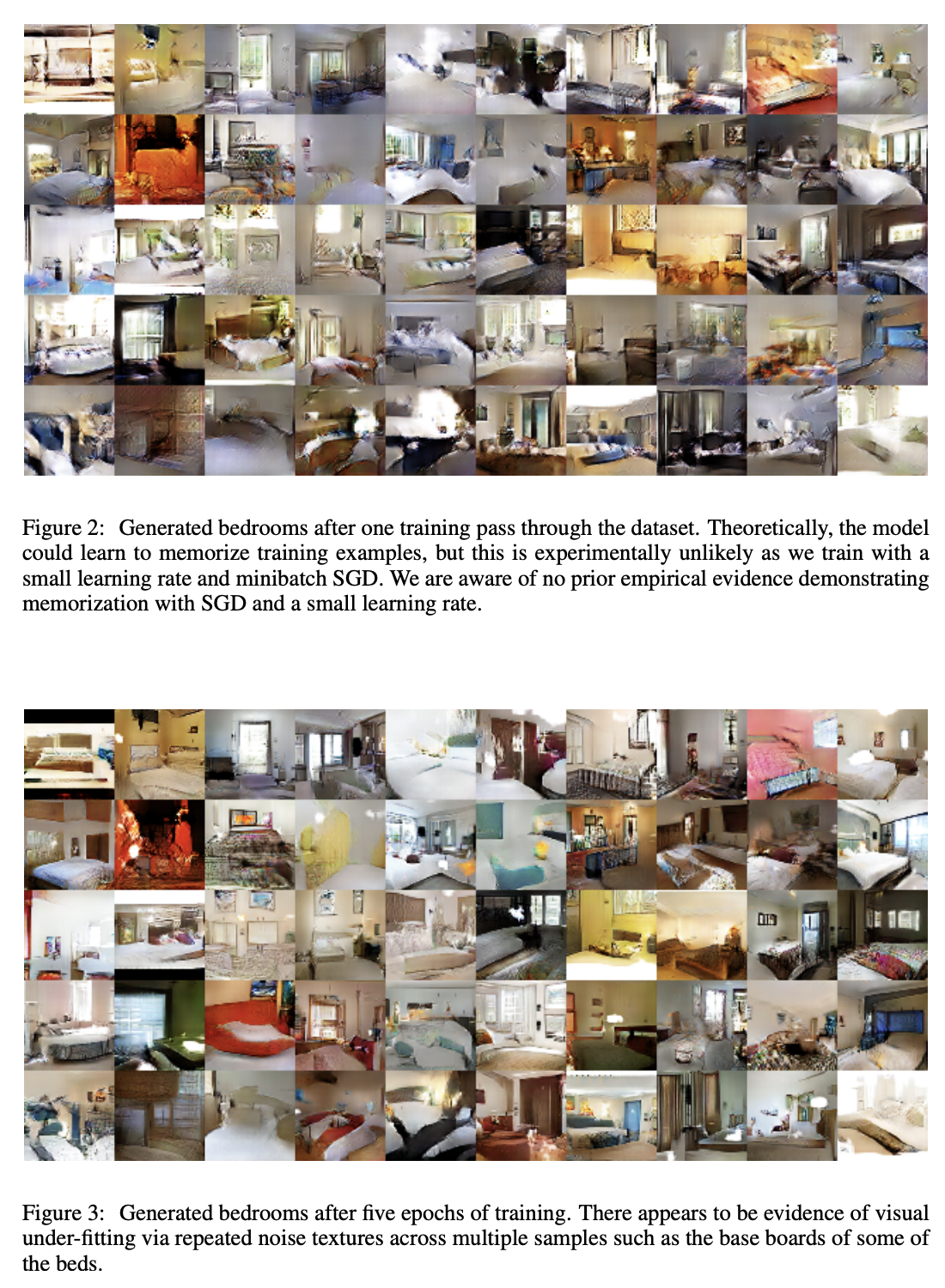

The 2015 paper “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks” by Alec Radford et al. introduced DCGAN, a convolutional GAN architecture, and showed how to train GANs stably at a large scale. The authors used it to generate new images of bedrooms.

Notable instances of Deepfakes

The development of AI and deep learning has made it possible to create realistic computational representations of people, places, and objects. Deepfakes are one use of this technology: they use machine learning to overlay new faces onto existing footage.

In March 2022, a video of Ukrainian President Volodymyr Zelensky requesting that his soldiers lay down their weapons in the face of a Russian invasion surfaced online.

The AI-created video was of poor quality, and the hoax was swiftly exposed. However, because artificial content is becoming easier to manufacture and more convincing, a similar attempt could someday have severe geopolitical repercussions.

Another example is @deeptomcruise, a famous TikTok account of Tom Cruise deepfakes that has become increasingly popular over the past few years.

The videos still have that uncanny valley look, but the account owner has perfected the actor’s voice and mannerisms. The TikTok account uses a deepfake app to make some of the most believable deepfakes to date.

The Urgent Need for Reliable Authentication

Deepfake technology is a hotly debated topic. The main concern is that deepfakes can be used to spread false information and cause societal problems, for example by making online scams more common and harming communities’ well-being. In the information age, the spread of false information is a serious problem whose effects reach well beyond the original lie.

Threats of Using Deepfakes

Deepfakes can threaten personal and organizational reputation and raise related concerns, including:

- Loss of trust

- Diminished credibility

- Crisis amplification

- Potential legal and ethical ramifications

- Defamation and libel

- Privacy infringement

- Social responsibility and accountability

Misinformation can cause reputational harm, damage a person’s image, and create financial liabilities. It also raises ethical issues and could lead to legal action for privacy infringement.

Accountability and social responsibility are essential to upholding moral behavior. People and organizations that deliberately help spread false information may face moral questions about their social obligations.

In an increasingly interconnected world, it is crucial to authenticate information. Addressing this complex problem requires promoting media literacy and upholding the ideals of truth and integrity.

Evolution of Deep Learning in Media Authentication

Deep learning has transformed media authentication, moving the field from traditional techniques to cutting-edge ones.

Early methods for determining whether content was real relied on rule-based techniques and hand-crafted features. However, these techniques frequently fell short against complex alterations and new manipulation methods.

Deep learning changed the game thanks to the ability of neural networks to learn from large amounts of data, ushering media authentication into a new, more capable era.


Sakorn Mekruksavanich and Anuchit Jitpattanakul investigated deep learning approaches for continuous authentication based on the activity patterns of mobile users. Their research showed that continuous authentication depends on continuously monitoring how people behave, which requires sensors that capture the user’s behavioral biometrics.
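Sensor-based continuous authentication typically begins by cutting the raw sensor stream into overlapping windows that a model can classify one at a time. The sketch below is a generic illustration, not Mekruksavanich and Jitpattanakul’s pipeline: the window size, the step, and the simple per-axis features (mean and standard deviation) are assumptions, and a deep learning model would often consume the raw windows directly instead of hand-picked features.

```python
import statistics


def sliding_windows(samples, size, step):
    """Split a sequence of (x, y, z) sensor readings into overlapping windows."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def window_features(window):
    """Summarize a window as [mean_x, std_x, mean_y, std_y, mean_z, std_z]."""
    features = []
    for axis in zip(*window):  # transpose into per-axis value tuples
        features.append(statistics.fmean(axis))
        features.append(statistics.pstdev(axis))
    return features


# Toy accelerometer stream: 10 readings, windows of 4 samples with 50% overlap
stream = [(i, 0, 1) for i in range(10)]
windows = sliding_windows(stream, size=4, step=2)
print(len(windows))                 # 4
print(window_features(windows[0]))  # mean/std of x = 0..3, y = 0, z = 1
```

Each window (or its feature vector) would then be labeled with the user who produced it and used to train a classifier that keeps checking, window after window, whether the current behavior still matches the enrolled user.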

Deep learning-based methods can adapt to and learn from changing data, handle constantly evolving forgery techniques, achieve high accuracy, and speed up identification. However, deep learning also has drawbacks, such as the risk of overfitting and the need for large amounts of training data.

Despite these limitations, deep learning remains a strong ally in advancing media authentication and in developing secure digital content.

Techniques for Detecting Deepfakes

The rise of deepfakes has caused serious concern about misinformation and authenticity. Digital illusions now make it hard to distinguish fact from fiction, and as these forgeries grow more sophisticated, effective methods for detecting them become more important.

This section looks at sophisticated deepfake detection methods: the tools and creative strategies used to stop online fraud and to preserve reality and trust in a quickly changing digital world.

(1) Facial and body movements

Facial and body movement analysis examines the subtle details of human expression that reveal whether footage is authentic.

By reading facial features, micro-expressions, gaze, and blink patterns, experts can defend against even advanced deepfakes and bring the truth out from behind the digital curtain.

- Facial landmarks and micro-expressions

How faces and bodies move is central to understanding how people talk and to spotting deepfakes. Researchers at Stanford University and the University of California developed the Phoneme-Viseme Mismatch method.

This method uses AI algorithms to analyze videos for differences between the sounds being spoken (phonemes) and the shape of the mouth (visemes). Such mismatches are common in deepfakes because AI has trouble matching mouth movements to spoken words, so finding one is a strong sign that a video is a deepfake.

Facial cues, micro-expressions, gaze, and blink patterns all give clues about feelings and responses, which makes them valuable for spotting false content. They reflect real emotions that are hard for deepfake makers to copy exactly.
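The phoneme-viseme idea can be illustrated with a simplified check. This sketch is not the researchers’ implementation: it assumes phonemes have already been time-aligned to video frames and that a hypothetical per-frame `mouth_openness` score between 0 and 1 has been measured from lip landmarks. It focuses on the sounds M, B, and P, which require the lips to close, and the 0.2 closure threshold is an arbitrary assumption.

```python
BILABIALS = {"M", "B", "P"}  # sounds that require the lips to close


def mismatch_frames(phonemes, mouth_openness, closed_threshold=0.2):
    """Return frame indices where a bilabial sound is spoken but the
    mouth is visibly open (a phoneme-viseme mismatch)."""
    return [
        i
        for i, (ph, openness) in enumerate(zip(phonemes, mouth_openness))
        if ph in BILABIALS and openness > closed_threshold
    ]


def mismatch_rate(phonemes, mouth_openness, closed_threshold=0.2):
    """Fraction of bilabial frames that show a mismatch."""
    bilabial = sum(ph in BILABIALS for ph in phonemes)
    if bilabial == 0:
        return 0.0
    return len(mismatch_frames(phonemes, mouth_openness, closed_threshold)) / bilabial


# Hypothetical aligned data for six frames
phonemes = ["HH", "AH", "M", "M", "B", "AA"]
mouth_openness = [0.5, 0.8, 0.05, 0.6, 0.1, 0.9]
print(mismatch_frames(phonemes, mouth_openness))  # [3]
print(mismatch_rate(phonemes, mouth_openness))    # 0.333...
```

A genuine video should show a low mismatch rate; a consistently high rate would be a reason to inspect the clip more closely.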

- Gaze tracking and blink patterns

Gaze tracking assesses authenticity from eye movements: genuine gaze patterns mirror natural human behavior, so they can help distinguish authentic content from manipulated content.

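Blink patterns reveal intricate details about an individual’s physiological and psychological state, offering insights into emotional fluctuations and cognitive processes. Unnatural blinking, such as blinking far less often than real people do, can therefore be a sign of manipulation.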
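Blinking is commonly measured from video with the eye aspect ratio (EAR), computed from six landmarks around each eye; the ratio drops sharply when the eye closes. The sketch assumes the landmarks p1 to p6 are already available for each frame (p1 and p4 at the eye corners, p2 and p3 on the upper lid, p6 and p5 below them on the lower lid) and uses an illustrative, uncalibrated threshold of 0.2 and a two-frame minimum per blink.

```python
import math


def eye_aspect_ratio(p):
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for landmarks p[0]..p[5]."""
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])
    horizontal = math.dist(p[0], p[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    """Blink rate over the whole clip, given its frame rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))  # 4 / 6, about 0.667

ears = [0.3, 0.3, 0.1, 0.1, 0.3, 0.15, 0.3, 0.1, 0.1, 0.1]
print(count_blinks(ears))  # 2 (the single low frame at index 5 is ignored)
```

The resulting blink rate could then be compared with typical human rates; a subject who almost never blinks over a long clip would stand out.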

(2) Audio analysis

Audio analysis goes beyond deciphering sound: it distinguishes genuine speech from manipulated speech, making it a steadfast ally in the quest for authenticity.

It protects the integrity of spoken words by closely examining the following:

- Voice modulation and synthesis detection

Audio analysis is a powerful tool against digital deception because it helps verify whether a voice is authentic. It does this by examining voice modulation, signs of synthesis, prosody, and speech anomalies.

Voice modulation analysis deciphers the natural ebb and flow of speech, examining the harmonious cadence of genuine voices. Synthesis detection identifies artificial creations, revealing traces of digital origins.
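Natural speech rises and falls in pitch. One rough way to quantify that "ebb and flow" is to estimate the fundamental frequency (F0) of short frames and measure how much it varies. The sketch below uses plain autocorrelation pitch estimation; the 75 to 400 Hz search range, the test tones, and the idea that unusually flat pitch is suspicious are assumptions for illustration, not a validated detector.

```python
import math
import statistics


def estimate_pitch(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate F0 of one frame as the lag with the highest autocorrelation
    inside the plausible range of human voice pitch. Returns None for silence."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, min(max_lag, len(frame) - 1) + 1):
        corr = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None


def pitch_variability(frames, sample_rate):
    """Coefficient of variation of F0 across voiced frames; values near zero
    indicate an unusually monotone voice."""
    pitches = [p for p in (estimate_pitch(f, sample_rate) for f in frames) if p]
    if len(pitches) < 2:
        return 0.0
    return statistics.pstdev(pitches) / statistics.fmean(pitches)


def tone(freq, sample_rate=8000, n=400):
    """Synthetic pure tone, used here only to exercise the functions."""
    return [math.sin(2 * math.pi * freq * i / sample_rate) for i in range(n)]


print(estimate_pitch(tone(200), 8000))                  # 200.0
print(pitch_variability([tone(200), tone(160)], 8000))  # about 0.111
```

Real recordings would need windowing, voicing detection, and a more robust pitch tracker, but the underlying measurement is the same.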

- Prosody and speech anomalies

Prosody, the rhythm, stress, and intonation of speech, is central to effective communication, and deepfake developers frequently struggle to capture the subtle prosody that distinguishes human speech.

By analyzing these subtle patterns, experts can identify abnormalities that may indicate manipulation or fabrication. Irregularities in pronunciation, pauses, and voice rhythm act as breadcrumbs that reveal whether an audio recording is legitimate.
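Pauses can be measured directly from a recording’s energy envelope. This minimal sketch assumes the audio has already been split into short frames, treats any frame whose RMS energy falls below a fixed, hypothetical silence threshold as part of a pause, and reports pause lengths in frames; a production system would use a proper voice activity detector.

```python
import math


def frame_energy(frame):
    """Root-mean-square energy of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def pause_lengths(frames, silence_threshold=0.01):
    """Lengths (in frames) of each run of consecutive low-energy frames."""
    lengths, run = [], 0
    for frame in frames:
        if frame_energy(frame) < silence_threshold:
            run += 1
        elif run:
            lengths.append(run)
            run = 0
    if run:
        lengths.append(run)
    return lengths


loud, quiet = [0.5] * 10, [0.0] * 10
frames = [loud, quiet, quiet, loud, quiet, loud, loud, quiet, quiet, quiet]
print(pause_lengths(frames))  # [2, 1, 3]
```

Comparing the distribution of these lengths with that of natural speech is one possible way to surface the overly regular or oddly placed pauses that synthetic audio can exhibit.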

(3) Behavioral analysis

Behavioral analysis is a powerful tool for verifying authenticity in the digital world. It looks at movements and contextual elements to reveal intentions and motivations.

- Mouse movement and interaction patterns

Mouse movement analysis examines the rhythms and quirks of how individuals move the cursor, which helps characterize what an authentic user looks like.

Interaction patterns add further evidence of genuine human behavior. Together, these signals help behavioral analysis set real users apart from synthetic impersonators.
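Two simple features often used to characterize cursor movement are path straightness and speed variability. The sketch below assumes cursor samples arrive as hypothetical `(t, x, y)` tuples; it illustrates the features only and does not reproduce any particular product’s bot detection.

```python
import math
import statistics


def trajectory_features(points):
    """Return (straightness, speed_cv) for a list of (t, x, y) cursor samples.

    straightness: straight-line distance / total path length (1.0 = perfectly straight)
    speed_cv: coefficient of variation of segment speeds (0.0 = perfectly constant)
    """
    path, speeds = 0.0, []
    for (t0, x0, y0), (t1, x1, y1) in zip(points, points[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        path += step
        if t1 > t0:
            speeds.append(step / (t1 - t0))
    (_, xs, ys), (_, xe, ye) = points[0], points[-1]
    straightness = math.hypot(xe - xs, ye - ys) / path if path else 1.0
    mean_speed = statistics.fmean(speeds) if speeds else 0.0
    speed_cv = statistics.pstdev(speeds) / mean_speed if mean_speed else 0.0
    return straightness, speed_cv


scripted = [(i, i * 10, 0) for i in range(5)]  # straight line at constant speed
human = [(0, 0, 0), (1, 5, 5), (3, 10, 0)]     # curved path, changing speed
print(trajectory_features(scripted))  # (1.0, 0.0)
print(trajectory_features(human))
```

Scripted cursors tend to score close to (1.0, 0.0), while human movement is usually curved and uneven, so values near that corner are one signal worth combining with others.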

- Anomaly detection in contextual elements

Anomaly detection in contextual elements is another crucial aspect of behavioral analysis. These anomalies are often minute yet significant, serving as digital breadcrumbs that lead experts toward the truth. Behavioral analysis examines them closely to expose manipulation.
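A common baseline for flagging such anomalies is a z-score test, which measures how many standard deviations a new observation lies from a user’s past behavior. The sketch assumes a hypothetical numeric contextual feature (such as the time between actions), at least two past observations, and an arbitrary cutoff of three standard deviations.

```python
import statistics


def is_anomalous(history, value, cutoff=3.0):
    """Flag `value` if it lies more than `cutoff` sample standard deviations
    from the mean of the user's past observations (needs >= 2 observations)."""
    mean = statistics.fmean(history)
    std = statistics.stdev(history)
    if std == 0:
        return value != mean  # any deviation from a perfectly constant history
    return abs(value - mean) / std > cutoff


history = [2.0, 2.2, 1.8, 2.1, 1.9]  # e.g. seconds between a user's actions
print(is_anomalous(history, 2.3))  # False
print(is_anomalous(history, 0.1))  # True
```

A single threshold like this is only a starting point; in practice several contextual features would be scored together.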

In summary, behavioral analysis examines the following to reveal intentions and motivations:

- Mouse movements

- Interaction patterns

- Anomaly detection

- Contextual factors

Dataset and Data Model Development

Building datasets and models is essential for detecting deepfakes, where deception frequently hides behind apparent sincerity. By curating large, diverse deepfake datasets containing both real and manipulated media, experts enable algorithms to learn the minute details that separate real media from artificial media.

Training deep learning models

Two deep learning architectures, convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are commonly trained to recognize authenticity. CNNs are adept at extracting visual patterns, while RNNs model sequential data.

Together, these architectures help models detect minute traces of digital manipulation, such as unnatural facial expressions (visual patterns) and speech intonation (sequential patterns).
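At the core of a CNN is the 2D convolution, which slides a small kernel over an image to produce a feature map. CNN layers learn their kernel weights from data; the sketch below instead uses a fixed, hand-picked vertical-edge kernel purely to show what the operation computes. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation of a 2D list `image` with a 2D list `kernel`."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Non-linearity typically applied after each convolution in a CNN layer."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-picked vertical-edge kernel; a trained CNN would learn its own weights.
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

dark_to_light = [[0, 0, 0, 1, 1]] * 3
print(conv2d(dark_to_light, VERTICAL_EDGE))  # [[0, 3, 3]]: strong response at the edge
```

Stacking many such learned filters, each followed by a non-linearity, is what lets a CNN respond to progressively more complex visual cues, including the blending seams and texture artifacts that manipulation can leave behind.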

Transfer learning and fine-tuning for enhanced performance

Transfer learning and fine-tuning let experts apply what models have already learned to the problem of spotting deepfakes. A model pre-trained on one task can transfer its knowledge to deepfake detection and then be fine-tuned on deepfake data, which typically needs less labeled data and training time than training from scratch. This helps the model become adept at spotting elusive signs of manipulation.
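In its simplest form, transfer learning freezes a pre-trained feature extractor and trains only a small new classification head on the target task. The sketch below is schematic: `pretrained_features` is a hypothetical stand-in for a real pre-trained network (here just a fixed function), the toy inputs are made up, and only the logistic-regression head’s weights are updated by gradient descent. Full fine-tuning would also update some or all of the extractor’s weights, usually with a small learning rate.

```python
import math


def pretrained_features(x):
    """Hypothetical frozen extractor; a real one would be a pre-trained CNN."""
    return [x[0] + x[1], x[0] - x[1], 1.0]  # last entry acts as a bias input


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=200):
    """Train only the logistic-regression head; the extractor stays frozen."""
    feats = [pretrained_features(x) for x in samples]  # computed once, never updated
    w = [0.0] * len(feats[0])
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Gradient of binary cross-entropy w.r.t. each head weight is (p - y) * f_i
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w


def predict(w, x):
    """Probability that input x is manipulated (label 1)."""
    return sigmoid(sum(wi * fi for wi, fi in zip(w, pretrained_features(x))))


# Made-up toy data: label 0 = real, 1 = manipulated
samples = [(0.0, 0.1), (0.1, 0.0), (0.2, 0.1), (1.0, 0.9), (0.9, 1.0), (1.1, 1.0)]
labels = [0, 0, 0, 1, 1, 1]
w = train_head(samples, labels)
print(predict(w, (1.0, 1.0)) > 0.5, predict(w, (0.0, 0.0)) < 0.5)
```

Because only three head weights are trained, far fewer labeled examples are needed than for training the whole network, which is the main practical appeal of this approach.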

Developing datasets and models shapes the lens people use to judge digital authenticity. Experts equip themselves with practical tools to distinguish reality from the digital mirage by:

- Collecting a variety of datasets

- Training neural models

- Honing their skills through transfer learning and fine-tuning

Deepfake Detection Tools and Platforms

Tools and platforms for detecting deepfakes are more than just software; they are sentinels of truth that stand firm against the spread of false information.

They range from commercial to open-source tools, each striking its own balance between accuracy and ease of use. Integrating them into social media and content platforms adds another layer of protection that helps keep the digital world safe for accurate information.

Commercial and Open-Source Tools

Most tools on the market for uncovering manipulation in digital content are either commercial or open-source. Each uses its own algorithms and methods to differentiate real content from fake content. These instruments, from AI-driven software to specialized platforms, aim to dispel digital illusions.

- Commercial Tools

Commercial deepfake detection tools are a market where innovation and technology coexist. They analyze digital content using cutting-edge algorithms and machine learning.

These tools offer real-time monitoring, user-friendly interfaces, and workflow integration to meet the varied needs of individuals and businesses. They come with strong support, regular updates, and promises to stay on the cutting edge of technology.

However, these tools usually require subscription plans or licensing fees, which makes them an investment for anyone searching for digital truth.

- Open-source Tools

Open-source deepfake detection solutions offer accessibility and collaboration. They empower individuals, researchers, and organizations to explore, modify, and contribute to advancing technology. These tools draw upon the collective wisdom of diverse minds, offering a platform for experimentation, research, and customization.

Although they may lack the polish of commercial tools, open-source tools are inclusive: they put detection capabilities in the hands of many people, fostering innovation in the fight against deepfakes.

Detection Accuracy and User-Friendliness

Detection tools are frequently compared on their detection accuracy and their usability. Some emphasize being user-friendly for people with varying levels of technical knowledge.

Others claim to be accurate enough to detect even the most complex manipulations. As the craft of creating deepfakes improves, the delicate balance between accuracy and ease of use keeps shifting.
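Claims about detection accuracy are easier to compare when broken into standard metrics. In this sketch, "positive" means "flagged as a deepfake": precision measures how many flagged items were really fake, and recall measures how many fakes were caught. The labels in the example are made up.

```python
def detection_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = deepfake)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up example: 4 real clips (0) and 4 deepfakes (1)
truth = [0, 0, 0, 0, 1, 1, 1, 1]
flags = [0, 0, 0, 1, 1, 1, 0, 0]
print(detection_metrics(truth, flags))
```

In this toy example the detector is right 5 times out of 8 (accuracy 0.625) but catches only half of the fakes (recall 0.5), which is why a single accuracy figure can hide important weaknesses.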

Integration of Deepfake Detection Into Social Media

Integrating deepfake detection into social media and content platforms means examining uploaded content with algorithms that search images, videos, and audio for signs of manipulation. This integration acts as a shield, protecting the integrity of digital interactions and improving the reliability of user-generated content.
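On a platform, a detector’s score still has to be turned into an action. A minimal sketch, assuming a hypothetical detector that returns a manipulation probability between 0 and 1 and made-up thresholds: low scores pass, mid-range scores get a label, and high scores are held for human review rather than removed automatically.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "allow", "label", or "review"
    score: float


# Hypothetical thresholds; a real platform would tune these on validation data.
LABEL_THRESHOLD = 0.5
REVIEW_THRESHOLD = 0.9


def moderate(upload_id, score):
    """Map a detector's manipulation probability to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score for {upload_id} must be in [0, 1], got {score}")
    if score >= REVIEW_THRESHOLD:
        return Decision("review", score)
    if score >= LABEL_THRESHOLD:
        return Decision("label", score)
    return Decision("allow", score)


for upload_id, score in [("clip-1", 0.1), ("clip-2", 0.6), ("clip-3", 0.95)]:
    print(upload_id, moderate(upload_id, score).action)
```

Routing the highest-scoring cases to people instead of deleting them outright is one way to limit the harm of false positives.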


Ethical and Privacy Concerns

The emergence of deepfakes has raised ethical and privacy concerns as the boundaries between reality and digital artistry blur. The need for moral standards and laws to prevent the potential misuse of this technology is increasing as deepfakes become increasingly sophisticated.

Media Authenticity and User Privacy

It’s essential to strike a balance between user privacy and media authenticity. Deepfake detection should increase media transparency without violating the privacy of the people depicted in the content. This morally difficult situation challenges experts to create detection techniques that uphold both authenticity and privacy.

Implications of Deepfake Detection

Deepfake detection has serious implications for people’s right to privacy. Because algorithms scrutinize digital creations, detection also raises the question of whether it interferes with creativity and the right to modify content. Balancing the prevention of deception against creative freedom is a philosophical problem that needs careful thought.

Biases and Fairness Concerns In Detection Algorithms

As deepfake detection algorithms evolve, concerns about bias and fairness arise. Detection errors may affect some groups more than others, or the algorithms may reinforce existing biases. Fairness in algorithmic detection therefore becomes a moral imperative that requires thorough evaluation and improvement.
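One concrete way to evaluate fairness is to compare error rates across groups, for example the false positive rate (authentic content wrongly flagged as fake) for each group in a labeled test set. The records in this sketch are made up, and the group names are placeholders.

```python
from collections import defaultdict


def false_positive_rates(records):
    """records: iterable of (group, is_fake, flagged) tuples.
    Returns {group: share of authentic items that were wrongly flagged}."""
    flagged_real = defaultdict(int)
    total_real = defaultdict(int)
    for group, is_fake, flagged in records:
        if not is_fake:  # only authentic items can be false positives
            total_real[group] += 1
            flagged_real[group] += int(flagged)
    return {g: flagged_real[g] / total_real[g] for g in total_real}


# Made-up evaluation records: (group, is_fake, flagged_by_detector)
records = [
    ("group_a", False, False), ("group_a", False, False), ("group_a", False, True),
    ("group_a", False, False), ("group_b", False, True), ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False), ("group_b", True, True),
]
rates = false_positive_rates(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Here authentic content from `group_b` is wrongly flagged twice as often as content from `group_a` (0.5 versus 0.25), exactly the kind of disparity a fairness evaluation is meant to surface before a detector is deployed.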

A moral compass is essential as society weighs media reliability against user privacy. How deepfakes are used will depend on the decisions we make today, which set the moral direction for online interactions and keep authenticity a cornerstone without sacrificing basic rights.

Future Trends and Challenges

The future of deepfake technology is exciting and hard to predict because the technology keeps improving. Detection methods, in turn, continue to evolve to handle new kinds of media. The contest between deception and detection has become a high-stakes chess game that both sides work hard to win.

Evolution of Deepfake Technology and Adaptation of Detection Techniques

As new media types become easier to manipulate, detection methods must improve to keep pace. Experts must keep developing new ideas and expanding their analytical skills.

Because deepfake technology keeps developing, experts must keep getting better at spotting misinformation and stopping its spread. By continually devising new detection methods and keeping up with changes in how media is used, they can help truth prevail in this ongoing battle against deception.

Collaborative Efforts

To combat deepfakes, researchers, technology companies, and policymakers must work together and form a unified front against the digital tide of deception.

Together, they should build a strong ecosystem that combines detection innovations with regulatory frameworks that deter malicious intent. Such collaboration protects the digital landscape and helps ensure that technology’s promise is realized for the greater good.

As deepfake technology improves, detection methods must keep pace. Collaboration amplifies the effect of each contributor’s work, creating a strong barrier against the threat of deception.

Our decisions now set the stage for a future where honesty prevails, one that keeps our digital interactions private and helps make the world safer and more open.

Conclusion

Deepfakes pose a major challenge in the digital age, and deep learning-based media authentication is a key response to it. This article has shown how vital media authentication is for maintaining reputations, trust, and digital interactions. Deep learning is important for spotting manipulation and preserving trust because it can decode visual and auditory cues that humans often miss.

Collective action is required from academics, technologists, decision-makers, and the general public to combat deepfakes. Media authentication orchestrates the harmonic interaction between technology and reality, ensuring authenticity rises above deception.

In conclusion, media authentication is essential for upholding digital interactions, preserving trust, and protecting reputations. A more accurate and reliable digital environment depends on ongoing expert research, development, and awareness.

Types of Deepfakes: FAQs

- What are deepfakes, and why is media authentication important?

Deepfakes are manipulated digital media that look and sound real. They are often made with the help of artificial intelligence. Media authentication is a key way to check digital content’s authenticity and ensure that what we see and hear is real and hasn’t been tampered with.

- How does deep learning play a role in detecting deepfakes?

Deep learning, a type of artificial intelligence, is one of the most important ways to find deepfakes. It involves teaching complex neural networks to find patterns and outliers in digital media. Deep learning models can find small differences that might not be obvious to the human eye or ear. This makes them important tools in the fight against deception.

- What types of deepfakes can be detected using deep learning-based authentication?

Deep learning-based authentication can identify a variety of deepfakes, including face swaps, voice synthesis, and even multi-modal manipulations where both visual and auditory components are changed.

- How do deep learning models differentiate between authentic and manipulated content?

Deep learning models are trained on large datasets that include real and fake media. They learn to spot the distinctive patterns, distortions, and “artifacts” left behind when media is manipulated. The models can then flag possible deepfakes by comparing these patterns with what real media is expected to look like.
