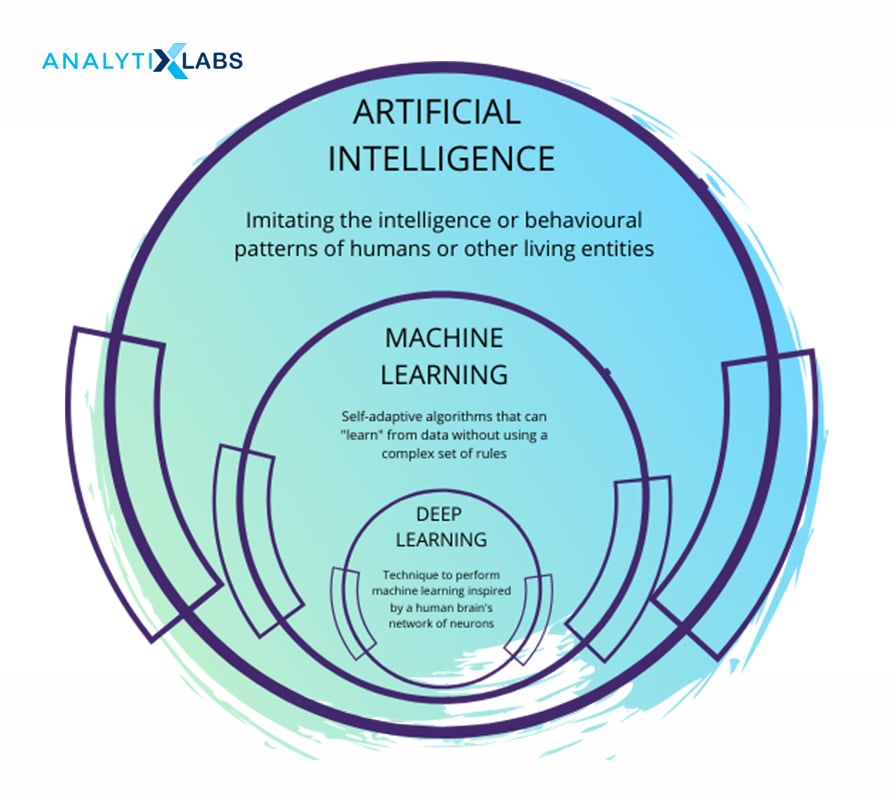

AI is reshaping daily life, from automating business processes to improving energy management through smart devices. It aims to mirror human cognition by training machines to learn continuously, reason, and improve themselves. Much of AI’s rapid evolution stems from advances in Machine Learning (ML), with Deep Learning (DL) as a cornerstone, which raises the question of Machine Learning vs Deep Learning.

With AI’s growing impact and seamless functionality, businesses across all industries are rapidly adopting AI-powered technology:

- PwC’s 2022 AI Business Survey shows that companies are unlocking AI for modernizing systems, business transformation, and better decision-making.

- Also, the McKinsey Technology Trends Outlook 2022 Report listed Applied AI and industrializing Machine Learning among the top 14 trends to boost productivity.

ML and Deep Learning underpin AI’s success. Many AI systems run on ML algorithms, and Deep Learning in particular relies on Artificial Neural Networks (ANNs). ANNs are loosely modeled on the neural networks of the human brain, which lets machines make intelligent decisions with little human guidance. Common real-world applications include:

- Voice Assistants and Speech Processing

- Automotive and Self-Driving Cars

- Image and Facial Recognition

- Text Mining and Translation

- Fraud Detection in BFSI

While both Machine Learning and Deep Learning are integral to AI, Deep Learning is a sub-domain of Machine Learning. It builds on one specific family of ML algorithms, Artificial Neural Networks (ANNs), which loosely emulate how the human brain processes information.

This article explores the fine differences between machine learning and deep learning, delving into their workings and concepts.

What is Machine Learning?

Machine Learning is a field of study in which computers learn to perform tasks from data, without being explicitly programmed for each one. An ML model can improve its results over time by learning from previous errors and discrepancies.

ML depends heavily on the quality of its input data. It encompasses a range of statistical methods, algorithms, models, and tools for making predictions.

The significant applications of ML are in retail, marketing, healthcare, finance, transportation, manufacturing, transcription, IT, cybersecurity, agriculture, and media.

Some business use cases include:

- Anomaly detection in network logs

- Sentiment analysis

- Email monitoring

- Dynamic pricing and product recommendation

- 24X7 customer chatbot support

- Weather forecasting

- Traffic alerts

- Stock market projection

- Social media analysis

- News Classification

How Does Machine Learning Work?

Before looking at the workflow, note that machine learning operates differently from deep learning. An ML project typically proceeds in five steps:

- Collects the historical data

This is the foundational step, in which up-to-date, reliable, high-quality data relevant to the problem statement is gathered.

The collected records and observations are then analyzed to identify trends and patterns.

- Prepares the data

It mainly involves three operations – data wrangling, data exploration, and data pre-processing for Machine Learning success.

After that, the resultant data is split into training (to learn from) and testing (to check prediction accuracy) datasets.

- Builds the ML model

Based on the problem statement, a predictive model is built using the suitable type of ML, such as Supervised, Unsupervised, Semi-supervised, or Reinforcement Learning.

- Trains and tests the model

The model uses an appropriate ML algorithm to learn from the training dataset. This trial-and-error process iterates until the model reaches the desired accuracy level.

Once the model is trained, it is run on the testing dataset, and different test cases are applied to check how accurate its predictions are.

- Deploys model for practical applications

Before final deployment in the real world, the model can be refined further through hyperparameter tuning.

Once the model’s performance reaches an acceptable accuracy, it is set up in a live environment to make predictions.
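The five steps above can be sketched in a few lines of Python. This is only a minimal illustration, assuming a made-up dataset of points on the line y = 2x + 1 and a simple least-squares model; the names `fit_line` and `predict` are hypothetical helpers, not part of any library.

```python
import random

# Step 1: collect data. Synthetic (hypothetical) points on the line y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in range(20)]

# Step 2: prepare the data, then split it into training and testing sets.
random.seed(0)
random.shuffle(data)
split = int(0.8 * len(data))
train, test = data[:split], data[split:]

# Step 3: build the model. Here: ordinary least squares for y = slope * x + intercept.
def fit_line(points):
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x

# Step 4: train on the training set, then test on data the model has not seen.
slope, intercept = fit_line(train)

def predict(x):
    return slope * x + intercept

test_error = sum(abs(predict(x) - y) for x, y in test) / len(test)

# Step 5: "deploy" the model by calling predict() on new inputs.
print(f"slope={slope:.2f} intercept={intercept:.2f} test_error={test_error:.4f}")
```

In a real project, each step is far more involved, but the train-then-test split shown here is the part that guards against judging a model only on data it has already seen.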

What is Deep Learning?

Deep learning uses neural network architectures to loosely simulate, end-to-end, how the human brain learns. Although it is a part of ML, the way Deep Learning works differs from classical ML.

It works on a large amount of quality data to generate high-precision results, considering the following prime goals –

- Achieve the intended purpose of the application

- Foster continual innovation to increase learning capabilities

- Optimize outcomes to enhance the accuracy level

DL has strengthened the following ML applications:

- Data analytics solutions for profitable decision-making

- Operations and sales for customer success

- Supply chain management in retail and eCommerce

- Cybersecurity intelligence in aerospace and defense

- Yield production for estimating agriculture metrics

- Medical imaging in the healthcare system

- Risk identification in BFSI

- Automated alerts for quality assurance in manufacturing

- Drug discovery and monitoring in pharmaceuticals

- Disaster, pandemic, and emergency management

How Does Deep Learning Work?

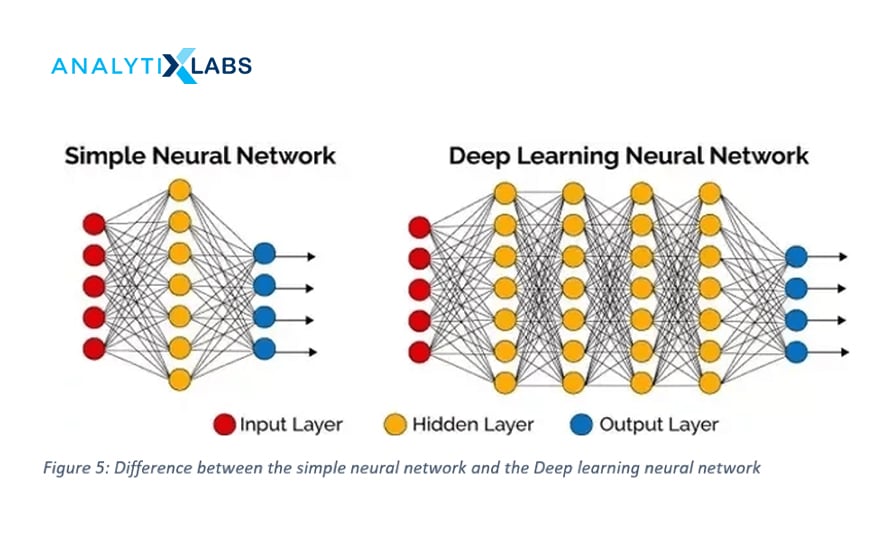

Deep Learning does not require a separate, manual feature extraction step. Instead, the input data passes directly through the layers of an ANN, which predicts the outcome. Let’s understand DL’s 5-step process:

- Defines the suitable network architecture type

A neural network architecture has three main kinds of layers: an input layer, one or more hidden layers, and an output layer.

Depending on the problem and the tasks to be performed, a suitable architecture is chosen, such as:

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs)

- Sequence to Sequence Models

- Feed-Forward Neural Networks (FFNNs)

- Radial Basis Function Networks (RBFNs)

- Modular Neural Networks (MNNs)

- Long Short-Term Memory Networks (LSTMs)

- Generative Adversarial Networks (GANs)

- Multilayer Perceptrons (MLPs)

- Self Organizing Maps (SOMs)

- Deep Belief Networks (DBNs)

- Restricted Boltzmann Machines (RBMs)

- Autoencoders

- Configures the model for training

This step draws on the Machine Learning workflow, since DL itself does not prepare or classify the training dataset.

Instead, the Deep Learning framework is configured to train the model, and the key training elements (such as the loss function, optimizer, and accuracy metric) are specified.

- Fits the model on the given training dataset

DL uses a fit function that takes the input data, the expected outputs, and the number of iterations (epochs) to perform over the training dataset.

The fit function evaluates the model’s accuracy as it runs for this fixed number of iterations. Capping the number of iterations can help reduce the risk of overfitting.

- Estimates model’s precision

Machine Learning and Deep Learning determine the accuracy level in a similar way.

DL applies varied, randomly sampled, and previously unseen testing datasets to validate the trained model’s effectiveness.

- Sets up the model in a real-time environment

This is the final deployment step, in which the fully trained model serves multiple business applications and keeps improving as new data arrives.
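To make the configure, fit, and evaluate steps concrete, here is a minimal sketch in plain Python. It assumes a single sigmoid neuron as a stand-in for a full network; the `TinyNeuron` class, its method names, and the data points are all hypothetical, chosen only to echo the configure/fit/evaluate pattern that deep learning frameworks commonly expose.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNeuron:
    """A single sigmoid neuron (hypothetical stand-in for a real network)."""

    def __init__(self, n_inputs, learning_rate=1.0):
        # Configure: pick the learning rate and start from zero weights.
        self.w = [0.0] * n_inputs
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        return sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)) + self.b)

    def fit(self, samples, epochs):
        # Fit: a fixed number of batch gradient descent passes
        # on the cross-entropy loss.
        n = len(samples)
        for _ in range(epochs):
            grad_w = [0.0] * len(self.w)
            grad_b = 0.0
            for x, y in samples:
                error = self.predict_proba(x) - y
                for i, xi in enumerate(x):
                    grad_w[i] += error * xi / n
                grad_b += error / n
            self.w = [wi - self.lr * gi for wi, gi in zip(self.w, grad_w)]
            self.b -= self.lr * grad_b

    def evaluate(self, samples):
        # Evaluate: fraction of samples classified correctly.
        correct = sum((self.predict_proba(x) >= 0.5) == bool(y) for x, y in samples)
        return correct / len(samples)

# Training data: the logical AND of two inputs.
train_set = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
# Unseen test points, made up for this sketch.
test_set = [((0.1, 0.0), 0), ((0.0, 0.9), 0), ((0.8, 0.0), 0), ((1.2, 1.1), 1)]

model = TinyNeuron(n_inputs=2, learning_rate=1.0)
model.fit(train_set, epochs=5000)
train_accuracy = model.evaluate(train_set)
test_accuracy = model.evaluate(test_set)
print(train_accuracy, test_accuracy)
```

A real deep network stacks many such neurons in layers and usually runs on a framework, but the sequence of configuring, fitting for a fixed number of epochs, and evaluating on unseen data stays the same.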


Difference Between Machine Learning & Deep Learning

Let’s look at 13 key factors that summarize the difference between ML and DL:

- Subject to AI

Progress in AI closely tracks advances in Machine Learning, and many recent ML innovations in turn rely on Deep Learning, mainly ANNs. Specifically, ML is a subdomain of AI and a superset of DL.

- Type of training dataset

Machine Learning typically needs structured training datasets because it depends on multiple data operations. In contrast, Deep Learning can work with both structured and unstructured training datasets.

- Handle data volume

Machine Learning operates on both small and large input data. However, Deep Learning primarily depends on Big Data, i.e., the millions of data points.

- Training and testing time

When comparing ML vs. Deep Learning, the training and testing times differ. ML models usually take much less time to train, although testing can take comparatively long for some algorithms (for example, k-nearest neighbors). For DL, it is the other way around: training the model takes a long time, but the testing phase is short.

- Hardware dependency

Because they often work with smaller data volumes, Machine Learning applications can usually run on standard CPU-based computer systems. In comparison, Deep Learning applications typically demand GPUs and robust hardware resources.

- Feature extraction

Machine Learning requires feature engineering by domain experts (human support). However, since Deep Learning is built on ANNs, it learns high-level features automatically.

- Troubleshooting strategy

The two approaches solve problems differently. ML breaks the problem down into sub-parts, resolves each one, and then combines the results into the outcome. Deep Learning, in contrast, works out the result end-to-end.

- Utilize algorithms

Overall, Machine Learning utilizes automated and statistical algorithms to generate predictions. In contrast, Deep Learning uses neural network algorithms to make accurate projections.

- Outcome interpretation

With Machine Learning, domain experts can set up, run, and interpret (in terms of cause and effect) the results fairly easily. However, its effectiveness may be limited.

In comparison, Deep Learning requires a dedicated setup and can produce high-quality results. However, it is hard to explain how it arrived at them.

- Output delivery

Machine Learning typically delivers its output in numerical form, such as a score or a class label. Deep Learning, on the other hand, can provide output in almost any format, like numbers, text, sound, etc.

- Data analysis mode

The data analysis approach in ML needs data analysts to select and examine algorithms. Deep Learning, in contrast, learns much of this on its own from the data it is trained on.

- Solve problem cases

Machine Learning best suits simple, straightforward, and less complex problem statements. In contrast, Deep Learning competently takes charge of complex and challenging cases.

- Human support

Machine Learning demands domain expert involvement in crucial steps. Deep Learning needs human support mainly during setup, and the rest of the pipeline runs with minimal staffing.


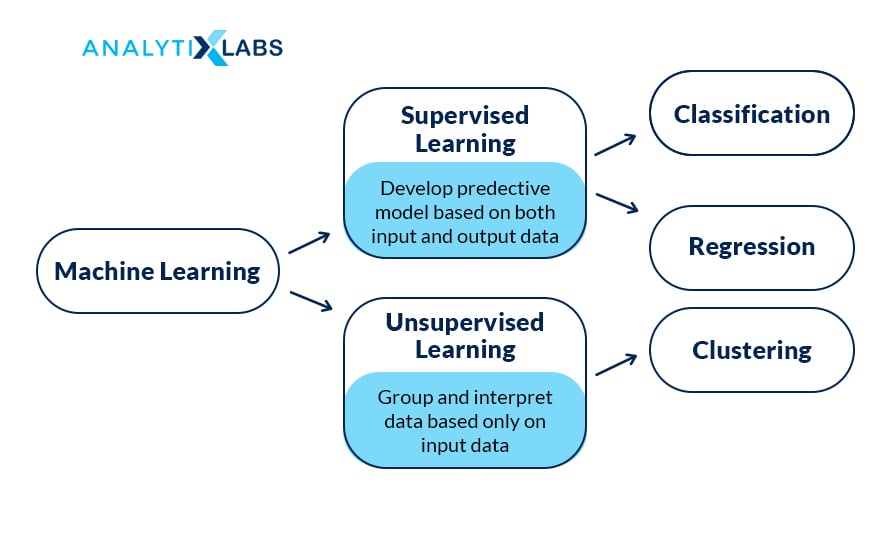

Types of Machine Learning

- Supervised Machine Learning:

In supervised learning, the machine is given labeled data: inputs paired with the ideal outputs for the model to learn from.

To understand deep learning vs machine learning, it helps to know how supervised learning makes a machine intelligent. The labeled data acts as a supervisor, setting the parameters the model uses to produce valid outputs for new data. Based on those outputs, you can judge whether the training data suits the model and gradually obtain correctly classified data for a particular task.

For example, you can prepare a labeled training set with symptoms as the input and the diagnosed disease as the output. The ML model succeeds if its output for new symptoms matches the correct diagnosis.
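The symptoms-to-diagnosis idea can be sketched with a tiny nearest-neighbor classifier. The symptom vectors and condition labels below are entirely made up for illustration (they are not medical data), and `predict` is a hypothetical helper.

```python
# Hypothetical labeled data: (fever, cough, rash) -> diagnosis label.
training_data = [
    ((1, 1, 0), "condition A"),
    ((1, 0, 1), "condition B"),
    ((0, 1, 0), "condition C"),
    ((0, 0, 0), "healthy"),
]

def hamming(a, b):
    """Number of positions where two symptom vectors differ."""
    return sum(x != y for x, y in zip(a, b))

def predict(symptoms):
    # 1-nearest neighbor: return the label of the most similar training example.
    _, label = min(training_data, key=lambda item: hamming(item[0], symptoms))
    return label

print(predict((1, 1, 0)))  # a combination seen in training
print(predict((0, 1, 1)))  # an unseen combination
```

The labels do the supervising here: every prediction is simply the label attached to the closest known example.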

- Unsupervised Machine Learning:

Unlike supervised learning, unsupervised learning only has inputs, with no labeled outputs. Based on the primary properties of the input, the machine forms a rough idea of the structure in the data and groups similar data points into categories. This process is called clustering.

The grouping is refined step by step, for example with the k-means clustering algorithm. One key advantage of this method is its flexibility. Unsupervised machine learning can adapt to different situations efficiently because it does not depend on strictly labeled data. Both machine learning and deep learning require this back-and-forth training to reach maximum accuracy.

For example, online shopping portals repeatedly display similar products based on your past shopping trends and recent product searches.
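As a rough sketch of how clustering finds such groups, here is a one-dimensional k-means in plain Python. The order values and the starting centroids are hypothetical, and `kmeans_1d` is an illustrative helper rather than a library function.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on numbers: assign each point, then move each centroid."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical order values: a group of small baskets and a group of large ones.
order_values = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centroids, clusters = kmeans_1d(order_values, centroids=[0.0, 5.0])
print(centroids, clusters)
```

No labels are supplied anywhere; the two groups emerge purely from how close the values are to each other.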

- Semi-Supervised Machine Learning:

Both supervised and unsupervised learning have some drawbacks. Supervised learning can be expensive and time-consuming for large data, whereas unsupervised learning has a limited application spectrum.

That’s why semi-supervised learning was introduced. Both labeled and unlabeled data are fed into the model, letting it learn the properties faster. New data can then follow the basic properties of a category while the model develops its own intuition.

This concept also hints at the difference between ML and DL. First, one type of learning is selected, and then the ML system can adopt any suitable deep learning approach to train the model better.

- Reinforcement Learning:

This method can be simply described as a trial-and-error approach. Here, an agent performs actions in an environment. Based on its performance, it receives either a reward or a penalty.

Just like in a game, the agent trains itself on right and wrong moves based on the rewards and penalties it receives. Q-learning is a popular algorithm for reinforcement learning in machine learning.
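Here is a minimal Q-learning sketch of that reward-and-penalty loop. It assumes a made-up environment (a five-cell corridor with the goal in the last cell) and, for simplicity, an agent that explores with purely random moves; the constants and the `step` function are hypothetical.

```python
import random

N_STATES = 5           # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)     # move left or move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor

def step(state, action):
    """Move within the corridor; reaching the goal yields a reward of 1."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

random.seed(0)
q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]

for _ in range(500):  # episodes
    state = 0
    while state != N_STATES - 1:
        a = random.randrange(len(ACTIONS))  # explore with random actions
        next_state, reward = step(state, ACTIONS[a])
        # Q-learning update: nudge the estimate toward reward + discounted best future value.
        target = reward + GAMMA * max(q[next_state])
        q[state][a] += ALPHA * (target - q[state][a])
        state = next_state

# Greedy policy: the best-valued action index in each non-goal cell.
policy = [max(range(len(ACTIONS)), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the learned values favor moving right in every cell, because only that direction leads toward the reward.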

As you get a basic idea of the primary AI learning methods, it’s time to learn about deep learning.

Types of Deep Learning

Knowing the latest approach to deep learning is essential before discussing deep learning vs machine learning. Different types of deep learning models are suitable for multiple complex applications.

- Feedforward Neural Network

The simplest form of neural network is the feedforward neural network, in which information flows in one direction only. It does not necessarily need a hidden layer. The network multiplies each input by a weight (which scales that input’s influence), adds a bias (which shifts the activation threshold), and produces an output without forming any loops. In its most basic form, the perceptron, the activation function is the Heaviside step function, which can only produce 0 or 1 as a prediction.
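A single neuron of this kind fits in a few lines. The sketch below assumes hand-picked weights and bias that make the neuron compute a logical AND; the values and function names are illustrative only.

```python
def heaviside(z):
    """Step activation: 1 when the weighted sum reaches the threshold, else 0."""
    return 1 if z >= 0 else 0

def perceptron(inputs, weights, bias):
    # Weighted sum of the inputs plus bias, passed through the step function.
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return heaviside(weighted_sum)

# Hand-picked (hypothetical) parameters that implement a logical AND.
AND_WEIGHTS, AND_BIAS = (1.0, 1.0), -1.5

for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, perceptron(pair, AND_WEIGHTS, AND_BIAS))
```

Data flows strictly from inputs to output, with no loop back, which is what makes the network feedforward.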

- Radial Basis Function Neural Network

A basic feedforward network with few or no hidden layers is limited in how accurately it can model complex data. A radial basis function network takes a different approach: its activation function depends on the Euclidean distance between a multivariate input and a stored center. It is mainly used for classification. Each neuron stores a particular prototype from the training set, and the network classifies a new input by measuring its similarity to these prototypes.
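The distance-based activation can be illustrated as follows. This sketch assumes a Gaussian radial basis function and two made-up prototypes; `rbf`, `classify`, and the prototype values are hypothetical.

```python
import math

def rbf(x, prototype, gamma=1.0):
    """Gaussian radial basis function of the squared Euclidean distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, prototype))
    return math.exp(-gamma * squared_distance)

# Each "neuron" stores one prototype (made-up values for this sketch).
prototypes = {"small": (1.0, 1.0), "large": (5.0, 5.0)}

def classify(x):
    # Pick the label whose prototype responds most strongly to the input.
    return max(prototypes, key=lambda label: rbf(x, prototypes[label]))

print(classify((1.2, 0.9)))
print(classify((4.5, 5.5)))
```

The activation is highest when the input sits exactly on a prototype and falls off as the distance grows, so classification reduces to finding the most similar stored example.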

- Multi-layer Perceptron

As the name suggests, there are multiple layers, and each neuron is connected to the nodes of the next layer. Backpropagation then feeds the prediction error back through the network to lower the loss. Gradually, the weights adjust automatically based on the difference between the expected outputs in the training data and the predicted values. The difference between ML and DL largely lies in these training approaches. Deep learning aims to perform as accurately as a human brain.
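To show what backpropagation actually computes, here is a minimal sketch for the smallest possible multi-layer network: one tanh hidden unit feeding one linear output. The network shape, the starting parameters, and the single training example are assumptions made for illustration.

```python
import math

def forward(params, x):
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)      # hidden layer
    return h, w2 * h + b2           # hidden activation, prediction

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2   # squared error

def backprop(params, x, y):
    """Gradient of the loss for each parameter, via the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y              # dLoss/dPrediction
    d_w2, d_b2 = d_yhat * h, d_yhat
    d_pre = d_yhat * w2 * (1 - h ** 2)  # error passed back through tanh
    return [d_pre * x, d_pre, d_w2, d_b2]

def train(params, x, y, lr=0.1, steps=200):
    for _ in range(steps):
        grads = backprop(params, x, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

initial = [0.5, -0.2, 0.8, 0.1]     # hypothetical starting weights
trained = train(initial, x=1.5, y=1.0)
print(loss(initial, 1.5, 1.0), loss(trained, 1.5, 1.0))
```

Each training step moves every weight slightly against its gradient, which is how the difference between expected and predicted values shrinks over time.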

- Convolutional Neural Network (CNN)

A CNN is a type of multi-layer perceptron modified to arrange its neurons in three dimensions. Each neuron processes a small part of the visual field, and the network gradually builds an understanding of the whole image. As a result, the model can learn the fine details of an image. CNNs commonly use ReLU as the activation function to introduce non-linearity: it passes positive values through unchanged and sets negative values to zero, which keeps the computation cheap.
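The idea that each neuron looks at only a small patch of the image can be sketched with a hand-written convolution followed by ReLU. The tiny image and the edge-detecting kernel below are made up for illustration; `conv2d_valid` is a hypothetical helper.

```python
def relu(x):
    """Keep positive values, replace negative values with zero."""
    return max(0.0, x)

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), then apply ReLU.

    As in most deep learning libraries, the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value only sees a kh x kw patch of the image.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(relu(total))
        output.append(row)
    return output

# A 3x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)
```

The feature map lights up only in the column where the brightness changes, showing how a small local filter picks out one kind of detail across the whole image.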

- Recurrent Neural Network

As the word recurrent suggests, some steps in this neural network are repeated. Both machine learning and deep learning share a common goal: to remember and learn from recent history. Here, the recurrent layers remember their output from the previous step and use it to predict the output for the next step. When a prediction is wrong, small corrections are made gradually during backpropagation. RNNs are useful for modeling sequential data.
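The "memory" of a recurrent layer can be shown with a single scalar cell that carries a hidden state from one step to the next. The weights here are arbitrary values picked for illustration, and `rnn_forward` is a hypothetical helper.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Scalar recurrent cell: each new state mixes the input with the previous state."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# The first input still influences the state two steps later.
print(rnn_forward([1.0, 0.0, 0.0]))
# The same values in a different order give a different final state.
print(rnn_forward([1.0, 0.0])[-1], rnn_forward([0.0, 1.0])[-1])
```

Because the previous state is fed back in at every step, the order of the inputs matters, which is exactly what sequential data such as text or time series requires.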

- Modular Neural Network

This neural network is a collection of multiple smaller networks, each of which computes part of the task independently. The modules do not interact with one another during operation, and their outputs are combined to form the final output of the modular neural network. When comparing ML vs deep learning, note that these deep learning approaches play a key role in model training for large and complex datasets.

- Sequence-to-Sequence Models

Remember recurrent neural networks? The sequence-to-sequence (S2S) model is a combination of two of them: an encoder that processes the inputs and a decoder that produces the outputs. In a plain RNN, the output length is tied to the input length, whereas in a sequence-to-sequence model the input and output lengths are independent. This basic concept is very useful for understanding machine learning vs deep learning.

Machine Learning and Deep Learning Future Trends

By now, you should understand the difference between machine learning and deep learning.

Machine learning and deep learning are two of the most important concepts behind today’s automated solutions. From data science to weather forecasting, and from adaptive technologies to image and text recognition, they have wide application in modern science.

The core difference between deep learning and machine learning is that DL is a subset of ML. Machine learning is the broader process of making a machine more intelligent, whereas DL is a specific family of neural-network-based methods for training it.

These two fields are strong enough to drive change in many sectors, including healthcare, business, and manufacturing. For instance, in healthcare, diseases could be detected in less time so that treatment can start sooner. In business, it would become easier to understand customer requirements, feedback, and market growth. Together, machine learning and deep learning could automate manufacturing with robots to a large extent.


FAQs

- Is Deep Learning or Machine Learning easier?

In practical terms, deep learning is a subset of machine learning. It is machine learning built on neural networks, which is why the terms are sometimes used interchangeably. However, their capabilities differ.

Basic machine learning models get progressively better at their specific tasks as they take in new data, but they still need some human intervention. If an algorithm returns an incorrect prediction, an engineer must step in and adjust it. A deep learning model can use its neural network to judge whether a prediction is accurate, with far less human assistance.

- Is Deep Learning Replacing Machine Learning?

Machine learning and deep learning are both categories of AI. In short, machine learning is AI that can adapt automatically with minimal human intervention. Deep learning is a subset of machine learning that uses artificial neural networks to simulate the learning process of the human brain.

Deep learning uses a complex structure of algorithms modeled on the human brain. This enables it to process unstructured data such as documents, images, and text. Machine Learning is a category of Artificial Intelligence, and Deep Learning is a particularly complex part of Machine Learning, so it extends ML rather than replacing it.

- Is Netflix Machine Learning or Deep Learning?

Netflix uses machine learning in almost all of its work to give users a seamless experience. The data Netflix receives is vast and includes both explicit data, such as a thumbs up or thumbs down for a film, and implicit data, such as the date and location where users watch specific content, how long they watch it, what device they use, whether they binge-watch it, their content preferences, their online behavior, etc. All this data can be used for machine learning that ultimately improves the bottom line, i.e., attracting more subscribers for Netflix.

- Should I learn Machine Learning before Deep Learning?

Whether you should learn machine learning before deep learning depends on what you want to do. Machine learning is a massive field, and you don’t need to understand all of it. But there are some machine learning ideas you should be aware of before you leap into deep learning.

You don’t strictly have to master these concepts first. You can also pick up most of them along the way while working on deep learning. But having some machine learning knowledge will benefit you a lot.

- What is an example of Deep Learning?

Deep learning applications are used across industries, from automated driving to medical devices.

Automated driving: Automotive researchers use deep learning to automatically recognize objects such as stop signs and traffic lights. In addition, deep learning is used to detect pedestrians, which helps reduce accidents.

Aerospace and defense: Deep learning is used to identify objects in satellite imagery, locate areas of interest, and determine safe or unsafe zones for troops.