In an earlier article, we looked at what data engineering is and the skills required to be a data engineer. If you have decided to become a Data Engineer, you need to get adept at the most popular big data analytics tools. Which tools are used in big data, and which one is best for data analytics? In this article, we compare the best available big data tools and detail their features and uses so that you can choose the tool that fits your needs. We also answer some of the common questions asked about big data analytics.

AnalytixLabs is India’s top-ranked AI & Data Science Institute and is in its tenth year. Led by a team of IIM, IIT, ISB, and McKinsey alumni, the institute offers a range of data analytics courses with detailed project work that helps an individual to be fit for professional roles in AI, Data Science, and Data Engineering. With a decade of experience in providing meticulous, practical, and tailored learning, AnalytixLabs has proficiency in making aspirants “industry-ready” professionals.

Let’s start by understanding:

What Is Big Data?

Big Data is primarily a huge volume of data that arrives at high speed and has different sources and formats. The three V’s that characterize data as big data are:

- High Volume of data (that can be the size of terabytes or petabytes)

- High Velocity, and

- Different Varieties of data sources and formats

Big data broadly falls under three main classes: Structured, Semi-Structured, and Unstructured Data.

Structured data can be used in its original form. It is highly specific and can be processed, stored, and retrieved in a fixed, predefined format. Examples are employee or student records in relational databases.

Unstructured data does not have a specific format or form and can collect different data types. It takes more processing power and time to analyze the unstructured data. Some examples are text files, logs, emails, survey reports, sensor data, videos, audio recordings, social media-generated data, images, and location coordinates.

Semi-Structured data is a combination of both structured and unstructured data. Such data carries tags or markers that describe its content, yet it does not fit the fixed rows and columns of a relational table, so it needs some preprocessing before it can be sorted and analyzed. Examples are extensible markup language (XML) files and radio-frequency identification (RFID) data.
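To make the three classes concrete, here is a minimal Python sketch. The records, field names, and log line are invented for illustration only; the point is how much structure the program can rely on in each case.

```python
import json
import xml.etree.ElementTree as ET

# Structured: a fixed set of fields in a fixed order, like a relational row.
employee_row = (101, "Asha", "Engineering")

# Semi-structured: self-describing tags or keys, but fields can vary per record.
xml_record = "<employee id='102'><name>Ravi</name><skill>SQL</skill><skill>Spark</skill></employee>"
json_record = '{"id": 103, "name": "Meera", "address": {"city": "Pune"}}'

# Unstructured: free text with no predefined fields.
log_line = "2021-03-01 10:15:02 user Ravi reported a login failure from the mobile app"

# Structured data is read by position; semi-structured data is parsed by tag or key.
root = ET.fromstring(xml_record)
skills = [s.text for s in root.findall("skill")]
city = json.loads(json_record)["address"]["city"]

# Unstructured data needs ad hoc processing, here a simple keyword search.
has_failure = "failure" in log_line.lower()

print(employee_row[1], skills, city, has_failure)
```

The structured row can be used as it is, the XML and JSON records must be parsed first, and the log line only yields information after some custom text processing.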

Traditional methods have limitations in handling such complex, massive datasets; hence we need specific tools and techniques to manage this kind of data, and these fall under Big Data analytics.

You may also like to read: Characteristics of Big Data | A complete guide

What Is Big Data Analytics?

Big data analytics is the process of extracting meaningful information, including hidden patterns, correlations, market trends, and customer preferences, by analyzing different types of big data sets. Big data is generally viewed in the light of its functionality, which comprises:

- Data Management

- Data Processing

- Data Analysis, and

- Scalability

Tools used in big data analytics are classified into the following broad spectrums:

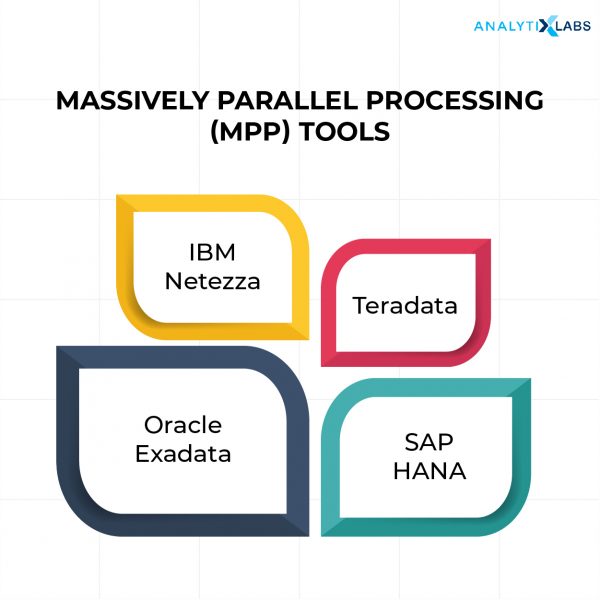

1. Massively Parallel Processing (MPP)

The databases based on MPP are Teradata, IBM Netezza, Oracle Exadata, SAP HANA, EMC Greenplum.

2. No-SQL Databases

The No-SQL databases are MongoDB, Cassandra, Oracle No-SQL, CouchDB, Amazon DynamoDB, Azure Cosmos DB, HBase, Neo4j, BigTable, Redis, RavenDB.

3. Distributed Storage and Processing Tools

The top big data processing and distribution tools are Hadoop HDFS, Snowflake, Qubole, Apache Spark, Azure HDInsight, Azure Data Lake, Amazon EMR, Google BigQuery, Google Cloud Dataflow, MS SQL.

4. Cloud Computing Tools

Some of the popular tools used in big data analytics under cloud computing are Amazon Web Services (AWS), of which we cover two flavors, Amazon S3 and Amazon Kinesis, along with Google Cloud, Microsoft Azure (including Blob Storage), and Databricks. Oracle, IBM, and Alibaba also have their respective cloud services.

Some of the other big data tools are Hive, Apache Spark, Apache Kafka, Impala, Apache Storm, Drill, ElasticSearch, HCatalog, Oozie, Plotly, Skytree, Lumify. Out of these, we will look at the important big data tools and their respective features under each of the above categories.

There is one more functionality called Data Integration. It is the process of combining data from the different sources available and putting it all together in a single, unified view. Data integration steps involve cleansing, ETL (i.e., extract, transform, and load), mapping, and transformation. A popular tool for Data Integration is Talend.
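The integration steps above can be sketched in a few lines of Python. This is only an illustration using the standard library, not how Talend works internally; the two sources, their field names, and the `customers` table are assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Two hypothetical sources with different field names and formatting.
crm_csv = "customer_id,full_name,city\n1, Asha Rao ,pune\n2,Ravi Kumar,DELHI\n"
web_events = [
    {"cust": "2", "name": "Ravi Kumar", "town": "Delhi"},
    {"cust": "3", "name": "Meera Shah", "town": "Mumbai"},
]

def extract():
    # Extract: read the raw records from each source.
    return list(csv.DictReader(io.StringIO(crm_csv))), web_events

def transform(crm_rows, event_rows):
    # Transform: map both sources onto one schema, cleanse whitespace and
    # letter case, and keep a single record per customer id.
    unified = {}
    for r in crm_rows:
        unified[int(r["customer_id"])] = (r["full_name"].strip(), r["city"].strip().title())
    for e in event_rows:
        unified.setdefault(int(e["cust"]), (e["name"].strip(), e["town"].strip().title()))
    return [(cid, name, city) for cid, (name, city) in sorted(unified.items())]

def load(records):
    # Load: write the unified records into a single target table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()
    return conn

conn = load(transform(*extract()))
unified_view = conn.execute("SELECT id, name, city FROM customers ORDER BY id").fetchall()
print(unified_view)
```

The customer that appears in both sources ends up as one cleansed row, which is the "single, unified view" that data integration aims for.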

Talend is a unified platform that simplifies and automates big data integration. It ingests data from almost any source, and the integrated preparation functionality ensures that the data is in a usable form from the first day itself.

Features of Talend are:

- Talend offers separate products for each solution, including data preparation, data quality, application integration, data management, and big data.

- All these solutions are provided under one umbrella that allows for the customization of the solution as per the requirements.

- Talend provides open-source tools.

- It accelerates data movement in real time.

- Talend streamlines DevOps (development and operations) processes and helps Agile teams speed up big data projects.

- It partners with every major cloud provider and data warehouse vendor, such as AWS, Microsoft Azure, Google Cloud, Snowflake, and Databricks.

Moving on to the other big data tools…

Massively Parallel Processing (MPP) Tools

Massively Parallel means employing a large number of computer processors (or separate computers) to perform a set of coordinated computations in parallel.

In an MPP system, the data and the processing power are split among several different servers (also called processing nodes), each of which is independent. The servers are separate in the sense that they do not share an operating system or memory.

This way, an MPP system can scale horizontally by adding more nodes to perform the task, rather than scaling vertically by upgrading individual servers to more expensive ones.

So, MPP is an architecture designed to have multiple processors handle coordinated (or homogeneous) processing tasks in parallel. An MPP system can have 200 or more processors, all interconnected by a high-speed network. The nodes communicate by passing messages to each other.
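As a rough illustration of this idea, the Python sketch below splits a dataset across a few "nodes", lets each one compute on its own slice, and combines the small partial results. It is a toy model: threads stand in for independent servers, and the function names and sample data are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows, n_nodes):
    # Each node owns its own slice of the data; nothing is shared.
    return [rows[i::n_nodes] for i in range(n_nodes)]

def node_task(local_rows):
    # Every node runs the same computation on its local data only.
    return sum(amount for _, amount in local_rows), len(local_rows)

def parallel_average(rows, n_nodes=4):
    parts = partition(rows, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(node_task, parts))
    # The coordinator only receives small partial results (the "messages").
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count

# Hypothetical sales rows: (row id, amount).
sales = [(i, float(i % 10)) for i in range(1000)]
avg = parallel_average(sales)
print(avg)
```

Adding capacity in this model means raising `n_nodes`, which mirrors horizontal scaling: more workers on smaller slices rather than one larger machine.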

Teradata

Teradata is one of the most popular Relational Database Management Systems (RDBMS), developed by the company of the same name.

- It is a massively parallel open processing system for developing large scale data warehousing applications.

- It is highly scalable and capable of handling large volumes of data (more than 50 petabytes of data).

- It offers support to multiple data warehouse operations at the same time to different clients.

- It is both linearly and horizontally scalable. It can scale up to a maximum of 2048 nodes (i.e., by adding nodes) that increase system performance.

- It can run on Linux, UNIX, and Windows server platforms.

- It is based on the MPP architecture, which divides a large workload into smaller tasks distributed across the entire system. All these smaller tasks execute in parallel to ensure the work completes quickly. This is often described as unlimited parallelism.

- Teradata can connect to channel-attached systems such as a mainframe or network-attached systems.

- Teradata supports standard SQL to interact with data stored in the database. It also provides its own extension.

- It has many robust load and unload utilities to import data into and export data from the Teradata system, such as FastLoad, MultiLoad, FastExport, and TPT.

- Teradata has a low cost of ownership. It is easy to set up, maintain, and administer.

- It automatically distributes data across processors (into the disks) evenly without any manual intervention.

- All of its resources: Teradata Nodes, Access Module Processors (AMPs), and the associated disks work independently. Its architecture hence is also known as Shared Nothing Architecture.

- Teradata has a very mature parallel optimizer. It can handle up to 64 joins in a query!
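The automatic, even distribution of rows across processors mentioned in the list above can be pictured as hashing each row's key to choose a processor. The sketch below is an assumption-laden illustration: it uses MD5 as a stand-in hash, and `amp_for`, the `order_id` key, and the four AMPs are hypothetical, not Teradata's actual hashing algorithm or configuration.

```python
import hashlib
from collections import Counter

def amp_for(key_value, n_amps):
    # Hash the row's key and map it to one of the AMPs.
    # MD5 is only a stand-in here, not the hash Teradata uses.
    digest = hashlib.md5(str(key_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % n_amps

n_amps = 4
rows = [{"order_id": i, "amount": i * 1.5} for i in range(10_000)]

# Count how many rows land on each AMP.
placement = Counter(amp_for(r["order_id"], n_amps) for r in rows)
print(sorted(placement.items()))
```

Because the hash spreads keys roughly uniformly, each AMP receives close to a quarter of the rows without anyone assigning them by hand, and the same key always maps to the same AMP.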

IBM Netezza

The Netezza Performance Server data warehouse system includes SQL that is known as IBM Netezza Structured Query Language (SQL). We can use SQL commands to create and manage the Netezza databases, user access, and permissions for the database. It can also be used to query and modify the contents of the databases.

- IBM Netezza, which is part of IBM PureSystems, is an expert integrated system: it has built-in expertise, is integrated by design, and simplifies the user experience.

- It has the same key design principles of simplicity, speed, scalability, and analytics that are fundamental to Netezza appliances.

- Netezza designs and markets high-performance data warehouse appliances and advanced analytics applications for uses including enterprise data warehousing, business intelligence, predictive analytics, and business continuity planning.

- It is simple to deploy, comes optimized, and requires no tuning and minimal ongoing maintenance.

- It is also cost-effective, with a low cost of ownership.

Oracle Exadata

Exadata, designed by Oracle, is a database appliance that supports various database systems such as the transactional database system: OLTP (On-line Transaction Processing) and the analytical database system: OLAP (Online Analytical Processing).

- It is an easy-to-deploy solution for hosting the Oracle Database.

- It simplifies digital transformations, enhances the database performance, and reduces the cost.

- It provides users with enhanced functionality that relates to enterprise-class databases and their respective workloads.

- Its clusters allow for consistent performance that increases the throughput. As load increases on the cluster, the performance remains consistent by utilizing inter-instance and intra-instance parallelism.

- It is suited for data warehousing and consolidation of the database.

SAP HANA

SAP High-Performance ANalytical Appliance (SAP HANA) is an in-memory database developed by SAP. Its primary function as a database server is to store and retrieve data. It is used to analyze the large datasets on a real-time basis that are present entirely in its memory.

- SAP HANA is a combination of HANA database, Data Modeling, HANA Administration, and Data Provisioning in one single suite.

- It is deployable as an on-premise appliance or on the cloud.

- This platform is best suited for both real-time analytics and developing and deploying real-time applications.

- It is based on multi-core architecture in a distributed system environment and on row-column type of data-storage in a database.

- It uses an in-memory Computing Engine (IMCE) to process and analyze large amounts of real-time data.

- Like Teradata, SAP HANA reduces the cost for IT companies to store and maintain large data volumes. It is very cost-effective to own.

MPP databases store data in tabular or columnar format, which allows tasks or queries to be processed in parallel; however, MPP has a limitation. MPP systems do not support unstructured data, and even structured data from databases such as MySQL or PostgreSQL requires some processing to fit the MPP framework. This leads us to the second type of database: the No-SQL database.

NoSQL Data Stores Tools

No-SQL, short for “Not only SQL”, is a type of database that handles unstructured data. It is a non-relational database design that provides flexible and dynamic schemas for the storage and retrieval of such data. A non-relational database means the data is not tabular and is not queried primarily with structured query language (SQL).

A quick summary differentiating between SQL and No-SQL databases:

| | SQL | No-SQL |
| --- | --- | --- |
| Type of data | SQL is used for structured data having multi-row transactions. | No-SQL is used for unstructured data such as documents or JSON. |
| Type of databases | SQL databases are relational. | No-SQL databases are non-relational. |
| Schema | SQL uses structured query language and has a predefined schema. | No-SQL databases have dynamic schema. |
| Form of data storage | SQL database stores data in tabular form. | No-SQL databases store data in documents, key-value, graph, or wide-column form. |
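The schema difference in the table can be seen in a small Python sketch. It uses the standard-library `sqlite3` module for the relational side and plain dictionaries as a stand-in for a document store; the `users` table and the field names are made up for illustration.

```python
import json
import sqlite3

# Relational: the schema is declared up front and every row must fit it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO users VALUES (1, 'Asha')")
try:
    # The table has no 'twitter' column, so this insert is rejected.
    conn.execute("INSERT INTO users (id, name, twitter) VALUES (2, 'Ravi', '@ravi')")
    schema_rejected = False
except sqlite3.OperationalError:
    schema_rejected = True

# Document style: each record carries its own structure.
documents = [
    {"_id": 1, "name": "Asha"},
    {"_id": 2, "name": "Ravi", "twitter": "@ravi", "tags": ["sql", "spark"]},
]
with_twitter = [d["name"] for d in documents if "twitter" in d]

print(schema_rejected, with_twitter, json.dumps(documents[1]))
```

In the relational case the new field would first require a schema change, while the document collection simply accepts a record with extra fields.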

The prominent big data analytics tools that use non-relational databases are MongoDB, Cassandra, Oracle No-SQL, and Apache CouchDB. We’ll dive into each one of these and cover their respective features.

MongoDB

Founded in 2007, MongoDB is an open-source No-SQL database.

- It is easy to install and use. You do not need a coding background to work with this database; however, knowledge of JavaScript Object Notation (JSON) is required to understand the tree structure of its collections.

- It supports heterogeneous data and is schema-less. The dynamic schemas mean we can change the blueprint (i.e., the data schema) without changing the existing data.

- The database is developed for high performance, high availability, and easy scalability.

- It is based on the key-value pair (i.e., on BSON format, a binary form of JSON) for document storage and does not use any complex joins.

- MongoDB keeps frequently accessed data cached in its internal memory, which speeds up data retrieval from the database.

- It is flexible as we can easily change columns or fields without impacting the existing rows or application performance.

- MongoDB is manageable and user-friendly, meaning this database does not need a data administrator; hence it can be used both by the developers and the administrators.

- Unlike a traditional relational database (RDBMS), MongoDB is horizontally scalable. Capacity grows by adding servers rather than by adding CPU and memory to the same server, which reduces hardware and maintenance costs. Hence, it can scale quickly with the business.

- One of the reasons why MongoDB is popular among developers is that it supports many platforms, including Windows, macOS, Ubuntu, Debian, and Solaris.

- The database added a new feature to its list of attributes called MongoDB Atlas. It is a global cloud database service that lets you deploy a fully managed MongoDB across AWS, Google Cloud, and Azure, with built-in automation for resource and workload optimization that reduces the time required to manage the database.

- Application Areas: MongoDB is fit for those businesses where the data’s blueprint is not clearly defined or updated frequently. It’s used in various domains: Internet of Things (IoT), Time Series, businesses using real-time analytics, content management systems, mobile apps, eCommerce, payment processing, personalization, catalog, and gaming.

- Key Customers: Adobe, AstraZeneca, Barclays, BBVA, Bosch, Cisco, CERN, eBay, eHarmony, Elsevier, Expedia, Forbes, Foursquare, Gap, Genentech, HSBC, Jaguar Land Rover, KPMG, MetLife, Morgan Stanley, OTTO, Pearson, Porsche, RBS, Sage, Salesforce, SAP, Sega, Sprinklr, Telefonica, Ticketmaster, Under Armour, Verizon Wireless

Apache Cassandra

Cassandra was initially developed at Facebook to power its inbox search feature and was released as open source in 2008. In 2009, it became an Apache Incubator project.

- It is a free, open-source No-SQL database that delivers high availability.

- Cassandra is a peer-to-peer distributed system that comprises a cluster of nodes in which any node can accept a read or write request (i.e., each node has some role)

- This “masterless” feature of Cassandra allows it to offer high scalability. All the nodes are homogenous, which helps create operational simplicity; when new machines are added, it does not interrupt the other applications. Hence, it is easy to scale up to more extensive database architecture.

- Cassandra supports the replication of data to multiple data centers and keeps multiple copies in various locations.

- The database is designed to have a distributed system to deploy a larger number of nodes across the various data centers.

- Its design enhances read and write processing commands and can handle massive data very quickly.

- It is also good at writing time-based log activities, error logging, and sensor data.

- Cassandra is fault-tolerant; that is, it has no single point of failure. In case a node fails, it can be replaced with minimal impact on production performance, because the data is stored redundantly.

- It provides data protection through its commit log design, which ensures that the data is not lost. Along with this, it has data backup and restore features.

- It has introduced Cassandra Query Language (CQL), a simple interface to interact with the database.

- Even though Cassandra is not ACID compliant, CQL supports SQL-like statements, including DDL, DML, and SELECT statements.

- Cassandra supports Hadoop integration with MapReduce and also with Apache Hive and Apache Pig.

- Application Areas: If you want to work with SQL-like data types on a No-SQL database, Cassandra is a good choice. It is a popular pick in the IoT, fraud detection applications, recommendation engines, product catalogs and playlists, and messaging applications, providing fast real-time insights.

- Key Customers: Apple, Netflix, Uber, ING, Intuit, Fidelity, NY Times, Outbrain, BazaarVoice, Best Buy, Comcast, eBay, Hulu, Sky, Pearson Education, Walmart, Microsoft, Macy’s, McDonald’s, Macquarie Bank.
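The masterless design and replication described above are commonly explained with a token ring: every node owns a position on the ring, and a row is stored on the first few nodes found clockwise from the row key's position. The Python sketch below is a simplified model under stated assumptions: it uses MD5 as a stand-in hash and one token per node, and the `Ring` class and node names are hypothetical.

```python
import bisect
import hashlib

class Ring:
    def __init__(self, nodes, replication_factor=3):
        self.rf = replication_factor
        # Each node owns one token on the ring (a simplification).
        self.tokens = sorted((self._hash(n), n) for n in nodes)

    @staticmethod
    def _hash(value):
        # Stand-in hash for the sketch, not Cassandra's partitioner.
        return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")

    def replicas(self, partition_key):
        # Walk clockwise from the key's token and take the next `rf` nodes.
        start = bisect.bisect_right(self.tokens, (self._hash(partition_key), ""))
        return [self.tokens[(start + i) % len(self.tokens)][1] for i in range(self.rf)]

ring = Ring(["node-a", "node-b", "node-c", "node-d", "node-e"])
owners = ring.replicas("user:42")
print(owners)
```

Any node can run this same calculation, so any node can accept a request and forward it to the replicas, and the loss of one replica still leaves other copies of the row available.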

Oracle No-SQL

Oracle first released its No-SQL database in 2011. In 2018, it also released a cloud service for unstructured data, called Oracle Autonomous NoSQL Database Cloud (OANDC).

- Oracle No-SQL database is a multi-model, scalable, distributed No-SQL database.

- It is designed to provide highly reliable, flexible, and available data management across a configurable set of storage nodes.

- It supports JSON (i.e., document), table (i.e., relational), and key-value APIs. Users can either run it on-premises or use it as a cloud service with storage-based provisioning.

- Like MongoDB, Oracle also offers a fully managed offering, the NoSQL Database Cloud Service, for applications that require low-latency responses, flexible data models, and elastic scaling for dynamic workloads.

- It supports both fixed schema and schema-less deployment with the ability to interoperate between them.

- It supports integration with Hadoop and also provides support for SQL statements such as DML and DDL commands.

- Application Areas: Used for the Internet of Things (IoT), document or content management, fraud detection, data pertaining to customer views to suggest purchases based on the customer’s past interactions, game coordination.

- Key customers: Large credit card company, Airbus, NTT Docomo, Global rewards company

Apache CouchDB

Released in 2005, this multi-master database became an Apache project in 2008.

- Implemented in Erlang, CouchDB is an open-source, cross-platform, document-oriented No-SQL database that can run on a single node.

- The schema of the data is not a concern as the data is stored in a flexible document-based structure.

- CouchDB also allows a single logical database server to run on any number of servers.

- It uses multiple formats and protocols to store, transfer and process the data.

- The focus of CouchDB is on the web as it uses the HTTP protocol to access the documents. The HTTP-based REST API allows communication with the database easily.

- The structure of HTTP resources and methods such as GET, PUT, and DELETE is easy to understand, making document insertion, update, retrieval, and deletion relatively easy.

- CouchDB stores data (or documents) in JSON format and uses JavaScript as its query language using MapReduce.

- It provides access to the data through the Couch Replication Protocol, which can be used to copy, share, and synchronize data between databases and machines.

- Like Cassandra, CouchDB also offers distributed scaling with fault-tolerant storage.

- Unlike MongoDB and Cassandra, CouchDB is ACID compliant.
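To show what the HTTP-based access looks like, the sketch below builds (but does not send) the three basic document requests with Python's standard `urllib`. The server address, the `articles` database, the document id, and the revision value are all placeholders assumed for the example.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5984"  # assumed address of a local CouchDB server
DB = "articles"                     # hypothetical database name

def put_document(doc_id, doc):
    # PUT /{db}/{id} with a JSON body creates or updates a document.
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/{DB}/{doc_id}", data=body, method="PUT",
        headers={"Content-Type": "application/json"})

def get_document(doc_id):
    # GET /{db}/{id} retrieves the document.
    return urllib.request.Request(f"{BASE_URL}/{DB}/{doc_id}", method="GET")

def delete_document(doc_id, rev):
    # DELETE needs the document's current revision.
    return urllib.request.Request(f"{BASE_URL}/{DB}/{doc_id}?rev={rev}", method="DELETE")

requests_to_send = [
    put_document("big-data-tools", {"title": "Big data tools", "tags": ["nosql"]}),
    get_document("big-data-tools"),
    delete_document("big-data-tools", "1-abc"),  # placeholder revision id
]
for req in requests_to_send:
    print(req.get_method(), req.full_url)
```

Against a running server, each request could be sent with `urllib.request.urlopen(req)`; the sketch stops short of that so it runs without a database.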

Distributed Storage and Processing Tools

A distributed storage system is an infrastructure that splits data across multiple physical servers and more than one data center. It typically takes the form of a cluster of storage units, with a mechanism for data synchronization and coordination between cluster nodes.

On the other hand, distributed processing refers to computer systems that use more than one computer (or processor) to run an application. This includes parallel processing, in which a single computer uses more than one CPU to execute programs.

The tools that fall under this category are:

Hadoop (Cloudera)

Hadoop is an Apache open-source framework. Written in Java, Hadoop is an ecosystem of components that are primarily used to store, process, and analyze big data. The USP of Hadoop is that it enables multiple types of analytic workloads to run on the same data, at the same time, and on a massive scale on industry-standard hardware.

Cloudera Distribution Hadoop (CDH) is an open-source distribution of Apache Hadoop, offered by Cloudera Inc, a US-based software company. CDH is also a distribution platform for many other Apache products, including Spark, Hive, Impala, Kudu, HBase, and Avro.

The data in Hadoop is stored on inexpensive commodity servers that run as clusters. It allows distributed processing of large datasets across the clusters of computers using simple programming models.

The Hadoop application works in an environment that provides distributed storage and computation across the clusters of computers. It is designed to scale from a single server to thousands of machines, where each offers local computation and storage. Hadoop processes the data in parallel and not on a sequential or serial basis.

Hadoop uses:

- Hadoop Distributed File System (HDFS) for storage of the data. Its distributed file system runs on commodity hardware.

- MapReduce, a parallel programming model for efficient processing of data on large clusters (or nodes) of commodity hardware in a reliable and fault-tolerant manner.

- Hadoop Common, the Java libraries and utilities required to enable other modules to work with Hadoop.

- Hadoop YARN, a framework for job scheduling and cluster resource management.

Features of Hadoop are:

- It runs on all major platforms as it is based on Java.

- It is highly fault-tolerant and is designed to deploy on low-cost hardware, making Hadoop cost-effective as well.

- Hadoop ensures the high availability of the data.

- It allows for faster data processing and also brings flexibility in the processing of the data.

- Hadoop has a replication mechanism. It creates replication of data in the cluster. This also ensures reliability in the data as the data is stored reliably on the cluster machines despite any machine failures.

- Hadoop’s libraries are designed to detect and handle failures at the application layer, and hence, it does not rely on hardware to provide fault-tolerance and high availability.

- Hadoop is highly scalable. We can add more servers (i.e., horizontal scaling) or increase the hardware capacity of the cluster machines (i.e., vertical scaling) to achieve high computation power. Servers can be added to or removed from the cluster dynamically, and Hadoop continues to operate without interruption.
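The MapReduce programming model listed among Hadoop's components can be illustrated with the classic word count. The sketch below runs in a single Python process and only imitates the three phases; the input splits are made-up sample lines, and a real Hadoop job would distribute these phases across cluster nodes.

```python
from collections import defaultdict

def map_phase(split):
    # Map: emit a (word, 1) pair for every word in this input split.
    for line in split:
        for word in line.lower().split():
            yield word, 1

def shuffle(mapped_pairs):
    # Shuffle: group all values that share the same key.
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    # Reduce: combine the values of each key into one result.
    return {key: sum(values) for key, values in groups.items()}

# Each inner list stands for an input split handled by a separate mapper.
splits = [["big data tools", "big data analytics"], ["data engineering tools"]]
mapped = [pair for split in splits for pair in map_phase(split)]
word_counts = reduce_phase(shuffle(mapped))
print(word_counts)
```

Because each split is mapped independently and each key is reduced independently, both phases can be spread over many machines, which is what makes the model suitable for large clusters.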

Spark (Databricks)

Spark, a project of the Apache Software Foundation, was introduced to speed up Hadoop’s computational processing. Databricks is an open and unified data analytics platform for data engineering, data science, machine learning, and analytics.

- Apache Spark is a lightning-fast unified analytics engine for big data and machine learning.

- Spark is an open-source, cluster computing, distributed processing system designed for faster computation.

- The uniqueness of Spark is it includes both processing of the data and applying AI algorithms on the data under one roof.

- It builds on the ideas of Hadoop’s MapReduce and extends the MapReduce model to more types of computation, including interactive queries and stream processing.

- Spark is not dependent on Hadoop as it has its own cluster management. When it runs with Hadoop, Spark uses it mainly for storage.

- Its main characteristic is in-memory cluster computing, which can run an application in a Hadoop cluster up to 100 times faster in memory and up to 10 times faster when running on disk.

- Spark’s in-memory data processing is much faster than the disk-based processing of MapReduce. It stores intermediate processing data in memory, which reduces the number of read and write operations to disk.

- Spark was designed to cover a wide range of workloads such as batch applications, iterative algorithms, interactive queries, and streaming.

- It reduces the management burden of maintaining separate tools.

- With the help of Spark, you can write applications in different languages. It offers built-in APIs in Java, Scala, and Python.

- Apart from supporting MapReduce, Spark also supports SQL queries, data streaming, machine learning, and graph algorithms.

- Spark also works with Hadoop’s HDFS, OpenStack, and Apache Cassandra, both on-premises and in the cloud.

Snowflake

Snowflake is a cloud-based data-warehousing system, positioned by the company as a data platform built for the cloud. It offers a cloud-based data storage and analytics service, provided as Software-as-a-Service (SaaS).

- Snowflake database architecture is designed as an entirely new SQL database engine to work with cloud infrastructure.

- Working with Snowflake, we do not need to install the software. We just require SnowSQL to interact with its cloud database server.

- It provides access to the database and tables by Web console, ODBC, JDBC drivers, and third-party connectors.

- Snowflake supports the standard SQL commands and analytics queries.

- It is easy to scale, easy to set up, can handle unstructured data, and supports large datasets.

- The queries are executed very fast on Snowflake architecture.

- It supports sharing data between different Snowflake accounts.

- Snowflake also allows importing semi-structured data such as JSON, XML, Avro, ORC, and Parquet.

- It provides special column types, namely Variant, Array, and Object, that store semi-structured data.

Apache Storm

Storm is another open-source offering by Apache Software Foundation. It is a free and distributed real-time computation system.

- Storm distributes its real-time workload across multiple nodes according to the topology configuration, and it works well with the Hadoop Distributed File System (HDFS).

- Storm is fast. It has been benchmarked at processing over one million 100-byte tuples per second per node!

- Storm has horizontal scalability, and it guarantees that each unit of data will be processed at least once.

- It has a built-in fault-tolerant processing system, and it restarts automatically after a crash.

- It is written predominantly in the Clojure programming language but can be used with any programming language.

- It integrates with the queueing and database technologies.

- Storm works with a Directed Acyclic Graph (DAG) topology and gives the output in JSON format.

- Its topology consumes unbounded streams of data and processes those streams in arbitrarily complex ways, repartitioning those streams between each computation stage.

- The use cases of Storm are real-time analytics, log processing, online machine learning, continuous computation, distributed Remote Procedure Call (RPC), and ETL.
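The idea of a topology that consumes an unbounded stream stage by stage can be sketched with Python generators. This is not the Storm API; the `sentence_spout`, `split_bolt`, and `count_bolt` names borrow Storm's spout and bolt vocabulary, and the sample sentences are invented.

```python
from collections import Counter
from itertools import islice

def sentence_spout():
    # Unbounded source: keeps emitting sentences forever, like a live feed.
    sentences = ["storm processes streams", "streams never end"]
    i = 0
    while True:
        yield sentences[i % len(sentences)]
        i += 1

def split_bolt(stream):
    # First processing stage: split each sentence into words.
    for sentence in stream:
        for word in sentence.split():
            yield word

def count_bolt(stream):
    # Second stage: keep a running count and emit an update per word.
    counts = Counter()
    for word in stream:
        counts[word] += 1
        yield word, counts[word]

# Wire the stages together: spout -> split -> count.
topology = count_bolt(split_bolt(sentence_spout()))
first_updates = list(islice(topology, 6))
print(first_updates)
```

The pipeline never finishes on its own; the example takes only the first six updates, which is how a streaming system differs from a batch job that waits for all the input.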

Cloud Computing Tools

When it comes to storing and accessing data, Cloud Computing has been nothing less than a revolution. As the name suggests, the information is stored in a virtual space or cloud and accessed remotely using the internet. The user can be anywhere and need not be in a specific place to retrieve the data. As you can imagine, cloud computing is a convenient tool for viewing and querying a large volume of big data.

We shall look at the features of some of these in detail below.

Amazon Web Services

There are two popular offerings by Amazon under its cloud services, which are Amazon S3 and Amazon Kinesis.

Amazon S3, which stands for Simple Storage Service, is a storage service. In short, it is storage for the internet.

- It has a simple web service interface that can be used to store and retrieve any amount of data, at any time and from anywhere on the web.

- S3 has been designed to make web-scale computing easier for developers.

- Amazon provides developers with access to the same highly scalable, reliable, fast, inexpensive data storage infrastructure that Amazon uses to run its own global network of websites.

- It is intentionally built with a minimal feature set which focuses on simplicity and robustness.

- S3 works by creating buckets, which are the fundamental containers for data storage. Each bucket can hold many objects, and each object can be up to five terabytes in size. Each of the objects is stored and accessed using a unique developer-assigned key.

- S3 allows both uploading and downloading of data. Permissions can be granted to users for uploading and downloading, so access stays authenticated.

- It uses the standard interfaces: REST and SOAP to work with any internet-development toolkit.
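The bucket, object, and key model described above can be pictured with a small in-memory toy. This is not the AWS SDK and makes no network calls; the `Bucket` class, its method names, and the bucket and key names are assumptions invented for the sketch.

```python
MAX_OBJECT_BYTES = 5 * 1024**4  # five terabytes, the per-object limit cited above

class Bucket:
    """Toy stand-in for a bucket: a container mapping keys to objects."""

    def __init__(self, name):
        self.name = name
        self._objects = {}

    def put_object(self, key, data: bytes):
        if len(data) > MAX_OBJECT_BYTES:
            raise ValueError("object exceeds the per-object size limit")
        # The developer-assigned key uniquely identifies the object.
        self._objects[key] = data

    def get_object(self, key) -> bytes:
        return self._objects[key]

    def list_keys(self, prefix=""):
        # Keys are flat strings; a shared prefix acts like a folder.
        return sorted(k for k in self._objects if k.startswith(prefix))

bucket = Bucket("analytics-raw")  # hypothetical bucket name
bucket.put_object("logs/2021/03/01.json", b'{"event": "login"}')
bucket.put_object("logs/2021/03/02.json", b'{"event": "logout"}')
bucket.put_object("images/logo.png", b"\x89PNG")
print(bucket.list_keys("logs/"))
```

Storing an object again under an existing key replaces it, which is why the key has to be unique within its bucket.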

Amazon Kinesis is a massively scalable, cloud-based analytics service which is designed for real-time applications.

- It allows the processing of gigabytes of data per second in real time.

- It captures data from hundreds of thousands of sources, including website clickstreams, database event streams, financial transactions, social media feeds, IT logs, and location-tracking events.

- The collected data is available in milliseconds and can be used to enable real-time analytics use cases such as real-time dashboards, real-time anomaly detection, dynamic pricing.

- After processing the data, Kinesis distributes it to multiple consumers simultaneously.

- The collected data can be easily integrated with the Amazon family of big data services, such as Amazon S3 (storage), Amazon DynamoDB (the No-SQL database for unstructured data), and Amazon Redshift (data warehouse product).

- Kinesis empowers developers to ingest any amount of data from several sources, scaling up and down as needed, and it can run on EC2 instances. An EC2 instance is a virtual server in Amazon’s Elastic Compute Cloud (EC2) that enables business subscribers to run application programs in the computing environment.

Google BigQuery

Google BigQuery is a fully-managed, serverless data warehouse that enables scalable analysis over petabytes of data. It is a Platform as a Service that supports querying using ANSI SQL. It also has built-in machine learning capabilities.

- It is a serverless, cost-effective multi-cloud data warehouse designed for business agility.

- It has automatic high scalability and can analyze petabytes of data using ANSI SQL.

- The application performs real-time analytics. It provides both AI and BI solutions under BigQuery ML and BigQuery BI.

- Its Data QnA feature (private alpha) helps to get data insights using natural language processing (NLP).

- It has automatic backup and replication of data. It allows easy restoration and comparison of data.

- It provides solutions for geospatial data types and functions using BigQuery GIS.

- It has flexible data ingestion.

- BigQuery provides strong data governance and security.

- It integrates Apache’s big data ecosystem, allowing existing Hadoop/Spark and Beam workloads to read or write data directly from BigQuery using the Storage API.

- It provides data transfer services from Teradata and Amazon S3.

- Google Cloud Public Datasets offer a repository of more than 100 public datasets across industries. We can store all the public datasets and can query up to one terabyte of data per month at no cost.

- With BigQuery Sandbox, you can get always-free access to the full power of BigQuery subject to certain limits.
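
As a rough illustration of the ANSI SQL querying mentioned above, the sketch below builds a standard aggregation query against one of the public datasets and runs it through the `google-cloud-bigquery` Python client. It assumes the client library is installed and Google Cloud credentials are configured. The helper names `build_top_names_query` and `run_query` are hypothetical, and the table `bigquery-public-data.usa_names.usa_1910_2013` is used only as an example.

```python
def build_top_names_query(limit=5):
    """Return an ANSI SQL query with a named @state parameter."""
    if limit < 1:
        raise ValueError("limit must be a positive integer")
    return (
        "SELECT name, SUM(number) AS total\n"
        "FROM `bigquery-public-data.usa_names.usa_1910_2013`\n"
        "WHERE state = @state\n"
        "GROUP BY name\n"
        "ORDER BY total DESC\n"
        f"LIMIT {int(limit)}"
    )


def run_query(sql, state):
    """Run the query on BigQuery. Needs google-cloud-bigquery and credentials."""
    from google.cloud import bigquery  # imported lazily; optional dependency

    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(
        query_parameters=[
            bigquery.ScalarQueryParameter("state", "STRING", state)
        ]
    )
    rows = client.query(sql, job_config=job_config).result()
    return [(row["name"], row["total"]) for row in rows]
```

For example, `run_query(build_top_names_query(3), "TX")` would return the three most common names recorded for Texas. Passing the state as a query parameter, rather than pasting it into the SQL string, keeps user input out of the query text.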

Azure HDInsight

Azure HDInsight is a managed, open-source analytics service from Microsoft Azure. It is a cloud distribution of Hadoop components.

- It makes processing massive amounts of data easy, fast, and cost-effective.

- Users can run frameworks such as Hadoop, Spark, Hive, LLAP, Kafka, Storm, R, and more.

- It is a highly scalable and high-productivity platform for developers and scientists.

- It provides data protection and extends on-premises security and governance controls to the cloud.

- It offers enterprise-grade security and monitoring.

- It is used in various ways, including data science, IoT, and data warehousing.

- It can be integrated with leading applications.

- Using Azure HDInsight, we can deploy Hadoop in the cloud without purchasing new hardware or paying other up-front costs.

IBM Cloud

IBM Cloud is a set of cloud computing services from IBM. It combines Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) and offers over 190 cloud services. IBM’s popular AI cloud platforms are Watson Analytics and Cognos Analytics. IBM Watson delivers services such as machine learning, natural language processing (NLP), and visual recognition.

- IBM Cloud’s IaaS allows organizations to deploy and access resources such as compute, storage, and networking over the internet.

- IBM Cloud PaaS, based on the open-source cloud platform Cloud Foundry, provides developers with end-to-end solutions for app development, testing, service management, and deployment, from on-premises to the cloud.

- It offers various compute resources, including bare-metal servers, virtual servers, serverless computing, and containers, on which enterprises can host their workloads.

- IBM also offers various networking services such as a load balancer, a content delivery network (CDN), virtual private network (VPN) tunnels, and firewalls.

- IBM’s platform scales to support both small development teams and large enterprise businesses.

- Depending on the data structure, you can choose the type of storage: object, block, or file.

- The cloud provides both SQL and No-SQL databases and tools for data querying and migration.

- IBM Cloud supports various programming languages, including Java, Node.js, PHP, and Python.

- Its security services include tracking activity, identity and access management, and authentication.

- It offers services such as API Connect, App Connect, and IBM Secure Gateway to integrate on-premises and cloud applications.

- For data migration to the cloud, IBM provides tools such as IBM Lift CLI and Cloud Mass Data Migration.

Endnotes

Big data is already growing exponentially. It has taken its position in our lives, and we wouldn’t be able to get away from it even if we wanted to. As the saying goes, when the going gets tough, the tough get going. The data that we are getting is large in size and is also coming in faster than ever before! In such times, if even after reading the signs we do not keep ourselves abreast of the latest big data analytics tools for handling such voluminous and largely unstructured data, then we are the only ones who will be at a loss. Learning these big data tools is more than the need of the hour if we want to make better and more meaningful decisions related to our business objectives.

FAQs – Frequently Asked Questions

Some of the most common questions are answered below:

- What is big data analytics technology?

Big data analytics technology is the set of tools and techniques used to extract meaningful insights from big datasets that are varied and large in volume. It is used to discover hidden patterns, unknown correlations, market trends, and customer preferences for decision-making.

- What are the tools used in big data analytics?

The big data analytics tools are categorized based on the architecture of the underlying systems. The primary categories are Massively Parallel Processing (MPP) databases, No-SQL databases, Distributed Storage and Processing tools, and Cloud Computing tools.

The big data tools are Teradata, Oracle Exadata, IBM Netezza, SAP HANA, EMC Greenplum, MongoDB, Cassandra, Oracle No-SQL, CouchDB, Amazon DynamoDB, Azure Cosmos DB, RavenDB, Neo4j, BigTable, AWS, Microsoft Azure, Google Cloud, Blob Storage, Databricks, Oracle Cloud, IBM Cloud, Alibaba Cloud, Apache Hadoop, Hive, Apache Spark, Apache Kafka, Impala, Apache Storm, Drill, Elasticsearch, HCatalog, Apache HBase, Oozie, Plotly, Azure HDInsight, Skytree, Lumify, Qubole, and Google BigQuery.

- Which tool is best for data analytics?

It’s hard to pick one tool. Each of the tools of big data analytics has its advantages and limitations. We talked in detail about their respective features above.

Teradata is the leader in the massively parallel processing (MPP) category. Its features, such as unlimited parallelism, high and linear scalability, a shared-nothing architecture, a mature optimizer, support for SQL commands, robust utilities, and the lowest total cost of ownership, make it top the charts!

MongoDB is the most popular document-based NoSQL database. It is a good fit for integrating hundreds of different data sources that are heterogeneous in nature. It is instrumental when the schema of the data is not defined. Additionally, the database is useful when a high volume of read and write operations is expected. It can store clickstream data for use in behavioral analysis.
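
To illustrate the point about undefined schemas, the sketch below stores two clickstream events with different fields in the same collection using `pymongo`. It assumes `pymongo` is installed and a MongoDB server is reachable at the given URI. The database name `analytics_demo`, the collection name `clickstream`, the sample events, and the helper names are all made up for this example.

```python
from collections import Counter

# Two clickstream events with different shapes; no schema is declared anywhere.
EVENTS = [
    {"user_id": 1, "page": "/home", "referrer": "search"},
    {"user_id": 2, "page": "/cart", "items": ["sku-1", "sku-2"],
     "device": {"os": "android"}},
]


def field_counts(docs):
    """Count how many documents carry each top-level field."""
    return Counter(key for doc in docs for key in doc)


def store_events(docs, uri="mongodb://localhost:27017"):
    """Insert the documents as-is. Needs pymongo and a running server."""
    from pymongo import MongoClient  # imported lazily; optional dependency

    client = MongoClient(uri)
    collection = client["analytics_demo"]["clickstream"]
    # Copy each dict because insert_many adds an _id field to its inputs.
    result = collection.insert_many([dict(doc) for doc in docs])
    return result.inserted_ids
```

Here `field_counts(EVENTS)` shows that only `user_id` and `page` appear in both events, yet `store_events(EVENTS)` would accept both documents without any table definition or migration.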

Apache Spark is the best tool in the distributed storage and processing category, as it is a lightning-fast unified analytics engine for both data processing and machine learning. Its USP is that it can process the data and also apply AI algorithms to it. It also speeds up Hadoop's computational process. One of the main concerns with Hadoop is maintaining speed when processing large datasets, in terms of both the waiting time between queries and the waiting time to run a program. Spark takes care of this.

Amazon Web Services (AWS) holds the throne in the world of cloud computing. Launched in 2006, it was one of the first providers to offer cloud computing infrastructure. It has expanded beyond cloud compute and storage and is now a major player in AI, databases, machine learning, and serverless deployments. In recent times, IBM Cloud has been climbing the ladder fast.

If you have any questions or want to share your opinion, do reach out to us in the comments below.
