Preface

The advancement in big data technologies is unprecedented. One of the primary reasons is the widespread acceptance of data science as a way to solve business problems. Thanks to advances in computer hardware and the ubiquitous use of the internet, the volume of data generated every day has surged. As a result, big data and data science are gaining momentum as a means to dig into this huge volume of data and find business-driven insights. There are many data technologies in play today. You may know some of them, or you may still be wondering, “What is big data technology?” That is why this article will guide you through the different types of data technologies and feature a handful of examples of each.

Why?

As one thing leads to another, this rise of big data tools and technologies has created demand for data professionals. Companies, big and small, are looking for experts who understand data and have the skills to derive meaningful insights from it. While mastering every facet of big data technologies may seem unrealistic, it is important to have a basic overview of the types of data technologies in use today.

Introduction

The term “big data” refers to extensive, complex data sets, including enormous volumes of stored data, social media data, and real-time data, along with the data management capabilities needed to handle them.

Big data is largely about volume: such data sets are typically measured in terabytes or petabytes.

This vast collection of data is growing at an exponential pace and has led to the development of various big data technologies. Using these techniques to analyze enormous volumes of data is known as big data analytics. Big data technology, on the other hand, is simply the collection of software utilities meant to analyze, process, and extract information from massive, complex data sets that traditional data processing software cannot handle.

Want to master it all? Start here!

AnalytixLabs is one of the premier Data Analytics institutes, specializing in training individuals and corporates in industry-relevant Data Science skills and related areas. It is led by a faculty of McKinsey, IIT, IIM, and FMS alumni with extensive practical expertise. With years in the education sector and a wide client base, AnalytixLabs helps young aspirants build a career in Data Science.

Time to address the elephant in the room. This article will take you through the basics of big data tools and technologies, types of technologies in play, top techniques to implement, and will answer a few commonly asked questions.

What is Big Data Technology?

A large number of technologies make up big data technology. While these technologies are distinct from each other, all of them deal with huge amounts of data, i.e., big data. When you sit down to study big data architecture, you will see how vast it is and how much there is to learn.

At an overall level, big data tools and technologies comprise software that allows users to analyze, extract, manage, and process huge amounts of data. This data is often complex, large in size and volume, and sometimes unstructured.

Big data tools help manage large and complex data that most traditional tools cannot handle. This is because traditional tools run on local machines or single servers and are designed for small to medium-sized structured data.

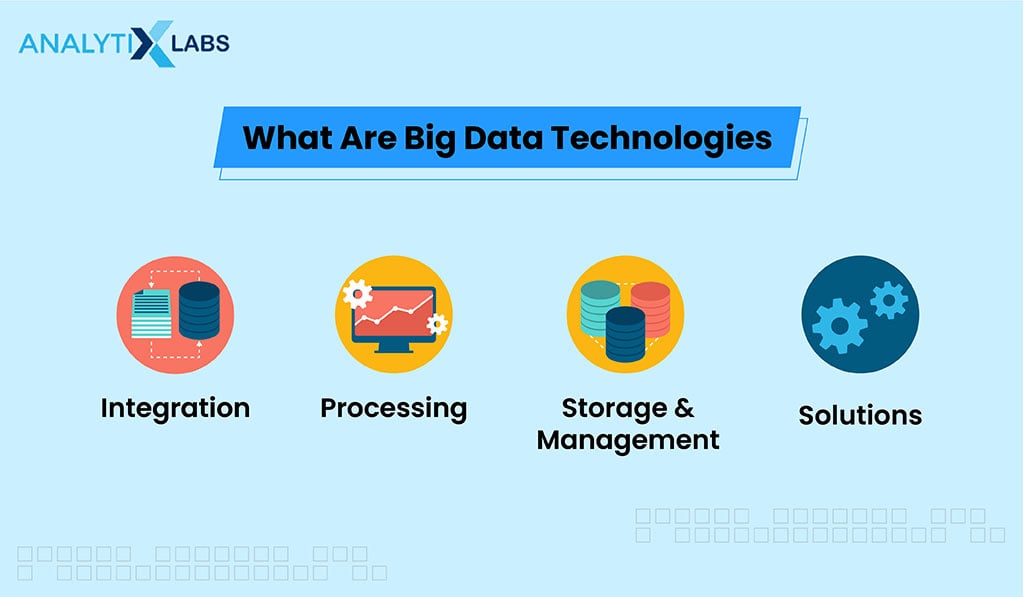

Before categorizing big data technologies, let us first look at the four primary objectives of these tools. This will lead us to the final answer to what we mean by big data technologies.

1. Integration

Big Data Technologies’ biggest objective is to integrate a large amount of data from the source to the user. This includes identifying mechanisms and procedures to stream data seamlessly in the day-to-day work framework. Incorporating a huge amount of data in an effortless manner is the objective of many big data technologies & techniques.

2. Processing

In a way an extension of the integration aspect, many big data technologies are responsible for creating the infrastructure that helps us deal with large amounts of data. This is often done by dividing the data into manageable pieces, processing them on powerful hardware in a remote location, and then, with the help of integration tools, giving users the same experience they would have when working with a small amount of data on their local machine.

3. Storage and Management

Beyond integrating large amounts of data into day-to-day work, many big data technologies and tools are primarily responsible for storing that data. They help us manage it, make sure we don’t lose track of it, and ensure that it is available for use when required.

4. Solutions

Many big data technologies and tools give users the options they need to find valuable insights that help an organization’s leaders make key decisions and solve business problems. These tools can often integrate with other big data technologies, and people with coding skills can use them to mine data, find patterns, run statistical tests, create predictive models, etc., on large quantities of data. This can surface information that would otherwise easily be lost without such technologies.

Types of Big Data Technologies

As discussed above, big data technologies help users store, manage, integrate, and process data, and find solutions. The various techniques and technologies available for big data can be broadly divided into two categories: Operational and Analytical.

Operational

It is this side of Big Data Technologies where a large number of Big Data Engineers, IT technicians, and Networking experts come together and are involved in big data processing and storage.

Operational technologies are responsible for generating big data and storing it efficiently; they are not responsible for providing any solutions. This data can be generated by social media platforms, shopping sites, hospitality businesses (for example, ticketing data), or other sources within the organization.

The organizations in turn are responsible for storing and managing this data. Thus, this is that type of big data technology that emerges from the organization’s day-to-day operations and provides the raw material for the analytics side of big data technologies.

Analytics

This is where data analysts and data scientists come into play. They use the types of big data technologies that help find business solutions by analyzing large amounts of data. Common analytics applications include stock market prediction, weather forecasting, and early identification of disease for insurance companies.

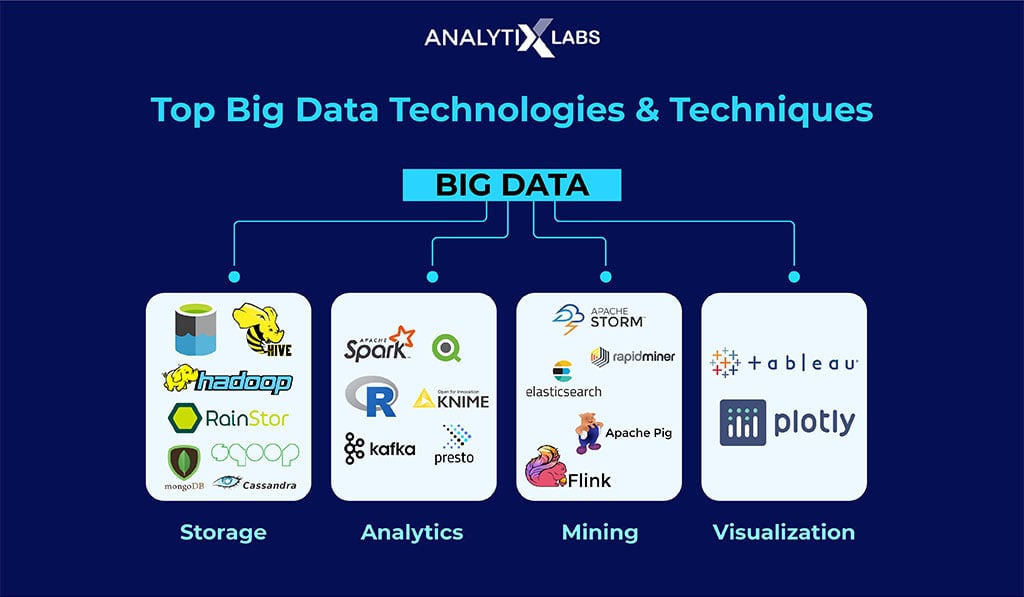

Top Big Data Technologies & Techniques

Big data tools are usually categorized according to their utility. Put more generally, however, all big data tools, technologies, and techniques can be divided into four major categories: storage, analytics, mining, and visualization.

Data Storage Tools

1. Hadoop

As one of the most common big data tools, Hadoop processes data in batch mode: it breaks the data down into smaller blocks that are stored and processed in parallel across a cluster of machines. In essence, Hadoop is a framework for the distributed storage and processing of Big Data. Important components of the Hadoop ecosystem include HDFS (Hadoop Distributed File System), YARN (Yet Another Resource Negotiator), MapReduce, and Hadoop Common.
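To make the idea of splitting data into blocks concrete, here is a minimal Python sketch of how a file might be cut into fixed-size blocks, with each block copied to several machines. The 128 MB block size and replication factor of 3 mirror common HDFS defaults, but the round-robin placement and the node names are simplifying assumptions; real HDFS uses a rack-aware placement policy managed by the NameNode.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, a common HDFS default block size
REPLICATION = 3                 # a common HDFS default replication factor


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the sizes of the blocks a file of `file_size` bytes is split into."""
    full, rest = divmod(file_size, block_size)
    # Every block is full-sized except possibly the last one.
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(num_blocks: int, nodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct nodes.

    Simplifying assumption: plain round-robin placement, not HDFS's rack-aware policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(nodes)
        placement[block_id] = [nodes[(start + i) % len(nodes)] for i in range(replication)]
    return placement


blocks = split_into_blocks(300 * 1024 * 1024)            # a 300 MB file
layout = place_replicas(len(blocks), ["n1", "n2", "n3", "n4"])
```

Here the 300 MB file becomes two full 128 MB blocks plus one 44 MB block, and losing any single node still leaves two copies of every block.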

2. HIVE

HIVE (Apache Hive) is an important tool in the Big Data domain because it allows users to easily extract, read, and write data. It offers a SQL-style interface and a SQL-like query language known as HQL (Hive Query Language), supports the common SQL data types, and provides JDBC drivers and a command-line interface (the HIVE Command-Line).

3. MongoDB

This is another important big data storage tool. MongoDB is a NoSQL database and is therefore different from typical RDBMS databases. Instead of a fixed, table-based schema, it stores data as flexible JSON-like documents, which makes it easy to store large amounts of varied data. MongoDB is particularly known for its flexibility and its performance in distributed architectures.

4. Apache Sqoop

This tool is used to transfer bulk data between Apache Hadoop and structured data stores such as MySQL and Oracle, in either direction. It provides connectors for all the major RDBMSs.

5. RainStor

RainStor is database management software developed by a company of the same name. It provides an enterprise online data archiving solution that works on top of Hadoop and runs natively on HDFS. It stores large amounts of data compactly using deduplication techniques.
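Deduplication, in its general form, stores each distinct piece of data once and keeps references for the repeats. The sketch below assumes simple fixed-size chunking with SHA-256 fingerprints; it illustrates the general technique only and is not RainStor's actual algorithm. The 4-byte chunk size is chosen purely for readability, and the function names are hypothetical.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int = 4) -> tuple[dict[str, bytes], list[str]]:
    """Split `data` into fixed-size chunks and store each distinct chunk once.

    Returns (store, recipe): `store` maps fingerprint -> chunk bytes, and
    `recipe` is the ordered list of fingerprints needed to rebuild the data.
    """
    store: dict[str, bytes] = {}
    recipe: list[str] = []
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first copy of each chunk
        recipe.append(digest)
    return store, recipe


def reconstruct(store: dict[str, bytes], recipe: list[str]) -> bytes:
    """Rebuild the original bytes by following the recipe."""
    return b"".join(store[d] for d in recipe)


original = b"ABCDABCDABCDXYZ!"
store, recipe = deduplicate(original)
```

The input has four chunks, but three are identical, so only two chunks are actually stored; the savings grow with how repetitive the archived data is.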

6. Data Lakes

A data lake is a consolidated repository for storing all kinds of data, including raw, untransformed data. Because there is no prerequisite to convert data into a structured format before storing it, data accumulation is easy, and various big data tools can then be used to perform analytics on it.
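Storing data without converting it first is often called schema-on-read: structure is applied only when the data is read. A minimal sketch follows, assuming a list of raw JSON strings stands in for files in a lake; the field names and default values are hypothetical.

```python
import json

# Raw records land in the lake as-is; note that they do not share a schema.
RAW_LINES = [
    '{"user": "a", "amount": 12.5}',
    '{"user": "b", "amount": "7", "channel": "web"}',
    '{"user": "c"}',
]


def read_with_schema(lines):
    """Apply a schema at read time: pick fields, coerce types, and fill defaults."""
    for line in lines:
        record = json.loads(line)
        yield {
            "user": str(record["user"]),
            "amount": float(record.get("amount", 0.0)),
            "channel": record.get("channel", "unknown"),
        }


rows = list(read_with_schema(RAW_LINES))
total = sum(row["amount"] for row in rows)
```

A different analysis could read the same raw lines with a different schema, which is the flexibility that makes data lakes attractive.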

7. Cassandra

Cassandra is another big data tool for data storage. It is a NoSQL database that can handle data spread across many nodes, and even across multiple data centers. Thanks to its scalability, its SQL-like query language (CQL), its integration with MapReduce, and its distributed design, it is often a top choice among NoSQL databases.
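Cassandra spreads data across nodes by hashing each row's partition key onto a token ring. The toy ring below illustrates that idea with virtual nodes and a simple clockwise walk to pick replicas, similar in spirit to Cassandra's SimpleStrategy. Assumptions: MD5 stands in for Cassandra's default Murmur3 partitioner, and the class, node, and key names are hypothetical.

```python
import bisect
import hashlib


def _token(key: str) -> int:
    # MD5 is only a stand-in hash here; Cassandra's default partitioner uses Murmur3.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class TokenRing:
    """Toy consistent-hashing ring with `vnodes` tokens per physical node."""

    def __init__(self, nodes: list[str], vnodes: int = 8):
        self._ring = sorted(
            (_token(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._tokens = [t for t, _ in self._ring]

    def node_for(self, partition_key: str) -> str:
        """The first token clockwise from the key's hash owns the key."""
        idx = bisect.bisect_right(self._tokens, _token(partition_key)) % len(self._ring)
        return self._ring[idx][1]

    def replicas_for(self, partition_key: str, rf: int = 3) -> list[str]:
        """Walk clockwise from the key's position, collecting `rf` distinct nodes."""
        start = bisect.bisect_right(self._tokens, _token(partition_key))
        result: list[str] = []
        for step in range(len(self._ring)):
            node = self._ring[(start + step) % len(self._ring)][1]
            if node not in result:
                result.append(node)
                if len(result) == rf:
                    break
        return result


ring = TokenRing(["n1", "n2", "n3", "n4"])
replicas = ring.replicas_for("user:42")
```

Because placement depends only on hashes, any node can compute where a key lives without a central lookup table, and adding a node moves only a fraction of the keys.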

Data Analytics Based Big Data Tools

1. Apache Spark

Spark is one of the most important tools in Big Data, as it allows users to perform batch, interactive, and iterative processing, visualization, data manipulation, etc. Because it processes data in memory (RAM), it is much faster than many older big data technologies. Hadoop is commonly used for batch processing of structured data, whereas Spark is often used with real-time data, which is why many organizations use both tools side by side.

2. R language

R is a programming language often described as a language made by statisticians for statisticians. It helps data analysts and scientists perform statistical tests and create statistical models. R can also be used to create Machine Learning and Deep Learning models. R made this list because it can now be integrated with big data technologies.

3. QlikView

A highly powerful BI tool, QlikView helps generate quick and detailed insights from big data. Its simple, straightforward user interface lets users click on data points and perform unrestricted data analysis. It uses associative data modeling to help identify relationships between features within a dataset or across datasets.

4. Qlik Sense

Similar to QlikView, Qlik Sense has a drag-and-drop user interface that helps users create story-based reports quickly.

5. Hunk

Hunk is a Big Data tool that helps explore, analyze, and visualize data in the Hadoop ecosystem. It offers drag-and-drop analytics, a rich developer environment, custom dashboards, integrated analytics, and fast deployment. To analyze data, it uses the Splunk Search Processing Language.

6. Platfora

Platfora is a subscription-based big data analytics tool. It is interactive and helps users work with raw data and identify patterns early.

7. Kafka

Kafka is a stream-processing platform similar to an enterprise messaging system. It handles large volumes of real-time data feeds and distributes them across a cluster of servers. It is used for data processing, real-time data delivery, etc.
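Kafka's core abstraction is a topic split into partitions, each an append-only log, with consumers tracking their own read offsets. The in-memory toy below sketches that model using hypothetical `Topic` and `Consumer` classes; it is not the Kafka client API. `zlib.crc32` stands in for the murmur2 hash that Kafka's default partitioner applies to message keys.

```python
import zlib
from collections import defaultdict


class Topic:
    """Toy topic: a fixed number of append-only partition logs."""

    def __init__(self, name: str, num_partitions: int = 3):
        self.name = name
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def publish(self, key: str, value: str) -> tuple[int, int]:
        """Append a message; the same key always maps to the same partition."""
        # Stand-in hash (Kafka's default partitioner uses murmur2 on the key).
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class Consumer:
    """Remembers how far it has read in each partition."""

    def __init__(self, topic: Topic):
        self.topic = topic
        self.offsets: defaultdict[int, int] = defaultdict(int)

    def poll(self) -> list[tuple[str, str]]:
        """Return every message published since the last poll."""
        batch = []
        for p, log in enumerate(self.topic.partitions):
            batch.extend(log[self.offsets[p]:])
            self.offsets[p] = len(log)
        return batch


clicks = Topic("clicks")
first = clicks.publish("user-1", "home")
second = clicks.publish("user-1", "cart")
consumer = Consumer(clicks)
batch1 = consumer.poll()
batch2 = consumer.poll()
clicks.publish("user-2", "search")
batch3 = consumer.poll()
```

Keeping messages in a log instead of deleting them on delivery is what lets several independent consumers read the same feed at their own pace.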

8. Presto

This tool is often used in big data analytics. Presto is a distributed SQL query engine optimized for running interactive analytic queries. It can query different data sources such as Hive, Cassandra, MySQL, etc. It supports pipelined execution, user-defined functions, and simple debugging of code. Its biggest advantage is that it scales to large volumes of data, which is why companies such as Facebook, where Presto was originally developed, use it for their data analytics needs.

9. KNIME

Based on Java, KNIME helps users build analytics workflows using sophisticated data mining techniques, SQL-style queries for data extraction, predictive analytics, etc. It can also connect to the common Hadoop distributions and use machine learning algorithms via MLlib integration.

10. Splunk

Splunk is used to provide quick insights into data. Users can access Hadoop clusters through virtual indexes and connect to tools such as Tableau and to databases including Oracle, MySQL, etc. It helps users handle real-time streaming data by capturing, indexing, and correlating it. Users can also gain insights easily by generating reports, graphs, dashboards, etc.

11. Mahout

Mahout makes it possible to apply Machine Learning to Big Data and supports ML applications such as segmentation, classification, collaborative filtering, etc.
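Collaborative filtering recommends items to a user based on what similar users liked. A minimal user-based sketch using cosine similarity follows; the ratings data and function names are hypothetical, and this is not Mahout's API, only an illustration of the kind of technique Mahout provides for large datasets.

```python
from math import sqrt

# Hypothetical user -> {item: rating} data.
RATINGS = {
    "ana": {"hadoop_book": 5, "spark_book": 4, "kafka_book": 1},
    "ben": {"hadoop_book": 4, "spark_book": 5},
    "chen": {"spark_book": 2, "kafka_book": 5, "flink_book": 4},
}


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity between two sparse rating vectors (missing ratings count as 0)."""
    common = u.keys() & v.keys()
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user: str, ratings: dict[str, dict[str, float]], k: int = 2) -> list[str]:
    """Score unseen items by other users' ratings, weighted by their similarity to `user`."""
    scores: dict[str, float] = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:k]


suggestions = recommend("ben", RATINGS)
```

At big data scale the pairwise similarity step is the expensive part, which is why libraries distribute it across a cluster.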

Data Mining Based Big Data Tools

1. MapReduce

MapReduce makes it possible to deal with the volume aspect of Big Data, as it uses parallel and distributed algorithms to apply logic to huge datasets. MapReduce thus plays a crucial role in transforming otherwise unmanageable data into a manageable structure. The name MapReduce is a portmanteau combining two methodologies: Map and Reduce. Map is responsible for sorting and filtering data, while Reduce summarizes and aggregates it.
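The classic way to illustrate MapReduce is a word count. The single-process Python sketch below walks through the stages: map emits `(word, 1)` pairs, a shuffle step groups and sorts the pairs by key (work the framework itself does between the two phases), and reduce sums each group. In Hadoop these stages run in parallel across many machines; here they run sequentially for clarity, and the function names are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str):
    """Emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Group values by key and sort by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Aggregate all values for one key."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


counts = word_count(["big data big insights", "data beats opinions"])
```

Because each map call sees only one line and each reduce call sees only one key, both phases can be split across machines without coordination beyond the shuffle.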

2. Apache PIG

Developed at Yahoo, Pig uses a scripting language called Pig Latin, which is similar to SQL and is used for structuring, processing, and analyzing big data. It makes programming easier because users can write their own custom functions, while Pig translates the scripts into MapReduce programs in the background.

3. RapidMiner

RapidMiner can perform a range of functions, from ETL to data mining and even predictive modeling and machine learning. It is useful for solving business analytics problems, supports multiple languages, and provides a simple, interactive user interface. Being open source is one of its biggest advantages, and it is still generally considered secure.

4. Apache Storm

Storm is an open-source, scalable, distributed real-time computation framework. Based on Clojure and Java, it processes unbounded streams of data.

5. Flink

Apache Flink is designed to process both unbounded and bounded streams of data. Bounded streams have a defined start and end, so processing them is equivalent to batch processing; unbounded streams have a start but no defined end and must be processed continuously as data arrives. Because it handles both, Flink works as a highly scalable distributed processing engine that you can use for data pipelines and data analytics applications.
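A common way to process an unbounded stream is to group events into fixed, non-overlapping time windows (tumbling windows). The generator below is a minimal sketch of that idea, not Flink's API; it assumes events arrive in timestamp order, whereas Flink also handles out-of-order events with watermarks. Because it is a generator, the same function works on a bounded list or an endless stream, emitting each window as soon as it closes. The `ticks` stream is hypothetical.

```python
from collections import Counter
from collections.abc import Iterable, Iterator
from itertools import count, islice


def tumbling_window_counts(events: Iterable[tuple[int, str]],
                           window_size: int) -> Iterator[tuple[int, Counter]]:
    """Count keys per window; `events` are (timestamp, key) pairs in timestamp order."""
    current_start = None
    counts: Counter = Counter()
    for ts, key in events:
        start = ts - ts % window_size
        if current_start is not None and start != current_start:
            yield current_start, counts  # the previous window has closed
            counts = Counter()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, counts  # flush the last window (bounded input only)


# Bounded input: behaves like a batch job.
bounded = list(tumbling_window_counts([(1, "a"), (3, "b"), (4, "a"), (11, "a"), (25, "b")], 10))


def ticks():
    """An unbounded stream: one event per time unit, forever."""
    for t in count():
        yield t, "tick"


# Unbounded input: take only the first two closed windows.
first_two = list(islice(tumbling_window_counts(ticks(), 5), 2))
```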

6. Elasticsearch

Written in Java, Elasticsearch is a common search engine tool used by many companies (such as Accenture, Stack Overflow, and Netflix). It provides a full-text search engine based on the Lucene library, with an HTTP web interface and schema-free JSON documents.
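Full-text search engines built on Lucene rely on an inverted index, which maps each term to the documents that contain it. A toy version follows, assuming naive lowercase tokenization and AND semantics for multi-word queries; the class name and sample documents are hypothetical, and real Elasticsearch adds analyzers, relevance scoring, and distribution across shards.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Naive analyzer: lowercase and keep runs of letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self):
        self.postings: defaultdict[str, set[str]] = defaultdict(set)  # term -> doc ids
        self.docs: dict[str, dict] = {}

    def add(self, doc_id: str, doc: dict) -> None:
        """Index every string field of a schema-free JSON-like document."""
        self.docs[doc_id] = doc
        for value in doc.values():
            if isinstance(value, str):
                for term in tokenize(value):
                    self.postings[term].add(doc_id)

    def search(self, query: str) -> list[str]:
        """Return ids of documents containing every query term."""
        terms = tokenize(query)
        if not terms:
            return []
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(hits)


index = InvertedIndex()
index.add("1", {"title": "Big data with Spark"})
index.add("2", {"title": "Streaming data with Kafka", "tags": "real-time"})
```

A query only touches the posting lists for its own terms, so lookups stay fast even when the index covers millions of documents.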

Data Visualization Based Big Data Tools

1. Tableau

A powerful data visualization tool, Tableau can now be integrated with spreadsheets and even with big data platforms, cloud, relational databases, etc., providing quick insights from raw data. As it is a secure proprietary tool that even allows sharing of dashboards in real-time, the popularity of this tool is ever increasing.

2. Plotly

Plotly is a library that allows users to create interactive graphs and dashboards. It provides API libraries for common languages including Python, R, MATLAB, and Julia. For example, Plotly Dash helps create interactive, web-based dashboards easily using Python.

Trending Big Data Tools and Technologies

Several of the latest big data tools and technologies are expected to be in high demand. This makes it important for data science aspirants, especially those who want to build a career in big data, to understand these technologies and know at least their basics. Previously, we covered 16 important big data analytics tools in our blog. Along with those, here are a few more that need your attention.

i) Docker

Docker allows users to build, test, deploy, and run their applications. It uses containers, a mechanism for packaging software into standardized units. It lets users package an application so that everything it needs, such as dependent libraries and system tools, is in one place. Docker helps standardize application operations, cut costs, and increase the reliability of the whole deployment process.

ii) TensorFlow

The use of AI is trending in Big Data, and one of the leading tools in this race is TensorFlow, an ecosystem of resources, modules, and tools in itself. TensorFlow can be used to create Machine Learning and Deep Learning models that can be applied to Big Data.

iii) Apache Beam

Apache Beam is used to create parallel data processing pipelines, which simplifies large-scale data processing. The user builds a program that defines the pipeline, and these programs can be written in Java, Python, Go, etc.

iv) Kubernetes

Kubernetes helps users automate the deployment, scaling, and management of containerized applications across clusters of hosts. Containerized applications deployed in the cloud can easily be tracked using Kubernetes.

v) Blockchain

Blockchain creates blocks of data and chains them together using cryptographic hashes, which makes transactions in digital currencies such as Bitcoin extremely secure. Blockchain’s potential in the world of big data is huge, so much so that the BFSI (banking, financial services, and insurance) domain is all set to embrace the benefits of implementing it.
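The chaining idea can be shown with Python's `hashlib`: each block stores the hash of the previous block, so altering any earlier block breaks every link after it. This is a minimal sketch that omits consensus, digital signatures, and proof-of-work; the block fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(block: dict) -> str:
    """SHA-256 over the block's content fields, serialized deterministically."""
    payload = json.dumps({k: block[k] for k in ("index", "data", "prev_hash")}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def make_chain(records: list[str]) -> list[dict]:
    """Build a chain where every block points at the hash of the one before it."""
    chain, prev = [], GENESIS_PREV
    for i, data in enumerate(records):
        block = {"index": i, "data": data, "prev_hash": prev}
        block["hash"] = block_hash(block)
        chain.append(block)
        prev = block["hash"]
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash and check that each link matches."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev_hash"] != prev or block["hash"] != block_hash(block):
            return False
        prev = block["hash"]
    return True


ledger = make_chain(["A pays B 5", "B pays C 2"])
```

Tampering with a stored transaction, for example changing the first block's data, makes `is_valid(ledger)` return `False` because the recomputed hash no longer matches.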

vi) Airflow

Apache Airflow is a workflow scheduling and pipeline tool. It helps schedule and manage complex data pipelines that pull from various sources. Airflow represents these workflows as Directed Acyclic Graphs (DAGs). By making data pipelines easier to build and manage, Airflow helps keep the data that feeds ML models accurate and up to date, which in turn supports more accurate and efficient models.
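A DAG expresses which tasks depend on which. As a minimal sketch of the underlying idea (not Airflow's API), Python's standard `graphlib` module can compute a valid run order and group the tasks that could run in parallel. The task names are hypothetical. `graphlib` raises a `CycleError` if the dependencies contain a cycle, which is exactly what the "acyclic" in DAG rules out.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean": {"extract_orders", "extract_customers"},
    "train_model": {"clean"},
    "report": {"clean"},
}


def run_order(dag: dict[str, set[str]]) -> list[str]:
    """One valid sequential order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


def parallel_batches(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves: every task in a wave only depends on earlier waves."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # pretend the whole wave finished successfully
    return batches


order = run_order(PIPELINE)
waves = parallel_batches(PIPELINE)
```

A scheduler can hand each wave to separate workers, which is roughly how DAG-based tools run independent extraction tasks concurrently.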

FAQs- Frequently Asked Questions

1. What are examples of big data?

Common examples of big data applications include:

- Discovering consumer shopping habits

- Personalized marketing

- Fuel optimization tools for the transportation industry

- Monitoring health conditions through data from wearables

- Live road mapping for autonomous vehicles

- Streamlined media streaming

- Real-time data monitoring and cybersecurity protocols

2. Where are Big Data technologies used?

Many successful companies use big data. Common examples of the use of big data technologies include:

- Netflix, Spotify, and other streaming platforms for recommending movies and songs

- Amex and other Credit Card companies to understand consumer behavior

- Amazon, Flipkart, Walmart, etc., for online trading and purchasing

- Hospitality companies for online ticket booking

3. What are the advantages of big data technologies?

While there are a number of advantages, the most common advantages include cost optimization, improving operational efficiency, and fostering competitive pricing.

Conclusion

Businesses are starting to rely more on big data technologies to solve daily problems and make major business decisions. To master technologies available for big data, you must have a fair knowledge of all the basics. This includes understanding how these technologies work and where they lie in the big data framework. Your starting point can be to learn more about the tools mentioned in this article. Good luck and happy learning to you.