Every day, over “2.5 quintillion bytes of data” are created. More than 1,200 petabytes of information are stored by Google, Facebook, Microsoft, and Amazon alone. The sources of data are practically unlimited, ranging from human interaction with machines to virtual networks, where machines are now trained to generate data themselves!

In this day and age, when every piece of information that is created has the potential to influence a meaningful decision, we cannot afford to miss the opportunity to leverage this data. To do so, we need to understand the challenges that such massive data presents.

Referring to data as ‘huge’ strikes a chord with big data. Yet big data is about more than sheer volume. Earlier, a lot of data was lost because it could not be used in relational databases, and only a small portion of the data was stored in them. Dealing with data means addressing several different problems, which are listed and explained in the upcoming sections. These problems are also referred to as the V’s of Big Data. Here, we look at the definition of veracity, each of these problems, and examples of veracity.

What is Data Veracity in big data?

The word veracity originates from verax, which means ‘speaking truly’, and veracity represents “conformity with truth or fact”. So, veracity in big data refers to how well the data conforms to the truth and how accurate and precise it is. In other words, data veracity describes the quality of the data: the level to which the data is reliable, consistent, accurate, and trustworthy.

Unreliable data consists of noisy records and low-value information such as missing values, extreme values, duplicates, and incorrect data types. Such data neither helps solve the business problem nor helps a firm make meaningful decisions.
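To make these problem types concrete, here is a minimal sketch that counts each of them in a small set of records. The order records, the `amount` field, and the `max_plausible` threshold are all hypothetical, chosen only for illustration; a real dataset would need its own rules for what counts as "extreme".

```python
from collections import Counter

# Hypothetical order records; field names and values are illustrative only.
orders = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},     # missing value
    {"order_id": 3, "amount": "forty"},  # incorrect data type
    {"order_id": 1, "amount": 25.0},     # exact duplicate of the first record
    {"order_id": 4, "amount": 99999.0},  # extreme value
]

def profile(records, field, max_plausible):
    """Count common veracity problems for one numeric field."""
    report = Counter()
    seen = set()
    for rec in records:
        key = tuple(sorted(rec.items()))  # hashable fingerprint of the record
        if key in seen:
            report["duplicates"] += 1
            continue
        seen.add(key)
        value = rec.get(field)
        if value is None:
            report["missing"] += 1
        elif not isinstance(value, (int, float)):
            report["wrong_type"] += 1
        elif value > max_plausible:  # hypothetical plausibility threshold
            report["extreme"] += 1
    return report

print(dict(profile(orders, "amount", max_plausible=10_000)))
```

Each of the four problem types appears once in this sample, so each count is 1.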

Trustworthiness refers to how reliable the data source is, what type of data it is, and how it has been processed. We shall look at steps to improve data quality by eliminating biases, abnormalities, duplicates, discrepancies, and volatility. How big data is interpreted directly affects whether the end results are actionable and relevant.

Sources of Data Veracity

There are several sources of poor data veracity. Some examples include:

- Statistical Biases: Statistical bias, or data bias, is an error in which some data points are given more weight than others, which makes the data inconsistent. An organization that bases decisions on values calculated from biased data ends up acting on inaccurate figures.

- Bugs in Application: Data can get distorted due to bugs present within the software or application. Bugs can transform or miscalculate the data.

- Noise: Another source of poor veracity is noise in the dataset. Noise is information of no value, such as missing or incomplete data, that adds irrelevant content to the dataset.

- Outliers or Anomalies: Outliers and anomalies are data points that deviate from the normal pattern of the data. For instance, fraud detection is based on identifying abnormal transactions made through internet banking.

- Uncertainty: Even after measures are taken to ensure data quality, discrepancies within the data, such as incorrect values, stale or obsolete records, or duplicates, can still lead to uncertainty.

- Lack of credible data sources: Data lineage is one of the important factors in maintaining correct data. Because data is collected, captured, extracted, and stored from various sources, it is very difficult to trace where it originally came from.
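Outliers are one of the few sources above that can be flagged mechanically. The sketch below uses the interquartile range (IQR) rule, also known as Tukey's fences; this is one common technique among many, and the multiplier `k=1.5` is a widely used convention rather than something this article prescribes. The transaction amounts are hypothetical.

```python
import statistics

def find_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical transaction amounts; 500 stands out from the rest.
amounts = [20, 22, 19, 25, 21, 23, 24, 500]
print(find_outliers(amounts))  # [500]
```

A flagged value is only a candidate for review: as the fraud-detection example shows, an outlier may be the most important record in the dataset rather than an error.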

These sources of poor veracity make extensive data preprocessing and cleaning necessary. It is important to remove incorrect data so that what remains is accurate, consistent, useful, and relevant enough to drive meaningful insights.
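A cleaning step of the kind described here can be sketched in a few lines. This is a minimal illustration under stated assumptions: records are plain dictionaries, duplicates are exact matches only, and records whose `amount` is missing or cannot be converted to a number are dropped rather than repaired. Those policies and the sample data are hypothetical; real pipelines often impute or quarantine instead of dropping.

```python
def clean(records, field):
    """Drop exact duplicates and records with a missing or non-numeric field,
    and coerce the remaining field values to float."""
    cleaned, seen = [], set()
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key in seen:  # exact duplicate of an earlier record
            continue
        seen.add(key)
        value = rec.get(field)
        if value is None:  # missing value
            continue
        try:
            value = float(value)  # coerce numeric strings and ints
        except (TypeError, ValueError):
            continue  # not convertible: drop instead of guessing
        cleaned.append({**rec, field: value})
    return cleaned

raw = [
    {"order_id": 1, "amount": "25.0"},   # numeric string: coerced to 25.0
    {"order_id": 1, "amount": "25.0"},   # exact duplicate: dropped
    {"order_id": 2, "amount": None},     # missing: dropped
    {"order_id": 3, "amount": "forty"},  # not convertible: dropped
    {"order_id": 4, "amount": 12},       # int: coerced to 12.0
]
print(clean(raw, "amount"))
```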

How to ensure high data veracity?

Following are some of the ways to ensure high data veracity:

1) Validate the data sources: It is imperative to validate the data sources, because data is collected from multiple sources and then extracted and stored in different databases.

2) Acquire knowledge about the data: Gather as much information as possible about the data, such as what kind of data the requirement calls for, where the data will come from, how to clean and process it, and which procedures to apply to it. This kind of data management helps improve data veracity.
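The first step, validating the data sources, can be sketched as a schema check applied to each source separately, so that problems are traced back to the source that produced them. The expected schema and the source names `pos_terminal` and `web_shop` are hypothetical; in practice the schema would come from the knowledge gathered in the second step.

```python
# Hypothetical expected schema: field name -> allowed Python type(s).
EXPECTED_SCHEMA = {"order_id": int, "amount": (int, float), "channel": str}

def validate_source(source_name, records, schema=EXPECTED_SCHEMA):
    """Return (source, record index, problem) tuples for one data source."""
    problems = []
    for i, rec in enumerate(records):
        # Fields the schema requires but the record lacks.
        for field in sorted(schema.keys() - rec.keys()):
            problems.append((source_name, i, f"missing field '{field}'"))
        # Fields that are present but have the wrong type.
        for field, expected in schema.items():
            if field in rec and not isinstance(rec[field], expected):
                problems.append((source_name, i, f"bad type for '{field}'"))
    return problems

sources = {
    "pos_terminal": [{"order_id": 1, "amount": 25.0, "channel": "card"}],
    "web_shop": [{"order_id": "2", "amount": 10.0}],  # id as str, no channel
}
for name, recs in sources.items():
    for problem in validate_source(name, recs):
        print(problem)
```

Keeping the source name in every problem report is a small step toward the data lineage mentioned earlier.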

The Other V’s of Big Data

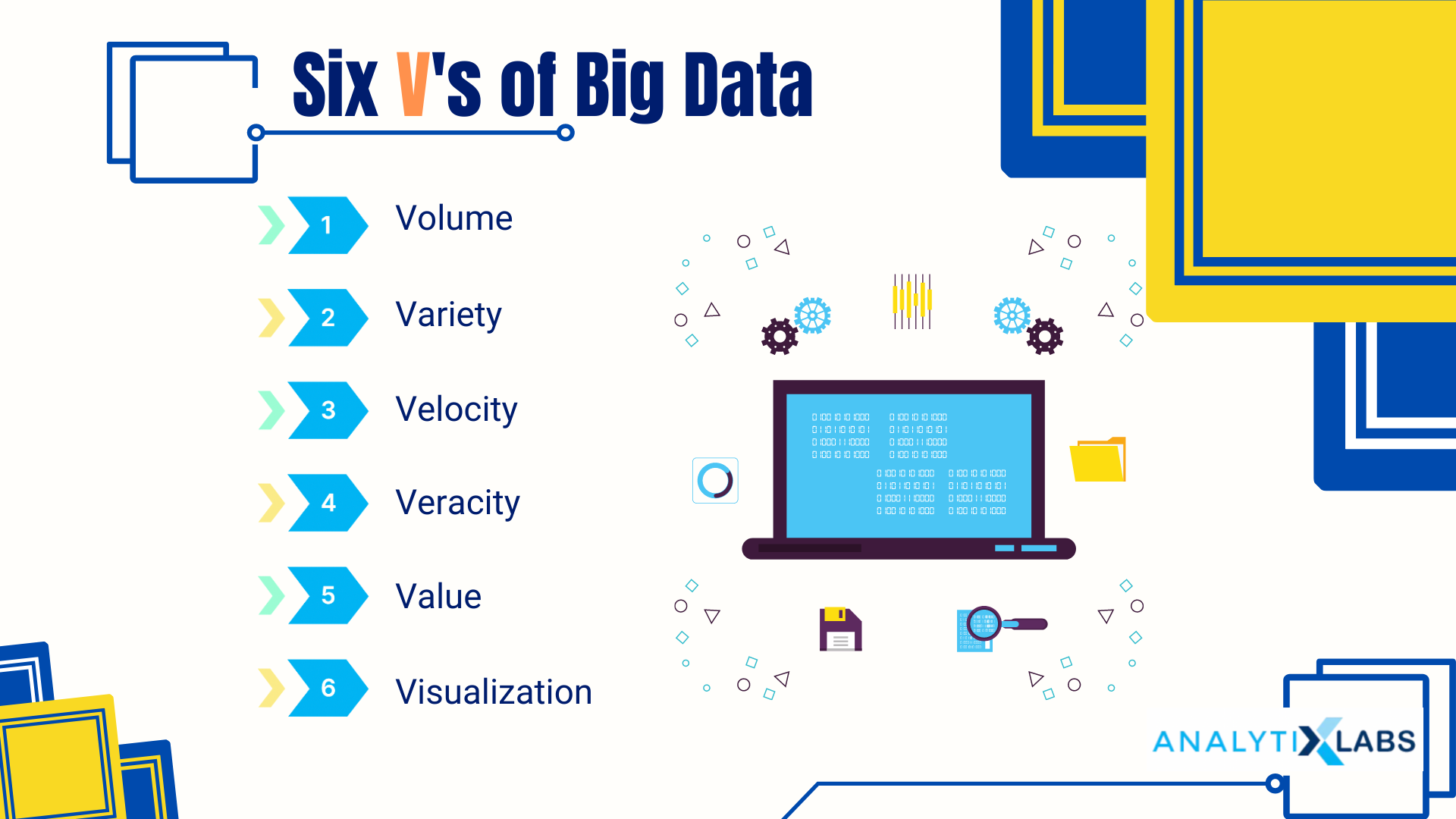

These V’s form the pillars by which data is recognized as ‘big’. Data that has the following characteristics is known as Big Data. We look at each of them below:

Volume

Big data is synonymous with a “huge or vast volume of data”, and volume is its main characteristic. The number of data-generating sources keeps growing day by day.

For example, compared with earlier times, a supermarket now has more customers and more orders per customer, and the number of products and product subcategories has grown as well. There are also more ways to place an order, both online and offline.

Earlier, payment was restricted to cash and debit or credit cards; now there are many payment channels, some of which also generate loyalty or reward points. As superstores expand, their branches, employees, marketing, and financial activities expand too, creating more data.

Another form of data is click-stream or web data generated by visits to a website: the total number of clicks, how much time is spent on each web page, and which landing pages have been visited. Each such captured interaction is known as a touchpoint.

Hence, the “Big” in Big Data refers to an enormous volume of data. The next feature of big data is the variety of the data.

Variety

A large amount of data also comes from many types of sources and in different categories, both structured and unstructured. Capturing these different varieties of information requires different technologies to store it. Structured data, i.e., data in tabular format, can be stored in spreadsheets such as Excel or in relational database management systems.

In contrast, unstructured data such as text (emails, tweets, messages, PDFs), images, video and audio, and data from monitoring devices requires other technologies, such as non-relational databases. As noted above, payments also come in many varieties, such as cash, credit or debit card (POS), internet banking, mobile banking, ATM, UPI, and wallets.

Variety also makes analysis harder: the data comes in different formats and lives on different platforms, so the same framework cannot be used to process all of it. Hence, the variety of unstructured data creates challenges for storing, mining, and analyzing data.

Velocity

Velocity means “the speed at which something moves in a particular direction”. In the context of big data, velocity refers to the speed or rate at which data is generated.

In the superstore example, there may once have been 100 orders per hour. Now, with the store expanded into a chain with multiple branches, the order rate could have risen to 10,000 per hour. Every order or transaction still needs to be stored and authenticated, so we need a technology or framework that can store every piece of information generated by the sources.
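Converting the illustrative figures of 100 and 10,000 orders per hour into per-second terms shows what the storage framework has to keep up with. This simple arithmetic assumes orders arrive evenly across the hour, which real traffic rarely does.

```latex
\begin{aligned}
\text{growth factor} &= \frac{10{,}000\ \text{orders/h}}{100\ \text{orders/h}} = 100 \\
\text{before: } \frac{3600\ \text{s}}{100\ \text{orders}} &= 36\ \text{s per order} \\
\text{after: } \frac{3600\ \text{s}}{10{,}000\ \text{orders}} &= 0.36\ \text{s per order}
  \quad\Longleftrightarrow\quad \approx 2.78\ \text{orders/s}
\end{aligned}
```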

Therefore, the data generation rate also adds to the challenges of managing data.

Validity or Veracity

Veracity in big data is about validating the data. A small amount of data is easy to check, audit, and store. With big data, however, we cannot confirm the quality of the data while storing it, because the data is not validated as it is generated and stored. The reason is velocity: data is generated so quickly that there is no time to validate it.

Processing and checking data both take time, and that time is not available. As soon as data is created, it must be stored (or, in everyday terms, ‘dumped’ somewhere); otherwise it may be lost. Checking data at the moment of its creation is therefore not feasible, so when data is stored, its quality is still unknown.
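One common way to reconcile velocity with validation is to store raw data first and validate it in a later batch step. The sketch below illustrates that pattern under assumptions: an in-memory list stands in for a real landing zone (a file or object store, for example), and the rule that a valid record must be a JSON object with a numeric `amount` field is hypothetical.

```python
import json

def ingest(raw_line, landing_zone):
    """Store the raw record immediately, without validation, so nothing is lost."""
    landing_zone.append(raw_line)

def validate_later(landing_zone):
    """Batch step run after ingestion: split stored records into valid and rejected."""
    valid, rejected = [], []
    for line in landing_zone:
        try:
            rec = json.loads(line)
        except json.JSONDecodeError:
            rejected.append(line)  # not parseable at all
            continue
        # Hypothetical rule: a valid record is an object with a numeric amount.
        if isinstance(rec, dict) and isinstance(rec.get("amount"), (int, float)):
            valid.append(rec)
        else:
            rejected.append(line)
    return valid, rejected

landing_zone = []  # stands in for raw storage
for line in ['{"order_id": 1, "amount": 25.0}', '{"order_id": 2}', 'not json']:
    ingest(line, landing_zone)

valid, rejected = validate_later(landing_zone)
print(len(landing_zone), len(valid), len(rejected))  # 3 1 2
```

Rejected records are kept rather than discarded, so they can be inspected or reprocessed once the cause of the problem is known.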

Value

When we refer to the value of data, we mean its economic value. A lot of data is created, and organizations invest large amounts of money to collect, store, and restore it. But what value can be derived from this data? If, after such an investment, we cannot derive meaning or value from the data because it is too complex, that is also a problem.

Volatility or Variability

Data generation, in both velocity and variety, is not consistent over time. In real time, data is generated at different times and even across different time zones. For example, suppose a job is set up to perform a monthly clean-up and depends on a predecessor job that archives the data. Until the archival job has run, the clean-up job will not run.

Dependencies between jobs create variability in when the jobs run. For instance, the clean-up job may have run at 9 AM EST last month but at 9:30 AM EST this month. These are some of the ways in which data generation becomes variable.
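The effect of the job dependency on timing can be sketched with a simple rule, assumed here for illustration: the clean-up job starts at its scheduled time or when the archival job finishes, whichever is later. The dates and archive finish times are hypothetical; they are chosen to reproduce the 9 AM and 9:30 AM runs described above.

```python
from datetime import datetime

def cleanup_start(scheduled, archive_finished):
    """Start at the scheduled time or when archiving finishes, whichever is later."""
    return max(scheduled, archive_finished)

# Hypothetical run history for two months (times in EST).
last_month = cleanup_start(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 8, 45))
this_month = cleanup_start(datetime(2024, 2, 1, 9, 0), datetime(2024, 2, 1, 9, 30))
print(last_month.strftime("%H:%M"), this_month.strftime("%H:%M"))  # 09:00 09:30
```

The schedule itself never changes; the variability comes entirely from the predecessor job.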

In the superstore example, the number of orders placed before 9 AM could be relatively low compared with orders placed during the day or during the sale season. Hence, data generation is inconsistent, and there is a lot of variability in the data.

In other words, volatility can be described as “the lifetime of data and the rate of change”. Considering volatility and the examples above, a business must decide, according to its requirements, how long data remains relevant.
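Once a business has decided how long data stays relevant, that decision can be applied as a retention window. This is a minimal sketch assuming each record carries a `created` date; the 30-day window and the sample records are hypothetical.

```python
from datetime import date, timedelta

def within_retention(records, today, retention_days):
    """Keep only records whose 'created' date falls inside the retention window."""
    cutoff = today - timedelta(days=retention_days)
    return [r for r in records if r["created"] >= cutoff]

records = [
    {"order_id": 1, "created": date(2024, 1, 5)},
    {"order_id": 2, "created": date(2024, 3, 20)},
    {"order_id": 3, "created": date(2024, 3, 30)},
]
kept = within_retention(records, today=date(2024, 4, 1), retention_days=30)
print([r["order_id"] for r in kept])  # [2, 3]
```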

Visualization

Visualizing such a vast amount of data is also a challenge. With so much information available, how can we view it? Deciding how to represent such data is an added task.

This brings us to the end of understanding the veracity in big data. Below are some commonly asked questions that we have addressed to offer more clarity on this topic.

Data Veracity: FAQs

1. What are the 5 V’s of big data?

The 5 V’s of big data are:

- Volume: Volume describes how big the dataset is, i.e., how many data elements it contains.

- Variety: Variety describes the different types, formats, and sources of the data points in the dataset.

- Velocity: Velocity indicates the rate at which the data is generated.

- Veracity: Veracity indicates how accurate and trustworthy the data is.

- Value: Value details how much value the data adds.

2. What is data veracity?

In Big data, veracity refers to the quality, consistency, accuracy, and trustworthiness of the data.

3. Why is veracity important?

Veracity matters because data must be validated before it can be relied upon. Data is created at high velocity, and ensuring its quality at that speed is critical. Hence, checking data quality is imperative.

4. What are the sources of the veracity of big data?

The sources of veracity problems in big data are as follows:

- Statistical Biases

- Bugs in the software

- Noise

- Abnormalities

- Obsolete Data

- Lack of credible data sources or data lineage

- Uncertain or ambiguous data

- Incorrect or Duplicate data

- Stale data

- Human Error

- Information Security

5. What is the structure of big data?

Big data can be categorized by structure into three types:

- Structured data

- Unstructured data

- Semi-Structured data

Conclusion

Veracity in big data is essential to understand before using the data. It is critical to investigate the quality of the data along with the other V’s of big data. High veracity means the data is in good condition to be used and relied upon. Low veracity means a high percentage of the data is unusable or of no value.
