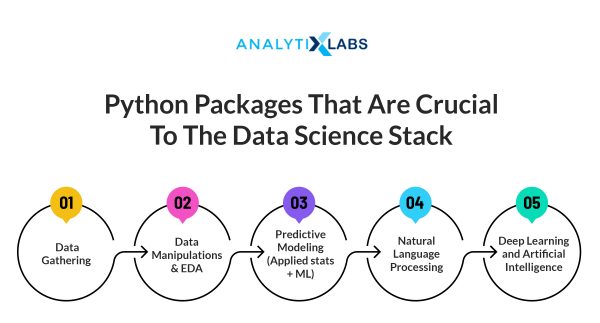

Data Science is the art of getting actionable insights from various forms of data. It is a stack of interconnected tasks – data gathering, data manipulations, data insights, data visualization, statistical analysis, Applied Statistics, Machine Learning, Deep Learning, and AI.

An industry striving to implement a certain set of modules from the above tasks or the entire stack of tasks has numerous tools to get their work done.

Whenever there is an allusion to Data Science and its related happenings around the tech world, one language gets instantly mentioned – Python. It has been repeatedly observed and proved that Python’s compatibility and easy-to-use syntax make it the most popular language in the Data Science realm. Hence there are various Python libraries used for data science extensively.

Python for Data Science has been a tool of choice for new learners, for people who are researching and exploring things, and for the industry-level implementations of the Data Science stack.

Python as a Data Science of choice

Python is an open-source, object-oriented, and general-purpose scripting language – capable of addressing problems and implementing the methodologies involved in a Data Science stack.

Suppose we start to catalog the things that help Python to be the tool of choice. In that case, many features come into the picture – open-source, ease of coding, scripting potential, portability, compatibility, platform independence, and community support – all have played a major role in its rise as one of the top preferred tools.

But one feature that has made the most difference is Python’s Modularity.

Python ecosystem comprises thousands of modules and packages designed to perform tasks, improve existing tasks and add additional capabilities to the general-purpose language that Python is.

Python’s Modularity

Python code can be split across various callable and reusable files called modules, which have an extension of .py, and these files contain reusable functions, classes, variables, etc. Modules can be further grouped inside a folder which is known as the Python package. In a python code, we can import both modules and packages and use their functions.

Packages (and modules) have been instrumental for Python’s versatile usage and application areas. The best part is that many of these packages are developed and maintained by a strong community of enthusiastic users and backed by good corporate support from companies like Google, Facebook, Apache, Microsoft, etc.

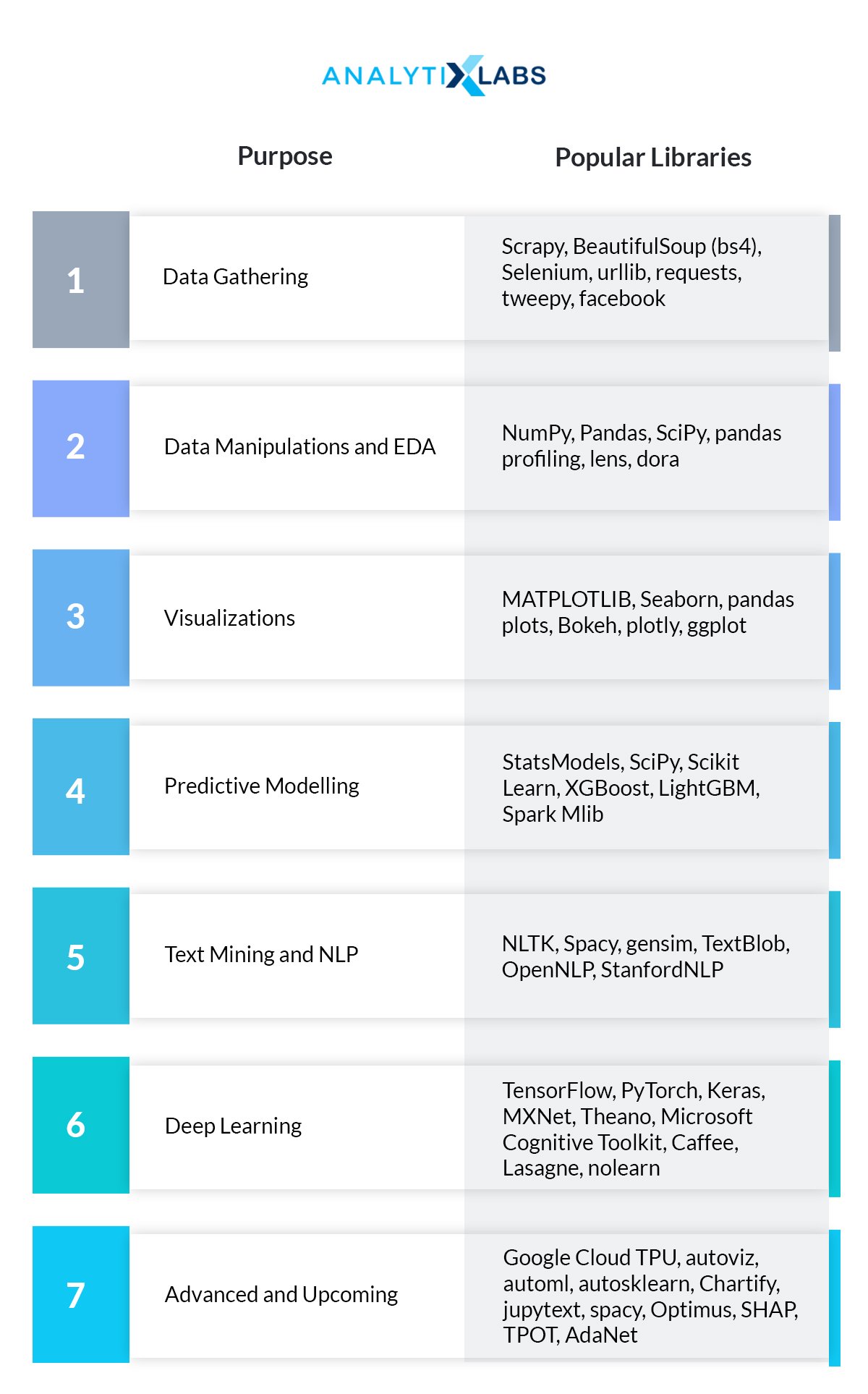

Python Libraries Used for Data Science

In this section we have described the list of important Python libraries used for data science.

Data Gathering

Data collection or gathering is the task of collecting necessary data to proceed with the analysis. This data can include auto-generated data like transactions, customer-related data, and sample data collected via various sampling principles. In this section, we focus on those Python libraries instrumental in collecting data from the Internet.

Scrapy

It’s a high-performance, open-source Python framework meant for large-scale web crawling and scraping. It comes pre-loaded with much common functionality, readily available to the programmer, reducing the coding workload. The user creates a framework known as a spider, which can be deployed on one’s server.

Beautifulsoup

bs4 is a Python library that extracts or pulls information from an HTML/XML file. It makes use of a secondary tool to pull a website’s source code, converts it into a bs4 object (an l-XML or html5lib parser), and then using its attributes, one can extract data out of it.

The bs4 is light and portable instead of the heavier Scrapy, but it’s equally effective and popular.

Data Manipulations and EDA

We all often hit a lot of roadblocks related to the inconsistency in the data. Data can be deficient, erroneous, missing, or unrelated and must be corrected and modified. In this process, we would need to impute missing values, remove outliers, drop redundant records, ensure proper data types, and many more.

Once this hurdle is crossed, we start to explore our data to discover patterns and get a good amount of summarisations of the past data and understand both a single variable and its relationship with one or more remaining variables (hypothesis testing) with the help of summary statistics and graphical representations. This process is commonly referred to as Exploratory Data Analysis.

NumPy

NumPy (Numerical Python) is among the most popular NumPy (Numerical Python) among the most popular python libraries used for data science. It is a signature package for mathematical functions in Python. It supports multi-dim arrays, matrix operations, linear algebra, and fundamental mathematics.

It uses an array object called ndarray – with faster “locality of reference” accessing elements, vectorization, and broadcasting (vectorized mathematics) functions – an added advantage in Data Science operations where speed and resources are paramount.

In short, NumPy is Python’s version of MATLAB. Some of the important tasks in Numpy include but are not limited to –

- Array operations – slicing, filtering etc

- Basic arithmetic operations in arrays

- Basic statistics – central tendency, dispersion, skewness

- Importing and exporting files

Pandas

The major limitation of Python for the data science stack is the lack of native support and vanilla code in dealing with relational data. Pandas is one of the many data analysis libraries in python. This flaw is overcome by pandas, an open-source and flexible data manipulation tool built on top of NumPy and supports relational data. It provides two major data structures – a homogenous dimensional Series, and two, a heterogeneous and two-dimensional, labeled data structure called DataFrame.

Pandas can accomplish all the data manipulation and EDA tasks. Some of the important tasks of pandas are –

- Data importing using its pd.read_xxxx() function

- Filtering, sorting, and removing duplicates

- Selecting, adding, and removing rows and columns

- Imputing missing values, capping outliers

- Data merging and concatenations

- Data reshaping

- Aggregations (groupby())

- Data visualization using its attribute plot(kind=”…”) function built on-top of matplotlib.

- Exporting data, and many more

SciPy library

SciPy refers to the ecosystem of Python libraries and software – NumPy, SciPy, IPython, SymPy, pandas, Matplotlib – used for scientific computing. SciPy library is a part of this ecosystem. It

provides interpolation, statistics, optimization, integration, and linear algebra to be performed on NumPy ndarray and Pandas Series/DataFrame.

The most prominent use of the SciPy (scipy) library is seen in Hypothesis Testing, among other mathematical functions. It provides routines for t-tests, f-test, chi-square tests, etc., via the scipy—stats module.

MATPLOTLIB

Matplotlib is a Python library for creating good quality 2-D visualizations in Python. It’s built over the NumPy library, and it is a part of the broader SciPy ecosystem. Introduced in 2002, matplotlib is the fundamental package. Its capability to generate various graphs – scatter, bar/column graphs, pie charts, Whisker Plots, Histogram – has been instrumental in producing graphics in Python.

Matplotlib’s pyplot module is a collection of functions that creates and modifies various plots. Each pyplot function adds some features to graphics: e.g., creating a plot, creating a sub-plotting area, decorating the plot with labels, adding some text in a plotting area, etc.

Some of the common tasks that are done using pyplot are –

- Define figure, X, and Y axes, multiple plots etc.

- Designated function to get graph e.g. pyplot.scatter(), pyplot.bar(), pyplot.pie() etc.

- Adding axes labels, axes ticks, define axes limits etc.

- Adding titles, sub-titles, sub text.

- Add text/labels over the plot

- Viewing the plot on a interface with .show() function

- Exporting or saving the image as a png/jpg file etc.

Seaborn

It is a graphing library based on matplotlib, which uses the pyplot canvas and modification functions, but it has its own literature of routines. The functions of seaborn are more user-friendly than that of the pyplot.

Since seaborn uses matplotlib internally, it can produce all the fundamental plots needed for EDA and machine learning tasks and add more plots to the arsenal. Apart from the basics, the plots available in seaborn are heatmaps, bubble charts, correlograms, violin plots, word clouds, spider charts, tree plots, Venn diagrams, etc.

Pandas plots

This is a special mention in the visualization section where one can use DataFrame. Plot () function to easily get graphs from the data. Pandas plots use all the pyplot function names in the kind argument (plot () to get various graphs like a bar, pie, scatter, line, etc. The sole requirement here is that the DataFrame needs to have labeled rows and columns or maybe a pivoted form (wide format) data, and we can have an easy plot done.

Pandas Profiling

Pandas profiling is an open-source Python module using exploratory data analysis using just a few lines of code. It generates interactive reports in a lucid web-based format which can be exported or embedded into a web page or an IPython notebook.

Pandas profiling provides various analyses like type, unique values, missing values, quantiles, central tendencies, measures of dispersion, sum, skewness, frequent values, histograms, correlation between variables, count, heat map visualization, and other univariate analysis.

Fewer lines of code can generate a rich analysis report. Still, pandas profiling also gives suitable warnings like missing values, cardinality, zero values, etc., which can be leveraged for machine learning tasks.

Predictive Modeling (Applied stats + ML)

This section deals with predicting future data, or Predictive Modelling, and the Python packages that play a role in it. Predictive modeling is broadly classified into 4 categories -Regression, Classification, Forecasting, segmentation, and sometimes a mixed problem. Python has strong libraries – Scikit Learn, statsmodels, Tensorflow, etc. – that can effectively deal with the above problems.

StatsModels

StatsModels provides classes and functions for implementing and estimating various statistical models for the four processes and conducting statistical tests. It is one of the data analysis libraries in python. Built on top of NumPy and SciPy, it can perform Linear Regression, Generalized Linear Models, and Generalized Estimating Equations. It also provides graphs by making use of the MATPLOTLIB package.

StatsModels supports specifying inputs to their functions using R-style formulas and returns R-style outputs, along with extensive compatibility and outputs for pandas DataFrames. In a sense, switching to Python is much easier for an existing R user. It is easy to create models; its implementation is trouble-free with just a few lines of code, and most important, it presents the output in a manner that is easier to read and understand.

Scikit-learn

The scikit-learn is a Python module based on NumPy and SciPy, which contains simple but efficient tools for predictive data analysis. Scikit-learn provides a range of supervised and unsupervised learning algorithms via a consistent interface in Python and cross-validation functionality to test the estimations.

Scikit-learn has numerous features and focuses on production-level concerns such as code quality, collaboration, documentation, and performance. Some of the models provided by scikit-learn include but are not limited to:

- Feature extraction

- Feature selection: for identifying meaningful variables for supervised learning.

- Dimensionality Reduction: for reducing the number of variables in data using algos like Principal component analysis etc.

- Supervised Models for Regression and Classification based machine learning models.

- Tuning the parameters

- Ensemble methods

- Clustering: for grouping the data into unknown groups by using algorithms like KMeans etc.

- Cross Validation: for estimating the performance of supervised models on unseen data

- Manifold Learning: For summarising and depicting complex multi-dimensional data

- Datasets: for test datasets to investigate model behaviour

XGBoost

An open-source library provides an interface to gradient boosting framework for various languages, including an all-explicit package for Python. It is primarily designed for speed and performance.

XGBoost optimizes the standard GBM algorithm using Parallelization, Regularization, Tree Pruning, weighted Quantile Sketch, and cross-validation. It also has facilities for Continued Training where one can apply further boosting on the already fitted model on new data.

The Python interface for XGboost is provided using the xgboost module, which can automatically perform parallel computation on a single machine that is faster than other GBMs.

LightGBM

Although the XGBoost is built for performance, it has limitations in larger data. This flaw is considerably solved by LightGBM – a distributed algorithm for GBM -, but the difference is that it splits a tree leaf-wise rather than the standard level-wise approach. This reduces processing overhead and reduces the losses caused by the latter, thereby increasing the accuracy.

Apart from the leaf-wise approach, another feature of LightGBM is that it automatically bins a continuous feature to speed up the training process and drastically reduces memory usage. In short, with its ability to fast-train large data and with increased accuracy, LightGBM can be a potential alternative to XGBoost.

Natural Language Processing

NLP refers to a set of processes that work on text data, especially data containing human language (reviews, tweets, transcripts, etc.). An NLU interface usually contains text processing functions for parsing text-based various grammatical components and semantics so that a computer can ultimately understand the human text.

Further down the lane, this processed data can be leveraged for applying various statistical models such as classification, segmentation, topic discovery, etc. Some of the text-processing tasks and functions include:

- Cleaning (spell checks, redundant words, remove unused symbols, etc.)

- Tokenization

- Standardization

- Removing stopwords

- Parts of speech tagging

- Stemming and Lemmatization

- Getting usable matrices like Document Term Matrix, TF-IDF, Feature Vector, etc.

- Creating advanced data structures like Word Vectors (Word2Vec, FastText, etc).

- Statistical and Machine Learning algorithms, viz., Naive Bayes, Support Vector Classifier, kNN Classifier, etc., applied to solve various business problems.

NLTK

Natural Language Tool Kit is the most fundamental library in Python built for the sole purpose of text preprocessing and NLU. It contains robust tokenization, parsing, stemming, tagging, classification, and lemmatization functions. It also contains interfaces and APIs to many corpora (text repositories) and lexical resources, giving the user easy access and building a tool to understand human language.

NLTK, although a robust library, is somehow limited to academics and experimentation, and other modules can prove to be better than it on a production-level implementation.

SpaCy

SpaCy is the latest add-on to the NLP realm. It is built for faster text processing and efficient implementation of the NLP stack. NLTK works traditionally because it treats and processes text like a regular string. SpaCy, on the other hand, uses Word Vectors, which drastically reduces the processing time.

SpaCy follows a pure Object-Oriented approach. It returns a “document object” after processing a text instead of a string, which has tons of functionality via the associated attributes and functions. Despite the speed and power, SpaCy is a bit limited in terms of language support, as it can only support seven languages, as opposed to NLTK, which supports many languages.

Gensim

Gensim is a Python library for NLP but distinctly built for topic modeling, document indexing, and similarity retrieval on large corpora. It has extensive support for word vectors – Word2Vec, FastText, etc., just like SpaCy has. Gensim is designed to use word vectors and can process a text without loading it entirely in the memory.

Gensim has all the pre-processing functions of NLP on the bare basics and has good support for a bag of words, n-grams, etc.

TextBlob

TextBlob is a Python library for text processing that provides a simple API for diving into common NLP tasks such as parts of speech tagging, named entity recognition, etc. But what makes TextBlob stand out more than the existing libraries are two of its high-valued functionalities – sentiment analysis and classification and the other is language translations powered by Google Translate. It can work with text as normal strings and has routines to integrate with WordNet.

Deep Learning and Artificial Intelligence

Deep Learning is a subset of Machine Learning that involves the computer “learning” real-world activities, tasks, actions, etc., using large interconnected data. Deep learning enables computers to perform those tasks that usually require human intelligence – where Neural Networks are trained by example to understand the underlying decision-making process. Typically, the algorithm learns to perform traditional classification and segmentation tasks using images, videos, text, and sound.

For effective deep training, we require a language that is capable of Neural Networks and has a proven success rate in parsing large unstructured data. This is where Python helps – with its packages and data handling, lesser code complexity, easier implementations, portability, and code collaborations. Let’s walk through some of the indispensable packages available for deep learning in Python:

Tensorflow

TensorFlow is an open-source library from Google that encompasses mathematical methods, machine learning algorithms, and Neural Network implementations required for deep learning and AI. It has the mentioned groundbreaking functions and has streamlined architecture that enables the code to be deployed on various CPUs, and GPUs, or maybe, facilitate AI as a service.

Tensorflow architecture enables users to train their data in their environment and deploy it on a network, cloud, or mobile device. One can train the model in different machines as well and then deploy it at multiple destinations. It comes bundled with TensorBoard, a tool to monitor the process stack and watch out for anomalies visually.

TensorFlow simpler workflow and accessibility, availability of pre-trained models, datasets, etc., make it one of the most preferred libraries for Deep Learning.

Keras

Keras is an open-source neural network library built using Python. While TensorFlow deals with many tasks, Keras focuses on high-level, efficient Neural Network APIs. Hence, it is more user-friendly than some of the available DL libraries. Keras has the ability to run on top of various libraries like TensorFlow, R, Microsoft Cognitive Toolkit, Theano, etc.

By its added ease of implementation, Keras is used in academics, research, experimentation, and various competitions held globally. However, one prominent application area where Keras finds its place is in tool development. Keras helps build a stand-alone implementation system, like a deep models-based product or tool for solving a business problem and implementing that as a service.

PyTorch

PyTorch is an open-source machine learning library built using the Torch library developed and maintained by Facebook’s AI Research lab. It is primarily used for computer vision and NLP via its CNN and RNN implementations.

PyTorch is straightforward to implement with its Pythonic way of coding. Hence, it provides improved developer productivity, has easy debugging features, and reinforced support for parallel data processing.

Model optimization is better with PyTorch than others because of its Dynamic Computational Graphs – where the network behavior can be changed during runtime. The process is never left inside a black box because the users can gauge and access every step in the workflow.

PyTorch is as user-friendly as Keras is and relatively lighter than a heavy TensorFlow to perform rapid prototyping. However, it falls short of building production-ready and deployable solutions.

Apache MXNet

Apache MXNet is an open-source deep-learning API, which is used to train, and deploy deep neural networks. Apache Foundation develops it. It is a light, flexible, scalable build supporting CNN, RNN, and LSTM models. MX stands for mix and maximize.

With its Gluon library, MXNet provides a high-level interface that makes it easy to prototype, train, and deploy deep learning models without compromising on the training speed – hence adding a greater performance. As a matter of fact, MXNet-Gluon is proven to be at least 1.5 times faster than TensorFlow.

The prominent application area of Apache MXNet is seen in IoT-based analytics. Its Lazy Evaluation, pruning, quantization, compression, interoperability with Amazon AWS, and other optimization features like acceleration software library such as Intel MKL or NNPACK makes it IoT-friendly.

Theano

Many mathematical operations in the Data Science stack in Python are highly dependent on NumPy, and Deep Learning is no exception. Theano is an optimizing compiler for manipulating and evaluating mathematical expressions, especially matrix data structures, using NumPy codes. Its ease of evaluating mathematical models makes it a tool of choice to build wrapper libraries around Keras and TensorFlow.

Microsoft Cognitive Toolkit

Microsoft Cognitive Toolkit (CNTK) is an open-source library that comprises all the basic building blocks to build a neural network. One of the major differences between Cognitive Toolkit and other libraries is that it has elaborate low-level APIs and high-level APIs. The high-level APIs are meant for an end-user level, easy implementation, and the core low-level APIs can be modified and restructured to implement neural nets better.

CNTK is known for its state-of-the-art batch loading of datasets, making it easy to handle large volumes of data without spending many resources. It can interface with Keras and be well used for production-ready applications in image and video processing, text processing, etc.

MS Cognitive Toolkit is reinforced by its ability to integrate with Azure apps and has APIs for C++ and Java deployment, making it a highly scalable interface. Microsoft Support heavily supports CNTK, and it is a preferred deep learning implementation for organizations that work on Azure Shop.

Final Thoughts

Given the vastness of Python’s capabilities, the extensive development efforts, and the never-ending scope of Data Science in general, it would be rather unfair to state that the above-mentioned packages are absolute boundaries in the Data Science stack. The Python libraries used for data science are vast and comprehensive.

Also, we have numerous helper packages in the stack which can provide quick but crucial help, but owing to its limited usage, we often don’t include them in the discussions.

A beginner to Data Science learning Python can consider that the overwhelming extent of packages is never a reason to worry about. Python code inherently is easy, and the packages are designed to keep their implementation lucid and straightforward.

Since 2019, extensive efforts have been made to democratize AI, auto ML, auto visualizations, and augmented AI. Emerging packages like autoviz, automl, autosklearn, Chartify, jupytext, spacy, Optimus, SHAP, TPOT, and AdaNet give us promising hope in the field of AutoML and auto visualizations – facilitating us to get rid of some of the clichéd and labor-intensive tasks.

If Data Science is a complex art of getting actionable insights from various forms of data, then Python is the artist.

You may also like to read:

1. The Best Machine Learning Tools: Python vs R vs SAS

2. 10 Steps to Mastering Python for Data Science | For Beginners

![Learning Logistic Regression – Applications using Python [Part 2- Practical]](https://www.analytixlabs.co.in/blog/wp-content/uploads/2023/11/Learning-Logistic-Regression-370x245.jpg)