This article is a beginner’s guide to artificial intelligence. AI is a complex discipline, and this artificial intelligence tutorial will help you understand the steps you can take to learn AI, along with a brief history of the field and its various concepts, levels, application areas, pros, and cons.

Introduction: A Brief Overview of AI and How To Learn It

Artificial Intelligence is one of those phenomena that lack a concrete definition; what it means depends on whom you ask. Still, in its simplest sense, Artificial Intelligence, or simply AI, refers to machines mimicking human thinking, intelligence, and to a certain degree behavior, and thereby helping solve various problems faced by mankind as a whole.

AI is widely used today, and an artificial intelligence tutorial can greatly help in learning about this discipline. As for “how to learn AI?”, there is no straightforward, tried-and-tested path to becoming an expert; broadly, however, one generally needs a STEM background or at least a decent knowledge of statistics, mathematics, and computers.

Besides computer programming and advanced mathematics, familiarity with domains such as Data Science, particularly machine learning and predictive modeling, can greatly help individuals shape their understanding of AI.

AnalytixLabs is the premier Data Analytics Institute specializing in training individuals and corporate teams in industry-relevant Data Science knowledge and its related aspects. Its faculty includes McKinsey, IIT, IIM, and FMS alumni with a high level of practical expertise. With a long presence in the education sector and a wide client base, AnalytixLabs greatly helps young aspirants build careers in Data Science.

What Is AI?

As mentioned earlier, there are many definitions of AI. Still, for a broad definition, we can use the one provided by the fathers of AI, Marvin Lee Minsky and John McCarthy, who considered AI to be computer systems that can perform tasks that would require intelligence if done by humans.

Thus, these systems should be able to demonstrate certain features of human intelligence, including logical reasoning, problem-solving, understanding knowledge that is not presented in a typical binary or structured form, and applying distinctly human concepts such as perception and social norms.

However, one must always remember that while the popular understanding of AI still comes largely from sci-fi movies, where humanoid robots represent the epitome of Artificial Intelligence, which computer systems actually count as AI has always depended on the era. Early computer systems that could recognize text in images were considered AI; today, a system must be much more sophisticated to be considered AI.

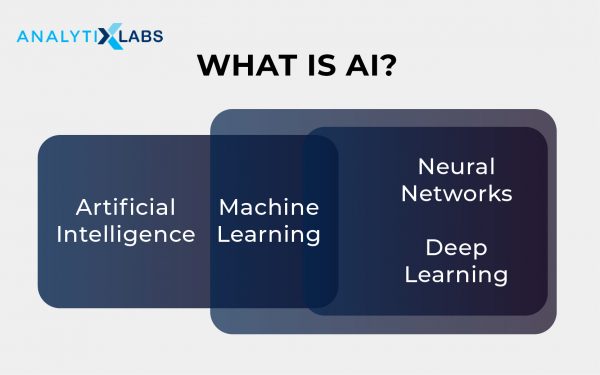

Whenever a question such as “what is AI?” is asked, machine learning comes up somewhere in the answer, and for good reason. Although the meaning of machine learning is itself debated, machine learning and Artificial Intelligence are undeniably intertwined and closely related concepts, if not parts of each other (though that, too, is debatable).

Machine Learning means letting a machine learn to accomplish a task instead of hardcoding and manually programming every rule. This is achieved by providing large amounts of historical, well-labeled data, i.e., data containing both the predictors and the target variable. The machine can then establish a pattern between the numerous inputs and the target label and use it to make “wise,” correct predictions and actions. AI, while also using huge amounts of well-labeled data, typically establishes this pattern with a different mechanism: an architecture that mimics the human brain.
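To make the idea of learning from labeled data concrete, here is a minimal Python sketch of one very simple learner, a 1-nearest-neighbour classifier (our choice for illustration, not a method the article prescribes). The fruit measurements are made-up, hypothetical data. No classification rule is hardcoded: the program labels a new example by copying the label of the most similar historical example.

```python
from math import dist

# Hypothetical labeled training data: (features, label).
# Features are (weight in grams, surface smoothness on a 0-10 scale).
training_data = [
    ((150.0, 9.0), "apple"),
    ((170.0, 8.5), "apple"),
    ((130.0, 2.0), "orange"),
    ((140.0, 1.5), "orange"),
]


def predict(features):
    """Return the label of the closest training example (1-nearest neighbour)."""
    _, label = min(training_data, key=lambda example: dist(example[0], features))
    return label


print(predict((160.0, 8.0)))  # apple
print(predict((135.0, 2.5)))  # orange
```

Adding more labeled examples changes the predictions without touching the code, which is the essential difference from hardcoded rules.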

This architecture often mimics the functioning of biological neurons by creating neural networks that learn to produce answers. Technically, the patterns are established deep within these networks, across many stacked layers, which is why this form of machine learning is known as deep learning. Its creators often cannot comprehend exactly how the system reaches a conclusion (even when it is correct): the way deep learning approaches a problem reveals little about what it “thought” before answering, leaving the system a black box to its own creators. This opacity is the source of much speculation and skepticism, and the source material for the many movies and novels in which AI-powered systems take over mankind.

Even with AI at its current level, most experts believe that for AI to reach its full potential, it must gain “common sense” so that it can act on and solve the complex problems humans handle on a day-to-day basis.


Brief History of AI

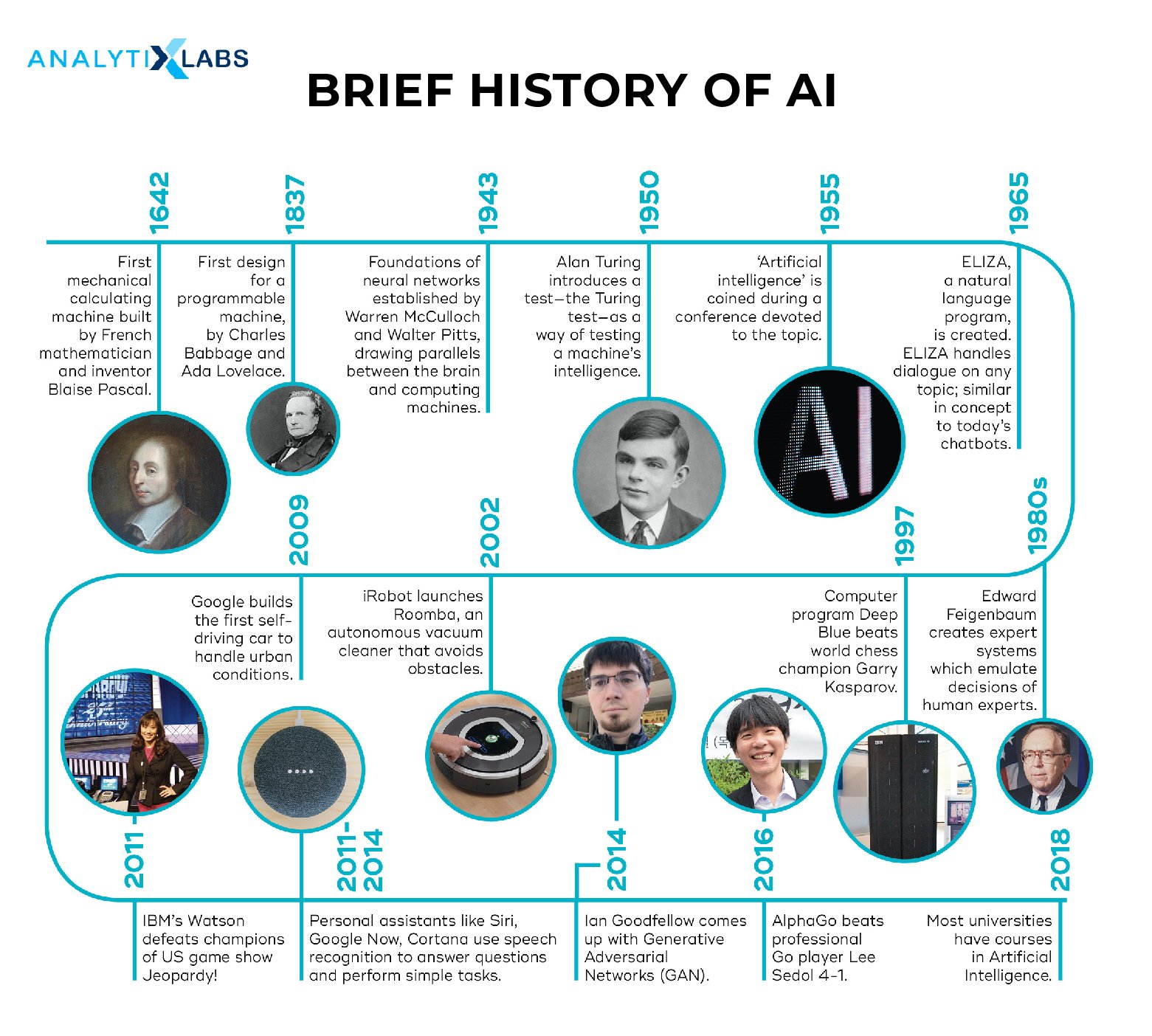

The history of artificial intelligence is an interesting one, especially considering how long the idea of AI has been with us. Glimpses of the concept can be found in Greek, Chinese, and Egyptian myths. The term “Artificial Intelligence,” however, was coined in 1956 at Dartmouth College in New Hampshire. If Alan Turing is considered the father of computer science, then (as mentioned earlier) the fathers of Artificial Intelligence are Marvin Minsky and John McCarthy. At MIT, they worked on various AI projects, and that work grew into what is now the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). Since then, the field has achieved milestone after milestone. While almost every year has contributed something to the growth of AI, the following are the most important years in its development:

1961: The creation of MIT’s AI laboratory was a major milestone, preceded by Alan Turing’s work on early computers in the 1940s, Isaac Asimov’s Three Laws of Robotics (popularized by his 1950 collection I, Robot), and Christopher Strachey’s checkers-playing program in 1951. In 1961, however, came the first large-scale industrial use of robots, when General Motors introduced them on its assembly lines to ease the work there.

1964: An early milestone in natural language understanding comes this year, when Daniel Bobrow develops STUDENT for his MIT Ph.D. dissertation (“Natural Language Input for a Computer Problem Solving System”).

1965: Building on this progress in NLP (Natural Language Processing), Joseph Weizenbaum develops ELIZA, an early “interactive chatbot” that can communicate with users in English.

1969: Marvin Minsky and Seymour Papert publish their famous book “Perceptrons: An Introduction to Computational Geometry,” which points out the limitations of the then-popular single-layer neural networks, including their inability to solve the simple XOR problem.
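The XOR limitation can be seen in a few lines of Python. This sketch (our illustration, not Minsky and Papert’s own formulation) trains a single-layer perceptron with the classic perceptron learning rule; the learning rate and number of epochs are arbitrary choices. The perceptron fits AND, which is linearly separable, but it cannot fit XOR, because no single straight line separates XOR’s positive cases from its negative ones.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Train a single-layer perceptron with the classic perceptron learning rule."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = target - output
            # Nudge the weights and bias toward the correct answer.
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    """Fraction of samples the trained perceptron classifies correctly."""
    correct = sum(
        (1 if w[0] * x1 + w[1] * x2 + b > 0 else 0) == target
        for (x1, x2), target in samples
    )
    return correct / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b = train_perceptron(data)
    print(name, accuracy(data, w, b))
```

However many epochs are used, XOR never reaches 100% accuracy with a single layer; a hidden layer (trained, for example, with backpropagation) is needed.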

1974: The Stanford AI Lab’s self-driving vehicle, the Stanford Cart, can detect obstacles in its path and maneuver around them without hitting them. However, its final form is reached only in 1979.

1974 – 1979: The first AI winter hits, mainly due to the limited memory and processing power available at the time. Government funding for AI drops, and general interest in the field declines in academia as well.

1981: Japan launches its “Fifth Generation” computer project, aimed at computers that can hold conversations, perform translations, and reason like humans. The project spurs major new AI funding in the US and Britain, thus ending the first AI winter.

1983: XCON (eXpert CONfigurer), an early rule-based expert system developed in 1978 to assist in ordering DEC’s VAX computers, reaches its peak of 2,500 rules, and expert systems are adopted by a huge number of corporations around the world. XCON becomes one of the first widely used computer systems to apply AI techniques to real-world problems, such as selecting components based on customers’ requirements.
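Rule-based expert systems such as XCON work by chaining if-then rules. The Python sketch below is a toy forward-chaining engine; the rules and fact names are invented for illustration and are not taken from XCON, whose real rule base was far larger. The engine keeps firing any rule whose conditions are all satisfied until no new facts can be derived.

```python
# Each rule: (set of facts that must all hold, fact to add when they do).
# These rules are hypothetical; they are not XCON's real rules.
RULES = [
    ({"order:database_server"}, "needs:large_disk"),
    ({"order:database_server"}, "needs:extra_memory"),
    ({"needs:large_disk"}, "component:disk_controller"),
    ({"needs:extra_memory", "order:rack_mount"}, "component:memory_expansion_cabinet"),
]


def forward_chain(facts, rules):
    """Repeatedly fire every rule whose conditions are satisfied until nothing new is derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


result = forward_chain({"order:database_server", "order:rack_mount"}, RULES)
components = sorted(f for f in result if f.startswith("component:"))
print(components)
# ['component:disk_controller', 'component:memory_expansion_cabinet']
```

Because the knowledge lives entirely in hand-written rules, every new product or requirement means more rules, which is part of why such systems became costly to maintain.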

1984: At the annual meeting of the Association for the Advancement of Artificial Intelligence, held at the University of Texas, Roger Schank and Marvin Minsky warn the AI community of another AI winter: the ongoing AI bubble, they argue, will burst, and research funding and industry investment will drop sharply.

1986: David Rumelhart, Geoffrey Hinton, and Ronald Williams popularize backpropagation, a learning technique for multi-layer neural networks, in their paper “Learning representations by back-propagating errors.”

1987 – 1993: As Schank and Minsky had warned, the second AI winter hits. Slow and unwieldy expert systems become too costly for corporations, which increasingly turn to personal computers running Microsoft and Apple operating systems to meet their needs. The Defense Advanced Research Projects Agency (DARPA), the United States Department of Defense’s research and development agency for emerging military technologies, stops funding AI projects after concluding that AI will not be the catalyst for the next wave of computing.

In 1988, Minsky and Papert publish an expanded edition of their book Perceptrons, citing the AI community’s repetition of old mistakes as the reason for the field’s general decline. Meanwhile, at AT&T Bell Labs, backpropagation is applied to a multi-layer neural network to recognize handwritten digits. However, limited processing power remains a problem, as training the network takes 3 days.

In 1992, Bernhard Boser, Isabelle Guyon, and Vladimir Vapnik (who created the original SVM algorithm in 1963) find a way to apply the kernel trick to Support Vector Machines (SVM) to solve non-linear classification problems. Much simpler and faster than neural networks, yet capable of tasks such as image classification, SVMs draw attention away from neural network research.
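The kernel trick mentioned above can be checked numerically. For 2-D points, the degree-2 polynomial kernel k(x, y) = (x · y)² equals the ordinary dot product after mapping each point into a 3-D feature space with φ(v) = (v1², √2·v1·v2, v2²). This is one common kernel chosen for illustration; the sketch does not reproduce Boser, Guyon, and Vapnik’s paper. An SVM that uses k can therefore work in the richer feature space without ever constructing it.

```python
from math import isclose, sqrt


def phi(v):
    """Explicit degree-2 feature map for a 2-D point: (v1^2, sqrt(2)*v1*v2, v2^2)."""
    v1, v2 = v
    return (v1 * v1, sqrt(2) * v1 * v2, v2 * v2)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(u, v):
    """Degree-2 homogeneous polynomial kernel k(u, v) = (u . v)^2, computed in 2-D."""
    return dot(u, v) ** 2


x, y = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(y))  # dot product after mapping into 3-D feature space
via_kernel = poly_kernel(x, y)  # same quantity, never building the 3-D vectors
print(explicit, via_kernel, isclose(explicit, via_kernel))
```

For higher-degree kernels the explicit feature space grows quickly, while the kernel remains a single dot product and a power, which is where the trick pays off.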

1995: Richard Wallace develops A.L.I.C.E., a chatbot inspired by ELIZA but much more advanced, thanks to the availability of the World Wide Web.

1997: Sepp Hochreiter and Jürgen Schmidhuber develop Long Short-Term Memory (LSTM), a recurrent neural network architecture used for tasks such as handwriting and speech recognition. The same year, IBM’s chess-playing computer Deep Blue beats the reigning World Chess Champion, Garry Kasparov, at a widely publicized event.

1998 – 2000: Multiple robots are developed around the world. In 1998, Furby is released as one of the first popular robotic pets, and in 1999, Sony develops its robotic dog, AIBO. In 2000, Cynthia Breazeal develops Kismet at MIT, a robot that can recognize human emotions, while in the same year Honda develops ASIMO, a humanoid robot capable of interacting with humans and delivering trays in restaurants.

2004: Recognizing AI’s potential, DARPA launches a challenge to design autonomous vehicles (though no one wins the competition).

2009: Google starts its project to develop a self-driving car.

2010: Narrative Science, which started as a student project, develops its prototype, Stats Monkey, which automatically writes sports stories from widely available data.

2011: IBM’s Watson, a question-answering computer program, wins the popular quiz show Jeopardy! The race to build the most sophisticated virtual assistant begins, and many assistants become mainstream, such as Facebook’s M, Microsoft’s Cortana, Apple’s Siri, and Google’s Google Now.

2015: Elon Musk and various other prominent figures pledge $1 billion to OpenAI.

2016: AlphaGo, developed by Google’s DeepMind, defeats the South Korean Go champion Lee Sedol. The same year, Stanford publishes the first report of its One Hundred Year Study on Artificial Intelligence (AI100), a long-term effort to study AI and anticipate how it will affect people.

Present: A large number of universities across the globe now offer formal academic courses in AI, making it a mainstream subject of study.

How to learn AI?

Learning any discipline can be difficult and requires a lot of hard work and dedication from the aspirant. It becomes even more challenging when the field is not as formalized as, say, theoretical physics or chemistry and is still evolving at a rapid pace. All of this is true of Artificial Intelligence, which is why there is an ever-increasing demand for artificial intelligence tutorials, especially those that cover artificial intelligence basics. There are many ways to learn, but AI is complicated for beginners to grasp, and they should focus on the following aspects.

- Knowledge about data

Everything boils down to data in artificial intelligence, as data is the source of information from which any AI-enabled system learns. Thus one first must get acquainted with data and the field of Data Science in general.

- Understanding Machine Learning and its related concepts

Once some grasp of working with data is achieved, such as data exploration, manipulation, and mining, one must understand concepts such as predictive modeling. Here, topics such as the theoretical and applied aspects of traditional and Bayesian statistics, mathematics, and numerous statistical and mathematical algorithms must be understood.

- Knowing a Tool

To apply all of the knowledge gathered at this stage, one must master a tool. AI work can be done in many tools, but one tool, Python, covers data exploration, manipulation, reporting, predictive modeling, machine learning, and deep learning. Thus, one must learn Python thoroughly and build a good foundation in it.

- Understand what AI is all about

AI for beginners is a fascinating and sometimes overhyped subject. Without a decent understanding of what AI is, an aspirant will not be able to set the right expectations. Thus, knowing about the various meanings, types, levels, algorithms, techniques, and uses of AI is a must. A simple Feed Forward Network is a good place to start, as concepts such as dense networks, backpropagation, epochs, weights, and biases will help in grasping other, more complicated AI algorithms.
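To show what weights, biases, and dense layers mean in practice, here is a minimal Python sketch of a forward pass through a tiny feed-forward network with two inputs, two hidden neurons, and one output. The weights are hypothetical, hand-picked values and the sigmoid activation is one common choice; in a real network, training over many epochs with backpropagation would adjust these numbers.

```python
from math import exp


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + exp(-z))


def dense(inputs, weights, biases):
    """One fully connected (dense) layer: each neuron computes sigmoid(w . x + b)."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters: one row of weights per neuron, one bias per neuron.
hidden_weights = [[0.5, -0.4], [0.3, 0.8]]
hidden_biases = [0.1, -0.2]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]


def forward(x):
    """Pass an input through the hidden layer, then the output layer."""
    hidden = dense(x, hidden_weights, hidden_biases)
    return dense(hidden, output_weights, output_biases)[0]


print(forward([1.0, 0.0]))
```

Every prediction is just repeated weighted sums plus biases passed through an activation; "learning" means finding weights and biases that make these outputs match the labels.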

- Exploring Resources

To learn all of the above, one can focus on each aspect individually and then thread these concepts together to become proficient in AI. These resources can take the form of dedicated books, online courses, or degrees from academic institutions. Online courses focused on AI do exist, but it is virtually impossible for a single course to cover every aspect in one go.

- Getting Involved in the community

During the learning stage, one must also get involved in the AI community. This can be done by subscribing to the AI-related news, joining online AI-based communities, attending workshops, etc. This provides a high level of exposure to individuals and helps in expanding their reach.

- Starting off with the practical application of knowledge

Once a decent amount of knowledge is achieved, one can only learn AI by applying it and solving real-world problems. One can first start with copying and implementing AI models developed by others and then reverse engineering their project in order to understand how its creator created the AI system. This way, slowly learners can know how to apply the statistical and mathematical equations, logic and concepts learned earlier. One must start with simple AI algorithms and develop models that solve non-complex problems and then work their way up towards more complicated algorithms and problems.


AI Concepts

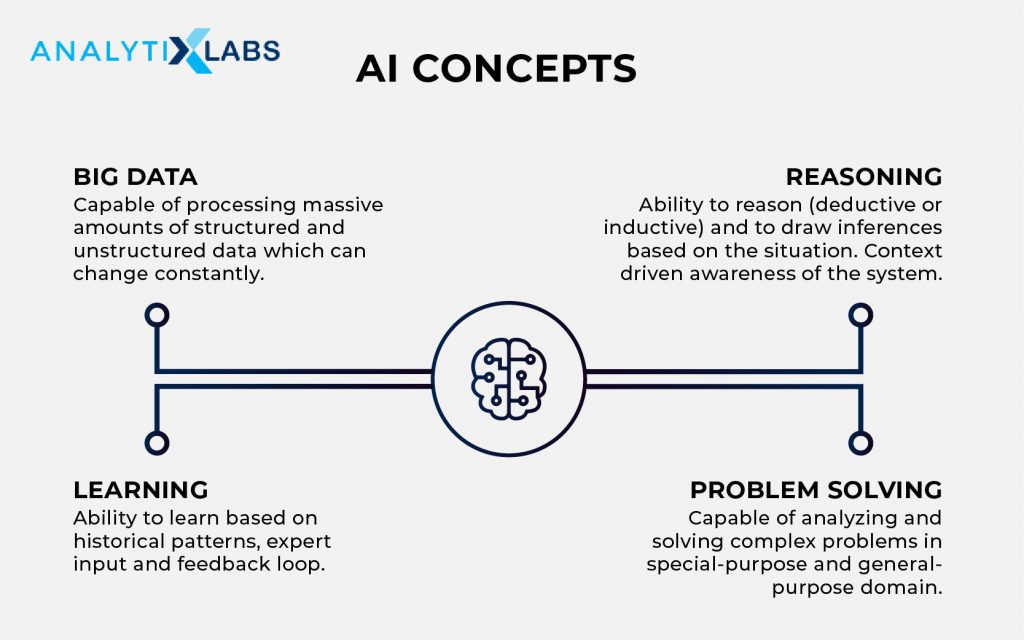

AI is not a homogeneous field; it draws on concepts from many different fields. However, there are some artificial intelligence basics, and these important AI concepts include:

- Big Data

AI needs a large amount of data to train on, so the concept of Big Data is important to know. The latest resurgence of AI has been attributed to the vast availability of data, provided mainly through the web. To manage the high velocity, variety, and volume of such data, the field of Big Data has developed dedicated architectures and methodologies.

- Machine Learning

Where Machine Learning falls relative to Artificial Intelligence is a highly contested topic. One common view is that machine learning is a subcategory of AI: when a large amount of data is fed into a system so that the machine learns how to arrive at the correct answer, machine learning is taking place. Artificial Intelligence then uses these concepts in a way that mimics the human brain.

- Deep Learning and Neural Networks

As mentioned above, a machine learning algorithm also “learns” and requires a large amount of data, but what distinguishes a typical AI system is the specific architecture used for this learning: neural networks that mimic the functioning of the human brain. Here, multiple artificial neurons are connected and stacked in layers, and each connection carries a weight. When an input is provided, the network produces a result, and to get better results, the weights are tweaked. Because there are so many neurons, we may not know how each one functions or what role it plays in the model’s ability to find the right answer. These many (deep) layers of neurons between the input and output layers give deep learning its name, and they are also why AI researchers lack a complete picture of how the system “thinks.”

There are multiple deep learning architectures, and they are often compared to different parts of the brain. The most common of these are:

- Artificial Neural Networks (or simply Deep Feed Forward Networks): often compared to the temporal lobe of the human brain, which is associated with long-term memory and decision making

- Recurrent Neural Networks: often compared to the parietal lobe, which this analogy links to short-term memory, and commonly used for sequential data such as conversations

- Convolutional Neural Networks: often compared to the occipital lobe of the brain, which is responsible for vision

However, this is not an exhaustive list. There are many other neural networks and related models, such as Long Short-Term Memory (LSTM), Autoencoders (AE), Markov Chains (MC), Generative Adversarial Networks (GAN), Liquid State Machines (LSM), and Gated Recurrent Units (GRU), each suited to specific kinds of problems.
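The core operation behind Convolutional Neural Networks is sliding a small grid of weights (a kernel or filter) across an image. The Python sketch below uses a made-up 4x4 image and a hand-written vertical-edge kernel; in a real CNN the kernel values are learned, and many such filters are stacked in layers. As in most deep learning libraries, the operation is technically cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution as used in CNNs (no padding, stride 1, kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge detector; a trained CNN would learn such values itself.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, kernel))
# [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The output is large only where dark meets bright, which is how early CNN layers pick out edges that later layers combine into shapes and objects.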

- Backpropagation

It is backpropagation that allows an AI system to “learn.” After the network makes predictions (for example, over one epoch of training data), the error is calculated and propagated backward through the network, and each weight is updated in the direction that reduces that error. Partial derivatives and the chain rule are what make this possible.
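Here is a minimal Python sketch of backpropagation for a tiny network with two inputs, two hidden sigmoid units, and one sigmoid output, trained on the XOR data from the history section. The starting weights, learning rate, epoch count, and squared-error loss are hypothetical choices for illustration. gradients() applies the chain rule from the output error back to every weight, and step() performs one epoch of full-batch gradient descent. Whether the network fully solves XOR depends on the starting weights; the point is how the error flows backward.

```python
from math import exp


def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


# XOR dataset: (inputs, target).
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def init_params():
    """Hypothetical, hand-picked starting weights for a 2-2-1 network."""
    return {
        "W1": [[0.5, -0.6], [-0.4, 0.7]],  # W1[j][i]: weight from input i to hidden unit j
        "b1": [0.1, -0.1],
        "W2": [0.3, -0.2],  # W2[j]: weight from hidden unit j to the output
        "b2": 0.0,
    }


def forward(p, x):
    h = [sigmoid(p["W1"][j][0] * x[0] + p["W1"][j][1] * x[1] + p["b1"][j]) for j in range(2)]
    y = sigmoid(p["W2"][0] * h[0] + p["W2"][1] * h[1] + p["b2"])
    return h, y


def loss(p):
    """Half squared error summed over one epoch (the whole dataset)."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in DATA)


def gradients(p):
    """Backpropagation: apply the chain rule from the output back to every weight."""
    g = {"W1": [[0.0, 0.0], [0.0, 0.0]], "b1": [0.0, 0.0], "W2": [0.0, 0.0], "b2": 0.0}
    for x, t in DATA:
        h, y = forward(p, x)
        # dL/dz_out = dL/dy * dy/dz_out = (y - t) * y * (1 - y)
        delta_out = (y - t) * y * (1 - y)
        g["b2"] += delta_out
        for j in range(2):
            g["W2"][j] += delta_out * h[j]
            # Error sent back to hidden unit j, times the sigmoid derivative at that unit.
            delta_h = delta_out * p["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += delta_h
            for i in range(2):
                g["W1"][j][i] += delta_h * x[i]
    return g


def step(p, lr):
    """One epoch of full-batch gradient descent: move every weight against its gradient."""
    g = gradients(p)
    p["b2"] -= lr * g["b2"]
    for j in range(2):
        p["W2"][j] -= lr * g["W2"][j]
        p["b1"][j] -= lr * g["b1"][j]
        for i in range(2):
            p["W1"][j][i] -= lr * g["W1"][j][i]


params = init_params()
print("loss before training:", round(loss(params), 4))
for _ in range(2000):
    step(params, lr=0.5)
print("loss after training:", round(loss(params), 4))
```

Deep learning libraries automate exactly this bookkeeping (automatic differentiation), which is what makes networks with millions of weights trainable.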

- Reinforcement Learning Setup

Machine and deep learning algorithms learn within a particular setup. Most typical machine learning algorithms learn in a supervised learning setup: there is labeled training/development data, and the algorithm is expected to go through all the input features and understand their connection with the label in the target variable. The reinforcement learning setup is different. There, an agent interacts with an environment and learns through “sticks and carrots”: actions that lead to good outcomes are rewarded, and actions that lead to bad outcomes are punished, all expressed mathematically as numerical rewards. Deep learning is often combined with this setup (deep reinforcement learning), as in DeepMind’s AlphaGo. In this way, reinforcement learning makes an AI system learn much as parents help a toddler learn new words or objects.
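The reward-driven setup can be illustrated with a classic minimal reinforcement learning problem, the multi-armed bandit. This Python sketch assumes a made-up environment of three actions with hidden reward probabilities. An epsilon-greedy agent (one standard strategy, chosen here for simplicity) is never told the correct action; it only receives a reward of 1 (a carrot) or 0 (no carrot) after each choice and gradually learns which action pays off best.

```python
import random

# Hypothetical environment: three actions ("arms"), each with a hidden probability
# of producing a reward. The agent never sees these numbers directly.
TRUE_REWARD_PROB = [0.2, 0.5, 0.8]


def pull(arm, rng):
    """Environment feedback: a reward of 1 (a carrot) or 0 (no carrot)."""
    return 1.0 if rng.random() < TRUE_REWARD_PROB[arm] else 0.0


def run_bandit(steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: usually exploit the best-looking action, sometimes explore."""
    rng = random.Random(seed)
    n_arms = len(TRUE_REWARD_PROB)
    estimates = [0.0] * n_arms  # the agent's learned value of each action
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = pull(arm, rng)
        counts[arm] += 1
        # Incremental average: nudge the estimate toward the reward just received.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = run_bandit()
print("estimated value of each action:", [round(v, 2) for v in estimates])
print("times each action was chosen:", counts)
```

The exploration rate epsilon trades off trying new actions against exploiting what has been learned; full reinforcement learning adds states and delayed rewards on top of this idea.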


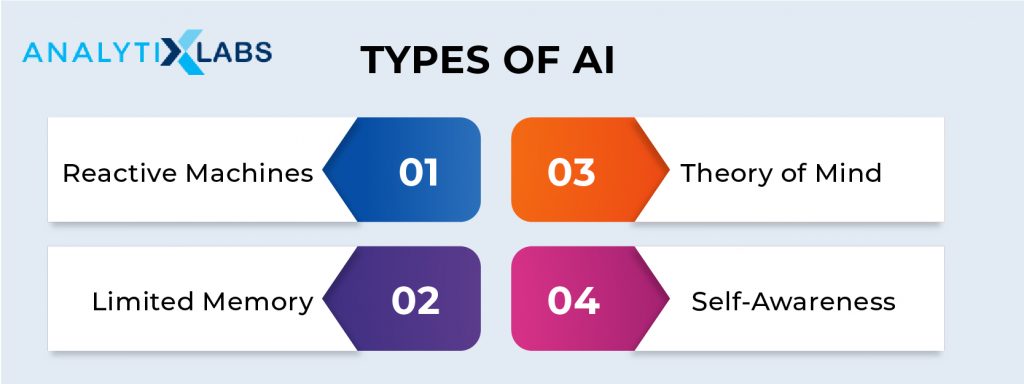

Types of AI

- Reactive

This type of AI has no memory of its own and simply responds to external inputs. Learning is therefore limited here, as these systems do not build on their experience; they are generally hard-coded, rule-based AI systems. Early AI systems were reactive, and IBM’s Deep Blue is a well-known example: it could play chess very well and predict its opponent’s plausible next moves, but it couldn’t learn from its past wins and losses. As these systems are not creative, they can be easily fooled and manipulated.

- Limited Memory

Limited Memory AI systems have a form of memory and use it to get better over time. Here, historical data plays a crucial role: as more data is received, the system becomes more efficient and better at its work. Most modern AI systems, particularly those that use deep learning architectures, work this way. This is also why tech giants such as Google increasingly provide pre-trained deep learning models: the pre-training gives the AI some memory, and other users can further train the models on their own data, expanding the “memory” of the AI system. So, whereas reactive AI systems are rule-based, limited memory systems such as DeepMind’s AlphaZero use machine learning fundamentals and are more than capable of beating reactive AI systems such as IBM’s Deep Blue. It is important to note that backpropagation, mentioned in the history of AI, is one of the major reasons such systems exist today.

- Theory of Mind

AI systems of this type would be special because they could comprehend the needs of other AI and intelligent beings such as humans. Such systems would be able to understand complex human emotions, intentions, requirements, thoughts, speech patterns, etc. This way, they would be able to integrate deeply with us and be present in every walk of life.

- Self Awareness

This is the highest type of AI system: such systems would hold human-like intelligence and be self-aware to the point of independent thinking. They would be able to understand other beings’ emotions and manifest their own. This self-awareness could lead to the most prominent and basic instinct of all, the instinct of survival, which raises many moral dilemmas about developing AI systems of this type.

Levels of AI

There are broadly three levels of AI: Narrow AI, General AI, and Super AI.

- Narrow AI (also known as Weak AI)

These artificial intelligence systems perform a specific task and are “intelligent” only in accomplishing that task. Such AI is found in the numerous dedicated applications and systems that ease our day-to-day lives. For example, voice assistants such as Siri are Narrow AI: they specialize in understanding voice commands and performing actions based on them. Similarly, the self-driving software in cars such as Teslas and the product recommendations on e-commerce websites such as Amazon or Flipkart are examples of Narrow AI.

Most new applications are based on Narrow AI that specializes in specific tasks. This AI technology is taking root in various domains, such as:

- mapping natural resources in the mining domain

- systems to manage hotels, flights, and other services in the hospitality domain

- image recognition and the identification of patterns in images, such as identifying diseases in the medical domain

- identification of anomalous and fraudulent transactions in the banking domain

Thus, every field and sub-field is busy developing its own Narrow AI systems.

- General AI (also known as strong AI)

This form of AI tries to mimic human intelligence, which is generic and highly adaptable and can therefore solve a multitude of problems. It is still the most difficult form of AI to achieve, and it is what the term “Artificial Intelligence” commonly evokes: machines that think and “feel” like humans. This form of AI has been highly popularized by novels and movies, with numerous characters manifesting it, such as WALL-E (WALL-E), C-3PO and R2-D2 (Star Wars), the T-800 (The Terminator), HAL 9000 (2001: A Space Odyssey), TARS and CASE (Interstellar), and Agent Smith and the machines (The Matrix). There is a great deal of debate over whether humans will achieve this level of technology in the near future, or at all.

The debate over how to identify general AI is also fierce, as the term intelligence is itself vague. To address this question, Alan Turing proposed what is now known as the Turing test, which checks whether an AI system can converse like a human, since that requires a good level of intelligence. In the test, an examiner chats with multiple parties without knowing whether each one is a human or an AI system. To be considered intelligent, the AI system must mislead the examiner into thinking that it too is human. Systems such as ELIZA and PARRY managed to fool some people by exploiting loopholes rather than by actually being intelligent, which exposed the limitations of our description of intelligence: many behaviors assumed to require intelligence turned out not to. More recent systems have exploited the huge amount of real conversation data available online and can improve over time by remembering previous conversations. However, a limitation persists: modern conversational AI systems generally struggle to adapt quickly to new topics and to understand delicate yet complex things such as sarcasm, cultural ideas, and intuition.
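How a program like ELIZA can seem conversational without understanding anything is easy to see in code. The Python sketch below uses a handful of invented, ELIZA-style rules (not Weizenbaum’s original script): it matches keywords with regular expressions and echoes part of the user’s sentence back with pronouns swapped.

```python
import re

# Hypothetical ELIZA-style rules: a pattern plus a reply template that reuses
# part of the user's sentence. These are not Weizenbaum's original rules.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.+)", re.IGNORECASE), "Is that the real reason?"),
]
# Swap first- and second-person words so the echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment):
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence):
    sentence = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return "Please tell me more."  # generic fallback when no rule matches


print(respond("I need a holiday."))  # Why do you need a holiday?
print(respond("I am worried about my exams"))  # How long have you been worried about your exams?
print(respond("What is the capital of France?"))  # Please tell me more.
```

Anything outside its patterns gets a generic fallback, which is exactly the kind of loophole such systems relied on to appear intelligent.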

- Super AI (also known as Artificial Superintelligence)

This is a theoretical level of AI which imagines the capability of Artificial Intelligence to go beyond human intelligence. This level thinks of those AI systems that will be completely independent, will be able to take their own decision, and will even be able to feel, sense, and see things and emotions. The systems working at this level will be able to accomplish those tasks that even humans are not able to do and will sort of act as the next evolutionary point for humans. However, this level of AI raises many moral, ethical, and existential questions and this is the reason that often AI is seen with skepticism by the general public.

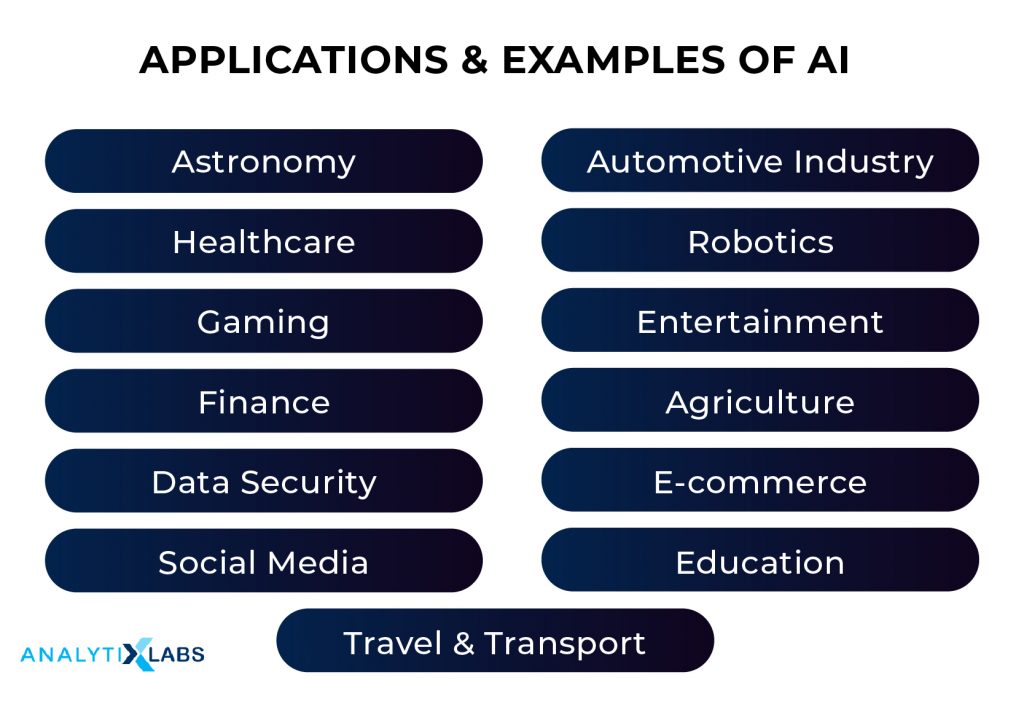

Applications of AI

In modern times, applications of AI are ubiquitous. AI is used in countless domains; the following are among the most common.

- Speech Recognition

One of the most widely adopted uses of AI is speech recognition. AI-enabled systems that recognize speech can convert speech to text, perform actions on voice commands, and even act as an additional security measure. This technology has greatly helped the development of virtual assistants such as Siri, Alexa, etc.

- Computer Vision

It seems that AI tends to tap into the sensory capabilities of humans and thus the next application is related to vision. AI systems help machines in “looking at the real world” by converting the images and the visual world around them into machine-understandable data and perform actions based on it. These technologies have paved the way for facial recognition systems that greatly help in security, surveillance, emotion detection, customer feedback, etc.

- Game Playing

Games were perhaps among the earliest applications of AI, which was deployed as an opponent for players to compete against. Various corporations later built on this idea with systems that beat humans at games, such as Deep Blue for chess and AlphaGo for Go.

- Natural Language Processing

Understanding and interacting with human language has long been one of the most difficult challenges for the tech community. Today, chatbots help ease the workload of the service sector, especially in the hospitality domain, by providing customer assistance and support. Other applications include keyboards that correct users’ spelling mistakes and predict their next words or phrases.
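Next-word suggestions can be sketched, in their simplest form, with a bigram model that counts which word tends to follow which. The Python example below is illustrative only: the tiny corpus is hypothetical, and real keyboards use far more sophisticated language models.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def suggest(model, word, k=2):
    """Return up to k most frequent next words after `word` (empty list if unseen)."""
    return [w for w, _ in model.get(word.lower(), Counter()).most_common(k)]


# Hypothetical corpus standing in for a user's typing history.
corpus = "see you soon . see you tomorrow . see you soon . thank you so much"
model = train_bigrams(corpus)
print(suggest(model, "you"))  # ['soon', 'tomorrow']
```

Ties are broken by first occurrence, since Counter.most_common keeps insertion order for equal counts; the more a user types, the better the counts reflect their habits.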

- Healthcare

AI has found great application in the healthcare domain: it enables early detection of diseases, aids researchers in developing new drugs, and helps identify mutated genes, among other things. AI-enabled support tools have also been created to assist in delicate operations that require an extremely high level of precision.

- Cybersecurity

AI systems have enabled the detection of online fraud, in some cases alerting the people and authorities concerned before it takes place. AI can also help deter cyberattacks.

Conclusion: Author’s Opinion

Artificial Intelligence is a technology that humans have dreamed about for centuries. However, only recently have we been able to develop systems that can be said to possess Artificial Intelligence. While the potential of AI entices us, especially as we see its ever-increasing use in healthcare, security, social media, etc., its growing power also raises questions. The typical skepticism around AI concerns its danger to human civilization: the fear that it will outstrip human capabilities and pose an existential threat. These concerns are not baseless, but the immediate problems are different. AI has enabled automation, allowing routine, repetitive work to be performed with utmost accuracy, and the majority of the workforce is involved in routine, repetitive jobs. Thus, the development of AI increases the risk of job losses, especially in countries with a growing young population, which are mostly underdeveloped and developing countries. The growth of AI must therefore be sustainable and help the masses.

FAQs

- Why is AI booming now?

AI development has been a long, tedious process and has seen its fair share of tough days. AI is flourishing now because several developments have come together in a short span of time. First, AI requires data, and the more the better; with the advent of the World Wide Web and the Internet of Things, the volume of accessible data has greatly increased. Second, computing power has grown enormously thanks to powerful and easily available RAM, CPUs, and GPUs, making it possible to train models in a short amount of time. Third, tech firms now provide cloud services that substantially reduce the cost of acquiring hardware. An example is Google’s TensorFlow Research Cloud, which individuals can use to develop their AI-based models.

- Where is AI used? Examples

One may not realize it, but AI is now used everywhere: in self-driving cars, in automating factory tasks, in precision robots that perform delicate surgical operations, in guiding drones and missiles, in surveillance and cybersecurity to detect threats, and in keyboards that suggest words. It also plays a great role in the virtual assistants provided by numerous tech platforms and helps suggest videos on YouTube, posts on Facebook, and products on Amazon. Its use is truly ubiquitous.

- What Makes AI So Popular?

The sheer capability and potential of AI make it highly popular. Part of its popularity comes from the fiction people associate with robots and what AI might do to mankind, a notion perpetuated by sci-fi movies and novels. In practice, however, it is the accuracy, sophistication, cost reduction, and efficiency of AI-based or AI-supported operations that lead corporation after corporation to adopt AI-based systems.

- What is AI Coded in?

Artificial Intelligence algorithms and architectures can be coded in any modern programming language. However, the scalability and simplicity of Python are why it is most commonly associated with Machine Learning and AI. Other languages, such as Haskell, R, C++, Perl, and Julia, may also be used.

- How do I start learning AI?

A common question is “how to learn AI?”. For this, one needs an artificial intelligence tutorial. One can start by learning about AI as a field, through blog posts, books, articles, etc. Because AI sits near the top of the hierarchy of computer technologies, one also needs to learn many other things: statistics (traditional and Bayesian), mathematics (linear algebra and calculus), programming (Python), datasets (types and ways to import, export, explore, mine, and manipulate them), predictive modeling and its related concepts (types of variables, underfitting, overfitting, generalization, model evaluation and validation, etc.), machine learning and its related concepts (theoretical understanding of the algorithms and ways to implement them), and finally the techniques and kinds of AI algorithms (in theory and in practice).

All these subtopics can be learned either through self-study (reading books and going through online material), effectively creating one’s own artificial intelligence tutorial, or by following an existing artificial intelligence tutorial in the form of online courses (such as those on AnalytixLabs, Coursera, Udemy, etc.) or academic programs (B.A. Mathematics / Computer Science / MS Artificial Intelligence).
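The predictive modeling concepts listed above (overfitting, generalization, model evaluation, and validation) can be seen in a small Python sketch. The study-hours data is hypothetical. A model that simply memorizes the training set scores perfectly on it but fails on held-out validation data, while a simple learned threshold generalizes.

```python
# Hypothetical data: hours studied -> passed (1) or failed (0).
train = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 1), (5.0, 1), (6.0, 1)]
validation = [(1.5, 0), (2.5, 0), (4.5, 1), (5.5, 1)]  # held out, never used for fitting


def accuracy(predict, data):
    return sum(predict(x) == y for x, y in data) / len(data)


# Model 1: memorize the training set exactly (falls back to 0 for unseen inputs).
lookup = dict(train)


def memoriser(x):
    return lookup.get(x, 0)


# Model 2: learn one threshold, picking the midpoint that best fits the training data.
xs = sorted(x for x, _ in train)
candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
best_t = max(candidates, key=lambda t: accuracy(lambda x: int(x > t), train))


def threshold_model(x):
    return int(x > best_t)


for name, model in [("memoriser", memoriser), ("threshold", threshold_model)]:
    print(name, "train:", accuracy(model, train), "validation:", accuracy(model, validation))
# memoriser train: 1.0 validation: 0.5
# threshold train: 1.0 validation: 1.0
```

Both models look perfect on the data they were fitted to; only the held-out validation set reveals which one has actually generalized.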

This article aimed to provide an artificial intelligence tutorial that helps the reader understand Artificial Intelligence: its functioning, importance, and place in today’s world of technology, its history and development to the present, and its advantages and dangers. Readers are encouraged to understand the concepts mentioned in this article, research them further, and apply them to real-world problems to better understand this field. If you have any opinions or queries related to this article, please feel free to post them and help us gain more insight into this fascinating subject.

